Linear vs Logistic Regression: Key Differences, Assumptions

Regression is fundamental to machine learning and statistical modeling. In statistics, regression is a method for modeling the relationship between one or more independent variables (predictors) and a dependent variable (outcome). Its purpose extends beyond prediction: it also helps explain how variables relate to and influence one another.

This guide explores the key distinctions between linear regression and logistic regression, two of the most widely used regression techniques in AI and data science. Whether you’re predicting house prices or classifying emails as spam, choosing the right model has a large effect on the quality of your results.

1. Understanding regression in statistics

What does regression mean in data analysis?

The core purpose of regression is to model relationships between variables. It helps answer questions like “How does studying time affect exam scores?” or “What factors influence customer churn?”

Regression covers several techniques, but all share a common goal: finding the mathematical relationship between inputs and outputs that best fits the data. Think of it as drawing a line (or curve) through your data points that minimizes prediction errors.

Types of regression models

Regression techniques vary based on the nature of your data and the relationships you’re modeling:

- Linear regression: Models linear relationships where the output is continuous

- Logistic regression: Handles binary or categorical outcomes using probability

- Nonlinear regression: Captures complex, curved relationships between variables

- Polynomial regression: Extends linear regression with terms like \(x^2\), \(x^3\), etc. to model non-linear patterns; the model remains linear in its coefficients

The choice between these methods depends on your specific problem, data characteristics, and the assumptions each model requires.

Correlation and regression: What’s the difference?

Many people confuse correlation and regression, but they serve different purposes. Correlation measures the strength and direction of a linear relationship between two variables, producing a coefficient between -1 and 1. Regression, however, goes further by creating a predictive model and quantifying how changes in independent variables affect the dependent variable.

While correlation tells you that two variables move together, regression tells you by how much and provides a framework for prediction.
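To make the distinction concrete, here is a minimal sketch on made-up data (the study-hours numbers and the seed are assumptions for illustration only). It computes both the correlation coefficient and the fitted line, and checks the identity that links them in the single-predictor case: slope = r × (std of y / std of x).

```python
import numpy as np

# Hypothetical toy data: study hours and exam scores (illustrative values only)
rng = np.random.default_rng(0)
hours = rng.uniform(0, 10, 200)
scores = 50 + 4 * hours + rng.normal(0, 5, 200)

# Correlation: a unitless number between -1 and 1
r = np.corrcoef(hours, scores)[0, 1]

# Regression: slope and intercept expressed in the units of the data
slope, intercept = np.polyfit(hours, scores, deg=1)

# For simple linear regression, slope = r * (std of y / std of x)
slope_from_r = r * scores.std() / hours.std()

print(f"Correlation r: {r:.3f}")
print(f"Slope: {slope:.3f} points per hour, intercept: {intercept:.3f}")
print(f"Slope recovered from r: {slope_from_r:.3f}")
```

The correlation says how tightly the points cluster around a line; the slope says how many score points each extra hour is associated with.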

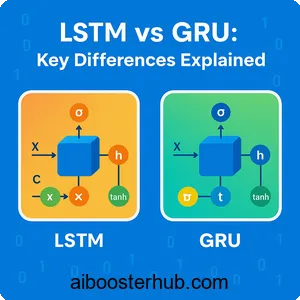

2. Linear regression explained

The linear regression model

Linear regression is the workhorse of predictive modeling. It assumes a straight-line relationship between independent variables (X) and a continuous dependent variable (y). The basic equation takes the form:

$$y = \beta_0 + \beta_1x_1 + \beta_2x_2 + \dots + \beta_nx_n + \epsilon$$

Where:

- \(y\) is the dependent variable (what you’re predicting)

- \(\beta_0\) is the intercept (y-value when all x’s are zero)

- \(\beta_1, \beta_2, \dots, \beta_n\) are regression coefficients (slopes)

- \(x_1, x_2, \dots, x_n\) are independent variables

- \(\epsilon\) represents the error term

The regression coefficient for each variable indicates how much y changes when that variable increases by one unit, holding all other variables constant.
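As a rough illustration of where the coefficients come from, the sketch below fits the equation by ordinary least squares in two ways: directly with NumPy and with scikit-learn. The data and the "true" coefficients (2, 3, -1.5) are assumptions chosen for the example.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(1)
n = 500
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
# Assumed true model: beta_0 = 2, beta_1 = 3, beta_2 = -1.5
y = 2.0 + 3.0 * x1 - 1.5 * x2 + rng.normal(0, 0.5, n)

# Least squares with an explicit column of ones for the intercept
A = np.column_stack([np.ones(n), x1, x2])
beta_hat, *_ = np.linalg.lstsq(A, y, rcond=None)

# scikit-learn solves the same least-squares problem
sk = LinearRegression().fit(np.column_stack([x1, x2]), y)
sk_beta = np.r_[sk.intercept_, sk.coef_]

print("lstsq  :", np.round(beta_hat, 3))
print("sklearn:", np.round(sk_beta, 3))

# Raising x1 by one unit with x2 held fixed changes the prediction by beta_1
base = sk.predict([[1.0, 0.0]])[0]
bumped = sk.predict([[2.0, 0.0]])[0]
print(f"Change in prediction: {bumped - base:.3f}")
```

The last lines check the "holding all other variables constant" reading: the difference between the two predictions equals the fitted coefficient on x1.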

Linear regression assumptions

The assumptions of linear regression are critical for ensuring your model produces valid results. Depending on which assumption is violated, the coefficient estimates can be biased, or the standard errors, confidence intervals, and p-values can become unreliable:

Linearity: The relationship between independent and dependent variables must be linear. You can check this using scatter plots or residual plots.

Independence: Observations should be independent of each other. This is particularly important in time-series data where autocorrelation can be problematic.

Homoscedasticity: The variance of residuals should be constant across all levels of the independent variables. In other words, the spread of residuals should be roughly the same everywhere.

Normality: Residuals should follow a normal distribution. This assumption is especially important for smaller sample sizes.

No multicollinearity: Independent variables should not be highly correlated with each other, as this makes it difficult to determine individual effects.

Practical example with Python

Let’s build a simple linear regression model to predict house prices based on square footage:

import numpy as np

import pandas as pd

from sklearn.linear_model import LinearRegression

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

# Generate sample data

np.random.seed(42)

square_feet = np.random.randint(800, 3500, 100)

# Price increases with square footage plus some noise

price = 150 * square_feet + np.random.normal(0, 50000, 100)

# Create DataFrame

df = pd.DataFrame({'square_feet': square_feet, 'price': price})

# Split data

X = df[['square_feet']]

y = df['price']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model

model = LinearRegression()

model.fit(X_train, y_train)

# Print regression coefficient

print(f"Intercept (β₀): ${model.intercept_:,.2f}")

print(f"Coefficient (β₁): ${model.coef_[0]:,.2f} per square foot")

print(f"R² Score: {model.score(X_test, y_test):.3f}")

# Make predictions

sample_sizes = pd.DataFrame({'square_feet': [1000, 2000, 3000]})  # same column name as the training data

predictions = model.predict(sample_sizes)

for size, pred in zip(sample_sizes['square_feet'], predictions):
    print(f"Predicted price for {size} sq ft: ${pred:,.2f}")

This code demonstrates how linear regression creates a predictive equation. The regression coefficient tells us the price increase per square foot, making interpretation straightforward.

3. Logistic regression explained

The logistic regression model

Despite its name, logistic regression is used primarily for classification rather than for predicting continuous values. It models the probability that an observation belongs to a particular category, making it well suited to binary outcomes like yes/no, pass/fail, or spam/not spam.

The logistic regression equation uses the logistic (sigmoid) function to transform a linear combination of inputs into a probability between 0 and 1:

$$P(y=1|X) = \frac{1}{1 + e^{-(\beta_0 + \beta_1x_1 + \dots + \beta_nx_n)}}$$

Or equivalently, using the logit transformation:

$$\log\left(\frac{P}{1-P}\right) = \beta_0 + \beta_1x_1 + \dots + \beta_nx_n$$

This log-odds form is called the logit, and it transforms the probability into a value that can range from negative to positive infinity.
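The two forms above are inverses of each other, which a few lines of NumPy can confirm. The helper names `sigmoid` and `logit` are our own, not part of any library used in this article.

```python
import numpy as np

def sigmoid(z):
    """Map a log-odds value z to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def logit(p):
    """Map a probability p in (0, 1) to log-odds."""
    return np.log(p / (1.0 - p))

z = np.array([-4.0, -1.0, 0.0, 1.0, 4.0])
p = sigmoid(z)

print("log-odds:     ", z)
print("probabilities:", np.round(p, 3))
print("round trip:   ", np.round(logit(p), 3))
```

A log-odds of 0 corresponds to a probability of 0.5, and large positive or negative log-odds push the probability toward 1 or 0 without ever reaching them.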

Key characteristics of logistic regression

Output range: Unlike linear regression which can predict any continuous value, logistic regression outputs are bounded between 0 and 1, representing probabilities.

Decision boundary: Logistic regression creates a decision boundary (typically at 0.5 probability) to classify observations into categories.

Maximum likelihood estimation: Instead of minimizing squared errors like linear regression, logistic regression uses maximum likelihood estimation to find the best-fitting parameters.
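To give a feel for what maximum likelihood estimation involves, here is a small sketch that maximizes the log-likelihood with Newton's method on simulated data. The data-generating model (log-odds = x - 5), the seed, and the iteration count are assumptions for illustration; production libraries use more careful solvers and often add regularization.

```python
import numpy as np

rng = np.random.default_rng(2)
x = rng.uniform(0, 10, 500)
# Assumed true model: log-odds = -5 + 1 * x
y = (rng.random(500) < 1 / (1 + np.exp(-(x - 5)))).astype(float)

X = np.column_stack([np.ones_like(x), x])  # intercept column plus predictor

def neg_log_likelihood(beta):
    z = X @ beta
    # -sum[y*log(p) + (1-y)*log(1-p)], written in a numerically stable form
    return np.sum(np.logaddexp(0, z) - y * z)

beta = np.zeros(2)
nll_start = neg_log_likelihood(beta)

for _ in range(25):
    p = 1 / (1 + np.exp(-(X @ beta)))
    gradient = X.T @ (y - p)                       # gradient of the log-likelihood
    information = X.T @ (X * (p * (1 - p))[:, None])  # negative Hessian
    beta = beta + np.linalg.solve(information, gradient)

# At the maximum, the gradient of the log-likelihood is (numerically) zero
p = 1 / (1 + np.exp(-(X @ beta)))
final_gradient = X.T @ (y - p)

print("Estimated intercept and slope:", np.round(beta, 3))
print(f"Negative log-likelihood: {nll_start:.1f} -> {neg_log_likelihood(beta):.1f}")
```

Unlike least squares, there is no closed-form solution here, which is why the fit is iterative.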

Interpreting logistic regression coefficients

The regression coefficient in logistic regression has a different interpretation than in linear models. A positive coefficient means that as the predictor increases, the log-odds (and thus probability) of the positive class increases.

More specifically, \(e^{\beta_1}\) represents the odds ratio—the multiplicative change in odds for a one-unit increase in the predictor.
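The odds-ratio interpretation can be checked numerically. The coefficients below are hypothetical values picked for illustration, not the output of any model in this article.

```python
import numpy as np

# Hypothetical fitted coefficients, chosen for illustration only
beta_0, beta_1 = -5.0, 0.8

def odds(x):
    """Odds of the positive class, P / (1 - P), at predictor value x."""
    p = 1 / (1 + np.exp(-(beta_0 + beta_1 * x)))
    return p / (1 - p)

odds_ratio = np.exp(beta_1)
ratios = [odds(x + 1) / odds(x) for x in (2.0, 5.0, 8.0)]

print(f"exp(beta_1) = {odds_ratio:.4f}")
for x, ratio in zip((2.0, 5.0, 8.0), ratios):
    print(f"odds({x + 1}) / odds({x}) = {ratio:.4f}")
```

The ratio of odds is the same at every starting point, even though the change in probability is not: a one-unit increase moves the probability a lot near 0.5 and very little near 0 or 1.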

Practical example with Python

Let’s build a logistic regression model to predict whether a student passes an exam based on study hours:

import numpy as np

import pandas as pd

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

# Generate sample data

np.random.seed(42)

study_hours = np.random.uniform(0, 10, 200)

# Probability of passing increases with study hours

prob_pass = 1 / (1 + np.exp(-(study_hours - 5)))

passed = (np.random.random(200) < prob_pass).astype(int)

df = pd.DataFrame({'study_hours': study_hours, 'passed': passed})

# Split data

X = df[['study_hours']]

y = df['passed']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model (note: scikit-learn's LogisticRegression applies L2 regularization by default, C=1.0)

log_model = LogisticRegression()

log_model.fit(X_train, y_train)

# Model coefficients

print(f"Intercept: {log_model.intercept_[0]:.3f}")

print(f"Coefficient: {log_model.coef_[0][0]:.3f}")

# Predictions

y_pred = log_model.predict(X_test)

print(f"\nAccuracy: {accuracy_score(y_test, y_pred):.3f}")

# Predict probabilities for different study hours

test_hours = pd.DataFrame({'study_hours': [2, 5, 8]})  # same column name as the training data

probabilities = log_model.predict_proba(test_hours)

for hours, prob in zip(test_hours['study_hours'], probabilities):
    print(f"\n{hours} hours studied:")
    print(f"  Probability of failing: {prob[0]:.3f}")
    print(f"  Probability of passing: {prob[1]:.3f}")

Notice how logistic regression provides probabilities in addition to class labels, giving you more nuanced information about classification confidence.

4. Linear regression vs logistic regression: Key differences

Dependent variable type

The most fundamental difference between logistic and linear regression lies in what they predict:

Linear regression predicts continuous numerical outcomes. Examples include predicting temperature, stock prices, customer lifetime value, or any measurement on a continuous scale. The output can theoretically range from negative infinity to positive infinity.

Logistic regression predicts categorical outcomes, most commonly binary (two classes). Examples include predicting disease presence/absence, customer churn yes/no, or email spam/legitimate. The output is a probability between 0 and 1.
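One way to see why the outcome type matters is to fit both models to the same binary outcome. The sketch below uses toy, noise-free labels (an assumption made so the result is easy to reason about): a linear fit produces "probabilities" below 0 and above 1, while the logistic fit stays within bounds.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

# Toy, noise-free labels (an assumption for illustration): pass if hours >= 5
hours = np.linspace(0, 10, 101).reshape(-1, 1)
passed = (hours.ravel() >= 5).astype(int)

lin = LinearRegression().fit(hours, passed)
log = LogisticRegression().fit(hours, passed)

lin_pred = lin.predict(hours)               # unbounded straight line
log_prob = log.predict_proba(hours)[:, 1]   # bounded S-shaped curve

print(f"Linear output range:   {lin_pred.min():.2f} to {lin_pred.max():.2f}")
print(f"Logistic output range: {log_prob.min():.2f} to {log_prob.max():.2f}")
```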

Mathematical function

Linear regression uses a simple linear function that creates a straight line (or hyperplane in multiple dimensions). The relationship between inputs and outputs is additive and direct.

Logistic regression applies the sigmoid function to a linear combination of inputs, creating an S-shaped curve. This transformation ensures outputs stay within the 0-1 probability range.

Error metrics and loss functions

Linear regression typically uses:

- Mean Squared Error (MSE): \(\frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2\)

- Root Mean Squared Error (RMSE)

- Mean Absolute Error (MAE)

- R-squared for goodness of fit

Logistic regression typically uses:

- Log loss (cross-entropy)

- Accuracy, precision, recall, F1-score

- ROC curve and AUC

- Confusion matrix metrics
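The metrics listed above are all available in scikit-learn. The following sketch computes them on tiny hand-made arrays (the numbers are invented for illustration) so that each value can be verified by hand.

```python
import numpy as np
from sklearn.metrics import (
    mean_squared_error, mean_absolute_error, r2_score,
    log_loss, accuracy_score, precision_score, recall_score, f1_score,
)

# Hypothetical regression targets and predictions
y_true = np.array([3.0, 5.0, 7.5, 10.0])
y_hat = np.array([2.5, 5.5, 7.0, 11.0])

mse = mean_squared_error(y_true, y_hat)
rmse = np.sqrt(mse)
mae = mean_absolute_error(y_true, y_hat)
r2 = r2_score(y_true, y_hat)
print(f"MSE={mse:.4f}  RMSE={rmse:.4f}  MAE={mae:.4f}  R²={r2:.4f}")

# Hypothetical class labels and predicted probabilities of the positive class
labels = np.array([0, 0, 1, 1, 1, 0])
probs = np.array([0.1, 0.4, 0.35, 0.8, 0.9, 0.6])
preds = (probs >= 0.5).astype(int)  # classify at the usual 0.5 threshold

ll = log_loss(labels, probs)
# Log loss written out: -mean[y*log(p) + (1-y)*log(1-p)]
manual_ll = -np.mean(labels * np.log(probs) + (1 - labels) * np.log(1 - probs))

acc = accuracy_score(labels, preds)
prec = precision_score(labels, preds)
rec = recall_score(labels, preds)
f1 = f1_score(labels, preds)
print(f"Log loss={ll:.4f}  Accuracy={acc:.3f}  Precision={prec:.3f}  Recall={rec:.3f}  F1={f1:.3f}")
```

Note that log loss is computed from the probabilities, while accuracy, precision, recall, and F1 depend on the thresholded labels.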

Interpretation of results

A regression table for linear regression shows how much the dependent variable changes for each unit change in a predictor. If a coefficient is 50, the predicted output increases by 50 units when that predictor increases by 1, holding the other predictors constant.

In logistic regression, coefficients represent changes in log-odds, which is less intuitive. You often need to exponentiate coefficients to get odds ratios for proper interpretation.

Visual comparison

import numpy as np

import matplotlib.pyplot as plt

# Create sample data

x = np.linspace(0, 10, 100)

linear_y = 2 + 3 * x

logistic_y = 1 / (1 + np.exp(-(x - 5)))

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 4))

# Linear regression

ax1.plot(x, linear_y, 'b-', linewidth=2)

ax1.set_xlabel('Independent Variable (X)')

ax1.set_ylabel('Dependent Variable (y)')

ax1.set_title('Linear Regression\n(Continuous Output)')

ax1.grid(True, alpha=0.3)

# Logistic regression

ax2.plot(x, logistic_y, 'r-', linewidth=2)

ax2.axhline(y=0.5, color='k', linestyle='--', alpha=0.5, label='Decision boundary')

ax2.set_xlabel('Independent Variable (X)')

ax2.set_ylabel('Probability P(y=1)')

ax2.set_title('Logistic Regression\n(Probability Output)')

ax2.legend()

ax2.grid(True, alpha=0.3)

ax2.set_ylim(-0.1, 1.1)

plt.tight_layout()

plt.savefig('linear_vs_logistic.png', dpi=150, bbox_inches='tight')

5. When to use linear vs logistic regression

Use linear regression when:

Your outcome is continuous: If you’re predicting things like sales revenue, temperature, distance, time, or any measurement on a continuous scale, linear regression is your go-to choice.

You need interpretable relationships: Linear models provide straightforward interpretation—each coefficient directly tells you the change in outcome per unit change in the predictor.

The relationship is approximately linear: Check scatter plots of your predictors versus the outcome. If you see roughly straight-line patterns, linear regression will work well.

Example scenarios:

- Predicting house prices based on features

- Estimating energy consumption from weather data

- Forecasting sales based on advertising spend

- Modeling crop yield from fertilizer amounts

Use logistic regression when:

Your outcome is categorical: When you need to classify observations into categories (especially binary: yes/no, true/false, 0/1), logistic regression is appropriate.

You need probability estimates: Beyond simple classification, logistic regression gives you probability scores, which are valuable for ranking, threshold tuning, and understanding prediction confidence.

You’re solving a classification problem: Any task involving categorization, from medical diagnosis to fraud detection to spam filtering.

Example scenarios:

- Predicting customer churn (will churn or won’t churn)

- Diagnosing disease presence (positive or negative)

- Classifying loan default risk (will default or won’t default)

- Determining email spam (spam or legitimate)
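The threshold tuning mentioned above can be sketched as follows. The simulated study-hours data, the seed, and the three thresholds are assumptions for illustration; in practice the threshold is chosen from the relative cost of false positives and false negatives.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score

rng = np.random.default_rng(3)
x = rng.uniform(0, 10, 1000).reshape(-1, 1)
# Assumed true model: log-odds of the positive class = x - 5
y = (rng.random(1000) < 1 / (1 + np.exp(-(x.ravel() - 5)))).astype(int)

clf = LogisticRegression().fit(x, y)
prob = clf.predict_proba(x)[:, 1]

thresholds = [0.3, 0.5, 0.7]
precisions, recalls = [], []
for threshold in thresholds:
    pred = (prob >= threshold).astype(int)  # custom cut-off instead of the default 0.5
    precisions.append(precision_score(y, pred, zero_division=0))
    recalls.append(recall_score(y, pred, zero_division=0))
    print(f"threshold={threshold:.1f}  precision={precisions[-1]:.3f}  recall={recalls[-1]:.3f}")
```

Raising the threshold can only shrink the set of predicted positives, so recall never increases as the threshold goes up; precision usually moves in the opposite direction.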

What about nonlinear relationships?

When your data shows curved patterns, neither standard linear nor logistic regression may be sufficient. This is where nonlinear regression comes in. You have several options:

Polynomial regression: Transform your features by adding polynomial terms (\(x^2\), \(x^3\), etc.). This extends linear regression to capture curves while maintaining many of its nice properties.

Generalized additive models (GAMs): Allow flexible, non-parametric relationships while maintaining interpretability.

Tree-based methods or neural networks: For highly complex, non-linear relationships that can’t be captured by simple transformations.

import numpy as np

from sklearn.linear_model import LinearRegression

from sklearn.preprocessing import PolynomialFeatures

from sklearn.pipeline import make_pipeline

# Polynomial regression example

X = np.linspace(0, 10, 100).reshape(-1, 1)

y = 0.5 * X.ravel()**2 - 5 * X.ravel() + 25 + np.random.normal(0, 5, 100)

# Create polynomial features and fit model

degree = 2

poly_model = make_pipeline(PolynomialFeatures(degree), LinearRegression())

poly_model.fit(X, y)

# This creates a model: y = β₀ + β₁x + β₂x²

print(f"R² Score: {poly_model.score(X, y):.3f}")

6. Assumptions and diagnostics

Checking linear regression assumptions

Properly validating the assumptions of linear regression is essential for trustworthy results. Here’s how to check each:

Linearity check: Plot predictors against the outcome and look for linear patterns. Alternatively, plot residuals versus fitted values—you should see random scatter around zero.

Independence check: Ensure your data collection process doesn’t introduce dependencies. For time-series data, check for autocorrelation using the Durbin-Watson test.

Homoscedasticity check: In a residual vs. fitted plot, the vertical spread should be consistent. A funnel shape indicates heteroscedasticity (violation).

Normality check: Create a Q-Q plot of residuals or use the Shapiro-Wilk test. For large samples, the central limit theorem makes this less critical.

Multicollinearity check: Calculate Variance Inflation Factors (VIF). Values above 5-10 suggest problematic multicollinearity.

import numpy as np

import matplotlib.pyplot as plt

from scipy import stats

# Diagnostic plots for linear regression

def diagnose_linear_regression(model, X, y):
    """Draw four residual diagnostic plots for a fitted regression model."""
    predictions = model.predict(X)
    residuals = y - predictions

    fig, axes = plt.subplots(2, 2, figsize=(12, 10))

    # Residuals vs Fitted: random scatter around zero supports linearity
    axes[0, 0].scatter(predictions, residuals, alpha=0.5)
    axes[0, 0].axhline(y=0, color='r', linestyle='--')
    axes[0, 0].set_xlabel('Fitted values')
    axes[0, 0].set_ylabel('Residuals')
    axes[0, 0].set_title('Residuals vs Fitted')

    # Q-Q plot: points close to the line support normality of residuals
    stats.probplot(residuals, dist="norm", plot=axes[0, 1])
    axes[0, 1].set_title('Normal Q-Q Plot')

    # Scale-Location plot: a flat band supports homoscedasticity
    sqrt_abs_std_residuals = np.sqrt(np.abs(residuals / np.std(residuals)))
    axes[1, 0].scatter(predictions, sqrt_abs_std_residuals, alpha=0.5)
    axes[1, 0].set_xlabel('Fitted values')
    axes[1, 0].set_ylabel('√|Standardized residuals|')
    axes[1, 0].set_title('Scale-Location')

    # Residuals histogram
    axes[1, 1].hist(residuals, bins=30, edgecolor='black')
    axes[1, 1].set_xlabel('Residuals')
    axes[1, 1].set_ylabel('Frequency')
    axes[1, 1].set_title('Residual Distribution')

    plt.tight_layout()
    return fig
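The plots above cover linearity, homoscedasticity, and normality. For the two remaining checks, here is a minimal sketch that computes Variance Inflation Factors and the Durbin-Watson statistic from their definitions. The helper functions and the demo data are our own illustrations; libraries such as statsmodels provide ready-made versions.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R²_j), with R²_j from regressing column j on the other columns."""
    X = np.asarray(X, dtype=float)
    vifs = []
    for j in range(X.shape[1]):
        others = np.delete(X, j, axis=1)
        r2 = LinearRegression().fit(others, X[:, j]).score(others, X[:, j])
        vifs.append(1.0 / (1.0 - r2))
    return np.array(vifs)

def durbin_watson(residuals):
    """Ranges from 0 to 4; values near 2 suggest little first-order autocorrelation."""
    residuals = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(residuals) ** 2) / np.sum(residuals ** 2)

# Hypothetical demo data: b is nearly a copy of a, c is unrelated to both
rng = np.random.default_rng(4)
n = 300
a = rng.normal(size=n)
b = a + rng.normal(0, 0.1, n)
c = rng.normal(size=n)
X_demo = np.column_stack([a, b, c])
y_demo = 1 + 2 * a + 3 * c + rng.normal(0, 1, n)

vifs = variance_inflation_factors(X_demo)
fit = LinearRegression().fit(X_demo, y_demo)
dw = durbin_watson(y_demo - fit.predict(X_demo))

print("VIFs:", np.round(vifs, 1))
print(f"Durbin-Watson: {dw:.2f}")
```

Here the first two columns get very large VIFs because each is almost fully explained by the other, while the third stays close to 1. The Durbin-Watson value is only meaningful when the rows have a natural order, such as time.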

Logistic regression assumptions

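Logistic regression has fewer assumptions than linear regression (for example, it does not require normally distributed residuals or constant error variance), but the remaining ones are still important: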

Binary outcome: For standard logistic regression, the dependent variable should have two categories. Extensions exist for multiple categories (multinomial logistic regression).

Independence of observations: Each observation should be independent, similar to linear regression.

Linearity of logit: The log-odds should have a linear relationship with continuous predictors. Check this by plotting log-odds versus predictors.

No perfect multicollinearity: While some correlation is acceptable, predictors shouldn’t be perfectly correlated.

Large sample size: Logistic regression requires sufficient observations in each outcome category for reliable estimates. A rule of thumb is at least 10 events per predictor variable.
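Two of these checks are easy to sketch in code: the events-per-variable rule of thumb and an empirical look at the linearity of the logit. The simulated data, the five equal-width bins, and the 0.5 continuity correction are assumptions for illustration, not a standard recipe.

```python
import numpy as np

rng = np.random.default_rng(5)
x = rng.uniform(0, 10, 2000)
# Assumed true model: log-odds = x - 5, so the logit really is linear in x
y = (rng.random(2000) < 1 / (1 + np.exp(-(x - 5)))).astype(int)

# Events per variable: size of the rarer outcome class divided by the number of predictors
n_predictors = 1
epv = min(y.sum(), len(y) - y.sum()) / n_predictors
print(f"Events per variable: {epv:.0f}")

# Empirical log-odds within equal-width bins of the predictor
edges = np.linspace(0, 10, 6)
centers, log_odds = [], []
for lo, hi in zip(edges[:-1], edges[1:]):
    in_bin = (x >= lo) & (x < hi)
    events = y[in_bin].sum()
    non_events = in_bin.sum() - events
    # Add 0.5 to each count so an empty cell cannot produce log(0)
    log_odds.append(np.log((events + 0.5) / (non_events + 0.5)))
    centers.append((lo + hi) / 2)

for center, value in zip(centers, log_odds):
    print(f"x ≈ {center:.0f}: empirical log-odds = {value:.2f}")
```

If the binned log-odds rise or fall in roughly equal steps, the linearity-of-logit assumption looks reasonable; a clear bend suggests transforming the predictor or adding a nonlinear term.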

7. Advanced considerations

Regularization techniques

Both linear and logistic regression can benefit from regularization to prevent overfitting:

Ridge regression (L2): Adds penalty proportional to the square of coefficients: \(\sum\beta_i^2\). This shrinks coefficients but keeps all variables in the model.

Lasso regression (L1): Adds penalty proportional to the absolute value of coefficients: \(\sum|\beta_i|\). This can shrink coefficients to exactly zero, performing feature selection.

Elastic Net: Combines L1 and L2 penalties for a balance between Ridge and Lasso.

from sklearn.linear_model import Ridge, Lasso, ElasticNet

# Example with regularization (assumes X_train and y_train hold the house-price split from section 2)

ridge_model = Ridge(alpha=1.0)

lasso_model = Lasso(alpha=0.1)

elastic_model = ElasticNet(alpha=0.1, l1_ratio=0.5)

# Fit models

ridge_model.fit(X_train, y_train)

lasso_model.fit(X_train, y_train)

elastic_model.fit(X_train, y_train)

print("Ridge coefficients:", ridge_model.coef_)

print("Lasso coefficients:", lasso_model.coef_)

print("Elastic Net coefficients:", elastic_model.coef_)
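With a single predictor, as in the snippet above, the difference between Ridge and Lasso is hard to see. The sketch below uses five synthetic features, of which only the first two affect the outcome (an assumption built into the simulated data), to show Lasso's feature-selection behavior. The `alpha` values are arbitrary illustrations.

```python
import numpy as np
from sklearn.linear_model import Ridge, Lasso
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(6)
n = 200
X_multi = rng.normal(size=(n, 5))
# Assumed true model: only the first two features matter
y_multi = 3.0 * X_multi[:, 0] - 2.0 * X_multi[:, 1] + rng.normal(0, 1.0, n)

# Penalties act on coefficient size, so put features on a common scale first
X_scaled = StandardScaler().fit_transform(X_multi)

ridge = Ridge(alpha=1.0).fit(X_scaled, y_multi)
lasso = Lasso(alpha=0.5).fit(X_scaled, y_multi)

print("Ridge coefficients:", np.round(ridge.coef_, 3))
print("Lasso coefficients:", np.round(lasso.coef_, 3))
```

Ridge keeps small nonzero weights on the three irrelevant features, while Lasso sets them to exactly zero and shrinks the two relevant coefficients toward zero.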

Feature engineering and transformations

Sometimes the raw form of your data doesn’t meet regression assumptions. Consider these transformations:

Log transformation: For right-skewed data, using \(\log(y)\) or \(\log(x)\) can linearize relationships and stabilize variance.

Square root or cube root: Alternative transformations for non-negative data.

Interaction terms: Multiply two predictors to capture how their combined effect differs from their individual effects.

Binning continuous variables: Sometimes converting continuous variables to categories improves model stability, though at the cost of information loss.
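As an example of the log transformation, the sketch below simulates an outcome that grows exponentially with the predictor (an assumed data-generating process) and fits a straight line to both the raw and the log-transformed outcome.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(7)
x = rng.uniform(0, 10, 300).reshape(-1, 1)
# Assumed process: exponential growth with multiplicative noise
y = np.exp(0.5 * x.ravel() + rng.normal(0, 0.3, 300))

raw_fit = LinearRegression().fit(x, y)
log_fit = LinearRegression().fit(x, np.log(y))

r2_raw = raw_fit.score(x, y)
r2_log = log_fit.score(x, np.log(y))

print(f"R² with raw y:  {r2_raw:.3f}")
print(f"R² with log(y): {r2_log:.3f}")
print(f"Slope on the log scale: {log_fit.coef_[0]:.3f}")
```

The two R² values are measured on different scales, so treat the comparison as a rough indication that the log-scale relationship is closer to linear. On the log scale, the slope is interpreted as an approximate proportional change in y per unit of x.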

Model evaluation and selection

For linear regression:

- Cross-validation to estimate out-of-sample performance

- Adjusted R² to account for model complexity

- AIC/BIC for comparing models with different numbers of predictors

- Residual standard error for prediction interval estimation

For logistic regression:

- Cross-validation with stratified sampling

- ROC curves to evaluate classification performance across thresholds

- Calibration plots to check if predicted probabilities match actual frequencies

- Precision-recall curves for imbalanced datasets

from sklearn.model_selection import cross_val_score

from sklearn.metrics import roc_curve, auc, roc_auc_score

# Cross-validation for linear regression (assumes model, X, and y are the house-price model and data from section 2)

cv_scores = cross_val_score(model, X, y, cv=5, scoring='r2')

print(f"Cross-validated R² scores: {cv_scores}")

print(f"Mean R²: {cv_scores.mean():.3f} (+/- {cv_scores.std() * 2:.3f})")

# ROC curve for logistic regression

y_probs = log_model.predict_proba(X_test)[:, 1]

fpr, tpr, thresholds = roc_curve(y_test, y_probs)

roc_auc = auc(fpr, tpr)

plt.figure(figsize=(8, 6))

plt.plot(fpr, tpr, color='darkorange', lw=2,
         label=f'ROC curve (AUC = {roc_auc:.2f})')

plt.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Receiver Operating Characteristic (ROC) Curve')

plt.legend(loc="lower right")

plt.grid(True, alpha=0.3)
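The stratified cross-validation and calibration checks listed earlier for logistic regression are not covered by the snippet above, so here is a hedged sketch of both. The simulated data, fold count, and number of calibration bins are assumptions for illustration.

```python
import numpy as np
from sklearn.calibration import calibration_curve
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split

rng = np.random.default_rng(8)
hours = rng.uniform(0, 10, 1000).reshape(-1, 1)
# Assumed true model: log-odds of passing = hours - 5
passed = (rng.random(1000) < 1 / (1 + np.exp(-(hours.ravel() - 5)))).astype(int)

# Stratified folds keep the pass/fail ratio similar in every split
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
auc_scores = cross_val_score(LogisticRegression(), hours, passed, cv=cv, scoring="roc_auc")
print(f"Cross-validated AUC: {auc_scores.mean():.3f} (std {auc_scores.std():.3f})")

# Calibration: compare predicted probabilities with observed pass rates per bin
X_tr, X_te, y_tr, y_te = train_test_split(
    hours, passed, test_size=0.3, stratify=passed, random_state=0
)
clf = LogisticRegression().fit(X_tr, y_tr)
frac_pos, mean_pred = calibration_curve(y_te, clf.predict_proba(X_te)[:, 1], n_bins=5)

for predicted, observed in zip(mean_pred, frac_pos):
    print(f"mean predicted = {predicted:.2f}, observed pass rate = {observed:.2f}")
```

For a well-calibrated model, the observed pass rate in each bin should be close to the mean predicted probability for that bin.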

8. Knowledge Check

Quiz 1: The Core Purpose of Regression

Quiz 2: The Linear Regression Model Equation

What does \(y\) represent? \(y\) represents the continuous numerical outcome that the model aims to predict.