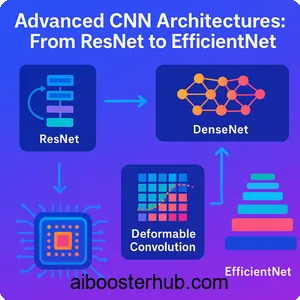

Advanced CNN Architectures: From ResNet to EfficientNet

The evolution of convolutional neural networks has been nothing short of revolutionary. From the early days of LeNet to the sophisticated architectures we use today, CNN models have transformed how machines perceive and understand visual information.

In this guide, we’ll explore influential architectures that have pushed the boundaries of deep convolutional networks: residual networks (ResNet), densely connected convolutional networks (DenseNet), deformable convolutional networks, MobileNets, and EfficientNet.


1. The foundation: Understanding modern neural network architecture challenges

The Vanishing Gradient Problem

Before diving into advanced architectures, it’s essential to understand the challenges that drove their development. Traditional deep neural networks face several fundamental problems as they grow deeper. The vanishing gradient problem becomes increasingly severe with depth, making it difficult to train networks with many layers effectively. As gradients backpropagate through numerous layers, they can become exponentially small, causing the early layers to learn extremely slowly or not at all.
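To see the effect numerically, the sketch below builds a plain 20-layer multilayer perceptron with sigmoid activations (whose derivative is at most 0.25) and compares the gradient that reaches the first and last layers after one backward pass. The depth, width, and the use of a simple sum as the loss are arbitrary choices for illustration, not a real training setup, and `layer_grad_norms` is a hypothetical helper.

```python
import torch
import torch.nn as nn


def layer_grad_norms(depth=20, width=64, seed=0):
    """Gradient norm of each Linear layer's weight in a plain sigmoid MLP (no skip connections)."""
    torch.manual_seed(seed)
    layers = []
    for _ in range(depth):
        layers += [nn.Linear(width, width), nn.Sigmoid()]
    net = nn.Sequential(*layers)

    # Arbitrary scalar loss: we only care about how gradients propagate backward
    net(torch.randn(32, width)).sum().backward()
    return [m.weight.grad.norm().item() for m in net if isinstance(m, nn.Linear)]


norms = layer_grad_norms()
print(f"first layer: {norms[0]:.2e}, last layer: {norms[-1]:.2e}")
```

On a setup like this, the first layer's gradient typically comes out many orders of magnitude smaller than the last layer's, which is exactly the slow early-layer learning described above.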

The Degradation Problem

Another critical challenge is the degradation problem. Counterintuitively, researchers discovered that simply adding more layers to a network doesn’t always improve performance. In fact, deeper networks sometimes perform worse than their shallower counterparts, even on training data. This isn’t due to overfitting but rather to optimization difficulties.

Computational Efficiency Challenges

Computational efficiency presents yet another hurdle. As CNN models become more sophisticated and accurate, they also become more resource-intensive. This creates a significant barrier for deployment on mobile devices and edge computing platforms, where memory and processing power are limited. The challenge isn’t just about achieving high accuracy—it’s about finding the optimal balance between model performance and computational requirements.

2. ResNet: Revolutionary skip connections

ResNet introduced a paradigm shift in neural network architecture design through the concept of residual learning. Instead of learning the desired underlying mapping directly, residual networks learn the residual mapping: the difference between the desired output and the input. This seemingly simple change had profound implications for training deep neural networks.

The residual block architecture

The core innovation of ResNet lies in skip connections, also called shortcut connections. These connections allow the input to bypass one or more layers and be added directly to the output of those layers. Mathematically, if we denote the input as \(x\) and the desired underlying mapping as \(H(x)\), traditional networks learn \(H(x)\) directly. ResNet instead learns the residual function:

$$F(x) = H(x) - x$$

The output then becomes:

$$y = F(x) + x$$

This formulation makes it easier for the network to learn identity mappings when needed. If an identity mapping is optimal, the network can simply drive the residual \(F(x)\) to zero, which is much easier than forcing multiple nonlinear layers to learn an identity function.
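A minimal sketch of this argument, assuming a toy residual unit (`TinyResidual`, hypothetical) whose residual branch \(F\) is a 3×3 convolution followed by batch normalization. Setting the batch-norm scale and shift to zero makes \(F(x) = 0\) for every input, so the unit reduces exactly to the identity \(y = x\), whereas a plain stack of layers would have to reproduce that mapping through its weights.

```python
import torch
import torch.nn as nn


class TinyResidual(nn.Module):
    """Hypothetical minimal residual unit: y = F(x) + x, with F = BN(conv3x3(x))."""

    def __init__(self, channels):
        super().__init__()
        self.conv = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)
        self.bn = nn.BatchNorm2d(channels)

    def forward(self, x):
        return self.bn(self.conv(x)) + x  # F(x) + x


block = TinyResidual(8)
# Drive the residual to zero: with BN scale (gamma) and shift (beta) both zero,
# F(x) = 0 for any input, so the unit computes exactly y = x.
nn.init.zeros_(block.bn.weight)
nn.init.zeros_(block.bn.bias)

x = torch.randn(2, 8, 16, 16)
print(torch.allclose(block(x), x))  # True
```

Some training recipes use the same idea at initialization by zero-initializing the last batch-norm scale in each residual branch; torchvision's ResNet exposes this through its `zero_init_residual` argument.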

Implementation and practical benefits

Here’s a practical implementation of a residual block in Python using PyTorch:

```python
import torch
import torch.nn as nn

class ResidualBlock(nn.Module):
    def __init__(self, in_channels, out_channels, stride=1):
        super(ResidualBlock, self).__init__()
        # Main path: two 3x3 convolutions, each followed by batch norm
        self.conv1 = nn.Conv2d(in_channels, out_channels,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = nn.Conv2d(out_channels, out_channels,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)

        # Skip connection: identity by default, 1x1 projection when the shape changes
        self.shortcut = nn.Sequential()
        if stride != 1 or in_channels != out_channels:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels)
            )

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        # Add skip connection: y = F(x) + x
        out += self.shortcut(identity)
        out = self.relu(out)

        return out
```

The power of ResNet lies in its ability to train very deep networks, with well over 100 layers (the original paper trained 152-layer models on ImageNet), without suffering from the degradation problem. This makes it possible to build models that learn richer features, and a ResNet ensemble won the ILSVRC 2015 ImageNet classification challenge.
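One way to see why skip connections help, sketched under simple assumptions: the toy model below (`DeepMLP`, hypothetical) stacks 20 Linear+Sigmoid layers either plainly or with an identity shortcut around each layer, and compares the gradient norm that reaches the first layer. It uses fully connected layers instead of convolutions only to keep the example small.

```python
import torch
import torch.nn as nn


class DeepMLP(nn.Module):
    """Hypothetical toy model: `depth` Linear+Sigmoid layers, optionally with
    an identity skip connection around each one (x -> x + f(x))."""

    def __init__(self, depth=20, width=64, residual=False):
        super().__init__()
        self.residual = residual
        self.blocks = nn.ModuleList(
            [nn.Sequential(nn.Linear(width, width), nn.Sigmoid()) for _ in range(depth)]
        )

    def forward(self, x):
        for block in self.blocks:
            x = (x + block(x)) if self.residual else block(x)
        return x


def first_layer_grad_norm(residual, seed=0):
    """Gradient norm reaching the first Linear layer for an arbitrary scalar loss."""
    torch.manual_seed(seed)
    net = DeepMLP(residual=residual)
    net(torch.randn(32, 64)).sum().backward()
    return net.blocks[0][0].weight.grad.norm().item()


plain = first_layer_grad_norm(residual=False)
skip = first_layer_grad_norm(residual=True)
print(f"plain: {plain:.2e}   with skip connections: {skip:.2e}")
```

Because the shortcut adds an identity term to each layer's Jacobian, the gradient has a path back to the first layer that is not repeatedly scaled down, so the residual variant's first-layer gradient is expected to be many orders of magnitude larger than the plain one's.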

3. DenseNet: Densely connected convolutional networks

While ResNet demonstrated the power of skip connections, DenseNet took this concept further: within each dense block, every layer is connected to every subsequent layer in a feed-forward fashion. Instead of adding feature maps as ResNet does, DenseNet concatenates them, which maximizes information flow between layers. This architecture is known as the densely connected convolutional network.

Dense connectivity pattern

In DenseNet, each layer receives feature maps from all preceding layers and passes its own feature maps to all subsequent layers. For a network with \(L\) layers, there are \(\frac{L(L+1)}{2}\) connections instead of just \(L\) as in traditional architectures. The \(l\)-th layer receives the concatenation of feature maps from all previous layers:

$$x_l = H_l([x_0, x_1, \ldots, x_{l-1}])$$

where \([x_0, x_1, \ldots, x_{l-1}]\) represents the concatenation of feature maps produced in layers \(0, \ldots, l-1\), and \(H_l\) represents a composite function of operations (batch normalization, ReLU, and convolution).

Growth rate and efficiency

A key hyperparameter in DenseNet is the growth rate \(k\), which defines how many feature maps each layer adds to the “collective knowledge” of the network. Even with a small growth rate like \(k=12\), DenseNet can achieve excellent results because each layer has access to all preceding feature maps.
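The channel bookkeeping behind the connection count and the growth rate can be checked with a few lines of arithmetic. The sketch below assumes a block input of \(k_0\) channels; layer \(l\) then receives \(k_0 + (l-1)k\) channels, and the block outputs \(k_0 + Lk\). The values \(k_0 = 64\), \(k = 32\), \(L = 6\) are only an example, and both helper functions are hypothetical.

```python
def dense_block_channels(k0: int, k: int, num_layers: int):
    """Input channels seen by each layer of a dense block, plus the block's output width.

    Layer l (1-indexed) receives x_0 (k0 maps) plus k maps from each of the l-1 earlier layers.
    """
    inputs = [k0 + (l - 1) * k for l in range(1, num_layers + 1)]
    return inputs, k0 + num_layers * k


def dense_connections(num_layers: int) -> int:
    """Direct connections: layer l reads the l feature maps x_0, ..., x_{l-1}."""
    return sum(l for l in range(1, num_layers + 1))


inputs, out = dense_block_channels(k0=64, k=32, num_layers=6)
print(inputs, out)                       # [64, 96, 128, 160, 192, 224] 256
print(dense_connections(6), 6 * 7 // 2)  # 21 21, matching L(L+1)/2
```

The same formula appears in the `DenseBlock` code below, where layer `i` (counting from 0) is built with `in_channels + i * growth_rate` input channels.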

Here’s how to implement a dense block:

```python
import torch
import torch.nn as nn

class DenseLayer(nn.Module):
    def __init__(self, in_channels, growth_rate):
        super(DenseLayer, self).__init__()
        # Bottleneck: BN-ReLU-1x1 conv to 4k channels, then BN-ReLU-3x3 conv to k channels
        self.bn1 = nn.BatchNorm2d(in_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv1 = nn.Conv2d(in_channels, 4 * growth_rate,
                               kernel_size=1, bias=False)
        self.bn2 = nn.BatchNorm2d(4 * growth_rate)
        self.conv2 = nn.Conv2d(4 * growth_rate, growth_rate,
                               kernel_size=3, padding=1, bias=False)

    def forward(self, x):
        out = self.conv1(self.relu(self.bn1(x)))
        out = self.conv2(self.relu(self.bn2(out)))
        return torch.cat([x, out], 1)  # Concatenate along channel dimension

class DenseBlock(nn.Module):
    def __init__(self, num_layers, in_channels, growth_rate):
        super(DenseBlock, self).__init__()
        self.layers = nn.ModuleList()
        for i in range(num_layers):
            # Layer i sees the block input plus the i * growth_rate maps added so far
            self.layers.append(
                DenseLayer(in_channels + i * growth_rate, growth_rate)
            )

    def forward(self, x):
        for layer in self.layers:
            x = layer(x)  # each layer appends growth_rate new channels
        return x
```

DenseNet offers several advantages over traditional architectures. The short paths between early and late layers improve gradient flow and alleviate the vanishing gradient problem. Because each layer can read the “collective knowledge” of all previous layers, features are reused rather than relearned, which makes the network more parameter-efficient.

4. Deformable convolutional networks: Adaptive spatial sampling

Traditional CNN models sample their input on a fixed grid regardless of the input content, which limits their ability to model geometric transformations. Deformable convolutional networks address this by introducing learnable offsets that let the network adapt its sampling locations, and therefore its effective receptive field, to the input. This makes the architecture particularly powerful for tasks involving geometric variation, such as object detection and semantic segmentation.

Understanding deformable convolutions

In standard convolution, sampling locations are fixed in a regular grid. For a 3×3 convolution, the sampling grid \(\mathcal{R}\) is defined as:

$$\mathcal{R} = \{(-1,-1), (-1,0), \ldots, (0,1), (1,1)\}$$

Deformable convolutions augment this regular grid with learnable offsets \(\{\Delta p_n \mid n = 1, \ldots, N\}\), where \(N = |\mathcal{R}|\). The output at position \(p_0\) becomes:

$$y(p_0) = \sum_{p_n \in \mathcal{R}} w(p_n) \cdot x(p_0 + p_n + \Delta p_n)$$

The offsets \(\Delta p_n\) are learned through an additional convolutional layer applied to the same input feature map. Since these offsets are typically fractional, bilinear interpolation is used to compute the input feature values at non-integer locations.
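To make the sampling formula concrete, the sketch below evaluates \(y(p_0)\) for a single output position directly from the equation, using a hand-written bilinear interpolation with zero padding outside the feature map. It is slow and for illustration only; `bilinear_sample` and `deformable_output_at` are hypothetical helpers. With all offsets set to zero it should reproduce an ordinary 3×3 convolution, which the last lines check against `torch.nn.functional.conv2d`.

```python
import math

import torch
import torch.nn.functional as F

# Regular sampling grid R for a 3x3 kernel, in the same order as the kernel's (row, col) indices
GRID = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def bilinear_sample(feature, y, x):
    """Bilinearly interpolate a (C, H, W) map at fractional (y, x); zero outside the map."""
    C, H, W = feature.shape
    y0, x0 = math.floor(y), math.floor(x)
    wy, wx = y - y0, x - x0  # weights of the neighbours at row y0+1 and column x0+1

    def at(r, c):
        if 0 <= r < H and 0 <= c < W:
            return feature[:, r, c]
        return feature.new_zeros(C)  # zero padding, like conv2d(padding=1)

    return ((1 - wy) * (1 - wx) * at(y0, x0) + (1 - wy) * wx * at(y0, x0 + 1)
            + wy * (1 - wx) * at(y0 + 1, x0) + wy * wx * at(y0 + 1, x0 + 1))


def deformable_output_at(feature, weight, p0, offsets):
    """y(p0) = sum over p_n in R of w(p_n) * x(p0 + p_n + delta_p_n), for one position p0.

    feature: (C_in, H, W); weight: (C_out, C_in, 3, 3); p0: (row, col);
    offsets: nine (dy, dx) pairs, one offset per grid point, in GRID order.
    """
    out = feature.new_zeros(weight.shape[0])
    for (dy, dx), (ody, odx) in zip(GRID, offsets):
        sample = bilinear_sample(feature, p0[0] + dy + ody, p0[1] + dx + odx)  # (C_in,)
        out = out + weight[:, :, dy + 1, dx + 1] @ sample  # apply w(p_n)
    return out


# Sanity check: with all offsets zero this should match an ordinary 3x3 convolution
torch.manual_seed(0)
x = torch.randn(2, 5, 6)     # C_in=2, H=5, W=6
w = torch.randn(4, 2, 3, 3)  # C_out=4
reference = F.conv2d(x.unsqueeze(0), w, padding=1)[0]
zero_offsets = [(0.0, 0.0)] * 9
print(torch.allclose(deformable_output_at(x, w, (2, 3), zero_offsets),
                     reference[:, 2, 3], atol=1e-5))  # True
```

The loop is far too slow for real use, which is why practical implementations rely on batched kernels, but it mirrors the equation term by term: a nonzero \(\Delta p_n\) simply moves the point where \(x\) is sampled before the weight \(w(p_n)\) is applied.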

Practical implementation

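Here’s a compact implementation. The offset branch is an ordinary convolution, and the bilinear sampling at \(p_0 + p_n + \Delta p_n\) is delegated to `torchvision.ops.deform_conv2d`, which provides CPU and CUDA kernels:

```python
import torch
import torch.nn as nn
from torchvision.ops import deform_conv2d

class DeformableConv2d(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size=3, padding=1):
        super(DeformableConv2d, self).__init__()
        self.padding = padding
        # Regular convolution: only its weight is used, applied at deformed locations
        self.conv = nn.Conv2d(in_channels, out_channels,
                              kernel_size=kernel_size, padding=padding, bias=False)
        # Offset prediction layer (predicts 2N offsets: a 2D offset for each of N sampling locations)
        self.offset_conv = nn.Conv2d(in_channels, 2 * kernel_size * kernel_size,
                                     kernel_size=kernel_size, padding=padding)
        # Initialize offsets to zero so training starts from the regular grid
        nn.init.constant_(self.offset_conv.weight, 0)
        nn.init.constant_(self.offset_conv.bias, 0)

    def forward(self, x):
        # Predict offsets for every sampling location at every output position
        offset = self.offset_conv(x)
        # Sample x at p0 + p_n + delta_p_n with bilinear interpolation, then apply the weights
        return deform_conv2d(x, offset, self.conv.weight,
                             padding=(self.padding, self.padding))
```

torchvision also provides a ready-made `torchvision.ops.DeformConv2d` module that accepts the predicted offsets as an argument to its `forward` method.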

Deformable convolutional networks excel in scenarios where objects appear at various scales, poses, and viewpoints. The adaptive sampling mechanism allows the network to focus on relevant regions and adjust to the geometric variations in the input. This makes them particularly effective for object detection, where bounding boxes need to accurately capture objects of different shapes and sizes.

5. MobileNets: Efficient convolutional networks for mobile vision applications

The demand for deploying deep neural networks on mobile and embedded devices led to the development of MobileNets. These architectures prioritize efficiency while giving up relatively little accuracy, making them well suited to mobile vision applications where computational resources are constrained.

Depthwise separable convolutions

The key innovation in MobileNets is the use of depthwise separable convolutions, which factorize a standard convolution into two separate operations: depthwise convolution and pointwise convolution. This dramatically reduces computational cost and model size.

A standard convolution with kernel size \(D_K \times D_K\), input channels \(M\), output channels \(N\), and feature map size \(D_F \times D_F\) requires:

$$D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F$$

operations. Depthwise separable convolution splits this into:

- Depthwise convolution: Applies a single filter per input channel

- Pointwise convolution: Uses 1×1 convolutions to combine outputs

The total cost becomes:

$$D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F$$

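Dividing the separable cost by the standard cost gives the ratio:

$$\frac{D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F}{D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F} = \frac{1}{N} + \frac{1}{D_K^2}$$

For a 3×3 kernel, \(1/D_K^2 = 1/9\), and because \(N\) is usually in the hundreds the \(1/N\) term is small, so depthwise separable convolution needs roughly 8 to 9 times less computation than a standard convolution.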
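A quick numeric check of the two cost formulas, assuming a stride-1 layer whose output keeps the same \(D_F \times D_F\) size as its input. The layer sizes (3×3 kernel, 512 to 512 channels, 14×14 feature maps) are illustrative, and the helper names are hypothetical.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a standard D_K x D_K convolution, M -> N channels, D_F x D_F output."""
    return dk * dk * m * n * df * df


def separable_conv_cost(dk, m, n, df):
    """Depthwise (one D_K x D_K filter per input channel) plus pointwise (1x1, M -> N)."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


std = standard_conv_cost(3, 512, 512, 14)
sep = separable_conv_cost(3, 512, 512, 14)
print(f"{std:,} vs {sep:,} -> {std / sep:.2f}x fewer")  # 462,422,016 vs 52,283,392 -> 8.84x fewer
```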

Implementation example

```python
import torch
import torch.nn as nn

class DepthwiseSeparableConv(nn.Module):
    def __init__(self, in_channels, out_channels, stride=1):
        super(DepthwiseSeparableConv, self).__init__()
        # Depthwise convolution: groups=in_channels gives one 3x3 filter per input channel
        self.depthwise = nn.Conv2d(in_channels, in_channels,
                                   kernel_size=3, stride=stride,
                                   padding=1, groups=in_channels, bias=False)
        self.bn1 = nn.BatchNorm2d(in_channels)
        self.relu1 = nn.ReLU(inplace=True)
        # Pointwise convolution: 1x1 convolution mixes information across channels
        self.pointwise = nn.Conv2d(in_channels, out_channels,
                                   kernel_size=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.relu2 = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.depthwise(x)
        x = self.bn1(x)
        x = self.relu1(x)
        x = self.pointwise(x)
        x = self.bn2(x)
        x = self.relu2(x)
        return x

class MobileNetV1(nn.Module):
    def __init__(self, num_classes=1000, width_multiplier=1.0):
        super(MobileNetV1, self).__init__()
        # First standard convolution
        self.conv1 = nn.Conv2d(3, int(32 * width_multiplier),
                               kernel_size=3, stride=2, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(int(32 * width_multiplier))
        self.relu = nn.ReLU(inplace=True)
        # Depthwise separable convolutions
        self.layers = self._make_layers(width_multiplier)
        # Classifier
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(int(1024 * width_multiplier), num_classes)

    def _make_layers(self, alpha):
        # Define layer configurations
        # Format: (out_channels, stride)
        configs = [
            (64, 1), (128, 2), (128, 1), (256, 2), (256, 1),
            (512, 2), (512, 1), (512, 1), (512, 1), (512, 1),
            (512, 1), (1024, 2), (1024, 1)
        ]
        layers = []
        in_channels = int(32 * alpha)
        for out_channels, stride in configs:
            out_channels = int(out_channels * alpha)  # width multiplier thins every layer
            layers.append(DepthwiseSeparableConv(in_channels, out_channels, stride))
            in_channels = out_channels
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.layers(x)
        x = self.avg_pool(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x
```

MobileNets also introduce width and resolution multipliers as hyperparameters, allowing practitioners to trade off between accuracy and efficiency based on their specific requirements. This flexibility makes MobileNets extremely versatile for various deployment scenarios.
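In the MobileNet paper, the width multiplier \(\alpha\) (the `width_multiplier`, or `alpha`, in the code above) thins the input and output channels of every layer (\(M \to \alpha M\), \(N \to \alpha N\)), and the resolution multiplier \(\rho\) shrinks the feature maps (\(D_F \to \rho D_F\)), so the cost of a layer drops roughly by \(\alpha^2\) and \(\rho^2\). The sketch below plugs both into the depthwise separable cost formula from earlier in this section; `mobilenet_layer_cost` and the layer sizes are illustrative assumptions.

```python
def mobilenet_layer_cost(dk, m, n, df, alpha=1.0, rho=1.0):
    """Multiply-adds of one depthwise separable layer with width multiplier alpha
    and resolution multiplier rho:
    D_K*D_K*(alpha*M)*(rho*D_F)^2 + (alpha*M)*(alpha*N)*(rho*D_F)^2."""
    m, n, df = alpha * m, alpha * n, rho * df
    return dk * dk * m * df * df + m * n * df * df


base = mobilenet_layer_cost(3, 512, 512, 14)
thin = mobilenet_layer_cost(3, 512, 512, 14, alpha=0.5)
small = mobilenet_layer_cost(3, 512, 512, 14, rho=0.5)
print(f"alpha=0.5 -> {thin / base:.3f} of baseline cost, rho=0.5 -> {small / base:.3f}")
# alpha=0.5 -> 0.254 of baseline cost, rho=0.5 -> 0.250
```

The width multiplier lands slightly above \(\alpha^2 = 0.25\) because the depthwise term scales only linearly with \(\alpha\) while the dominant pointwise term scales quadratically; the resolution multiplier scales every term by exactly \(\rho^2\).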

6. EfficientNet: Rethinking model scaling for convolutional neural networks

EfficientNet represents a paradigm shift in how we think about scaling CNN models. Rather than arbitrarily scaling depth, width, or resolution, the paper “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks” introduced a principled compound scaling approach that balances all three dimensions simultaneously.

Compound scaling methodology

Traditional scaling approaches typically increase only one dimension—either network depth (number of layers), width (number of channels), or input resolution. However, EfficientNet demonstrated that balanced scaling of all three dimensions yields better performance and efficiency. The compound scaling method uses a compound coefficient \(\phi\) to uniformly scale all dimensions:

$$\text{depth: } d = \alpha^\phi$$ $$\text{width: } w = \beta^\phi$$ $$\text{resolution: } r = \gamma^\phi$$

subject to the constraint:

$$\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2$$

and \(\alpha \geq 1, \beta \geq 1, \gamma \geq 1\)

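This constraint ensures that for any new \(\phi\), the total computational cost increases by approximately \(2^\phi\). The squares appear because FLOPs grow linearly with depth but quadratically with width and with resolution, so scaling by \(d\), \(w\), and \(r\) multiplies FLOPs by \(\alpha^\phi \cdot \beta^{2\phi} \cdot \gamma^{2\phi} = (\alpha \cdot \beta^2 \cdot \gamma^2)^\phi \approx 2^\phi\). The intuition is that if we increase input resolution, we need more layers (depth) to enlarge the receptive field and more channels (width) to capture the finer-grained patterns in the larger image.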
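The arithmetic of compound scaling, sketched with the coefficients the EfficientNet paper reports for its B0 baseline (\(\alpha = 1.2\), \(\beta = 1.1\), \(\gamma = 1.15\), found by a small grid search at \(\phi = 1\)). The helper `compound_scale` is hypothetical, and the multipliers it prints are the idealized ones; they need not match the exact depth, width, and resolution settings of the released B1 to B7 models.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases reported for EfficientNet-B0


def compound_scale(phi: float):
    """Return (depth, width, resolution) multipliers and the approximate FLOPs factor."""
    d, w, r = ALPHA ** phi, BETA ** phi, GAMMA ** phi
    flops = d * w ** 2 * r ** 2  # FLOPs ~ depth * width^2 * resolution^2
    return d, w, r, flops


for phi in range(4):
    d, w, r, flops = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, FLOPs x{flops:.2f}")
```

With these values \(\alpha \cdot \beta^2 \cdot \gamma^2 = 1.2 \times 1.21 \times 1.3225 \approx 1.92\), so each unit increase in \(\phi\) roughly doubles the FLOPs, as the constraint intends.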

Neural architecture search and mobile inverted bottleneck

EfficientNet builds upon the mobile inverted bottleneck (MBConv) block, which originates in MobileNetV2: a 1×1 convolution expands the channels, a cheap depthwise convolution processes them, and a linear 1×1 convolution projects them back down, with a residual connection between the narrow ends. EfficientNet adds squeeze-and-excitation to each block. The base architecture, EfficientNet-B0, was discovered through neural architecture search that optimized for both accuracy and FLOPs.

Here’s an implementation of the MBConv block used in EfficientNet:

```python
import torch
import torch.nn as nn

class MBConvBlock(nn.Module):
    def __init__(self, in_channels, out_channels, expand_ratio,
                 kernel_size, stride, se_ratio=0.25):
        super(MBConvBlock, self).__init__()
        self.stride = stride
        self.use_residual = (stride == 1 and in_channels == out_channels)
        hidden_dim = in_channels * expand_ratio
        self.swish = nn.SiLU()

        # Expansion phase (1x1 conv + BN + Swish); skipped entirely when expand_ratio == 1,
        # so the block input is not passed through an extra activation
        if expand_ratio != 1:
            self.expand = nn.Sequential(
                nn.Conv2d(in_channels, hidden_dim, 1, bias=False),
                nn.BatchNorm2d(hidden_dim),
                nn.SiLU(),
            )
        else:
            self.expand = nn.Identity()

        # Depthwise convolution
        self.depthwise = nn.Conv2d(hidden_dim, hidden_dim, kernel_size,
                                   stride=stride, padding=kernel_size // 2,
                                   groups=hidden_dim, bias=False)
        self.bn2 = nn.BatchNorm2d(hidden_dim)

        # Squeeze-and-Excitation (bottleneck width based on the block's input channels)
        se_channels = max(1, int(in_channels * se_ratio))
        self.se_reduce = nn.Conv2d(hidden_dim, se_channels, 1)
        self.se_expand = nn.Conv2d(se_channels, hidden_dim, 1)

        # Projection phase (linear: no activation after BN)
        self.project = nn.Conv2d(hidden_dim, out_channels, 1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channels)

    def forward(self, x):
        identity = x

        # Expansion (identity when expand_ratio == 1)
        out = self.expand(x)

        # Depthwise
        out = self.depthwise(out)
        out = self.bn2(out)
        out = self.swish(out)

        # Squeeze-and-Excitation: global average pool, bottleneck, sigmoid channel gate
        se_out = torch.mean(out, dim=[2, 3], keepdim=True)
        se_out = self.swish(self.se_reduce(se_out))
        se_out = torch.sigmoid(self.se_expand(se_out))
        out = out * se_out

        # Projection
        out = self.project(out)
        out = self.bn3(out)

        # Residual connection
        if self.use_residual:
            out = out + identity

        return out
```

Performance and scalability

At the time of its publication (2019), EfficientNet achieved state-of-the-art ImageNet accuracy with significantly fewer parameters and FLOPs than previous architectures. EfficientNet-B0 uses 5.3M parameters, while EfficientNet-B7 scales up to 66M parameters. Compound scaling is designed to keep each variant efficient at its respective scale instead of spending extra capacity on a single dimension.

The architecture family demonstrates that systematic scaling is more effective than arbitrary increases in model capacity. This insight has influenced subsequent neural network architecture designs and established new best practices for model development.

7. Practical considerations and choosing the right architecture

Choosing the Right Architecture

Selecting the appropriate neural network architecture depends on your specific requirements, constraints, and deployment environment. Each architecture we’ve discussed offers unique advantages for different scenarios.

High-Performance Architectures

For applications requiring maximum accuracy with ample computational resources, ResNet and DenseNet remain excellent choices. ResNet’s deep variants (ResNet-50, ResNet-101, ResNet-152) provide exceptional performance on challenging computer vision tasks. DenseNet offers similar accuracy with better parameter efficiency due to its dense connectivity pattern.

Lightweight and Efficient Models

When deployment constraints are critical, such as in mobile applications or on edge devices, MobileNets and EfficientNet are the go-to options. MobileNets excel in scenarios where minimal latency and small model size are paramount. EfficientNet often offers a better accuracy-efficiency trade-off when you can afford somewhat more computation than MobileNets but still need practical deployment.

Handling Complex Transformations

For tasks involving geometric transformations, significant scale variations, or when objects appear in unusual poses, deformable convolutional networks offer unique capabilities. Their adaptive sampling mechanism provides flexibility that traditional convolutions cannot match, making them particularly valuable for object detection and instance segmentation tasks.

Here’s a practical comparison of implementing these architectures:

```python
import torch
import torch.nn as nn

# Example: building a hybrid architecture.
# Assumes ResidualBlock and MBConvBlock from the earlier sections are already defined.
class HybridVisionModel(nn.Module):
    def __init__(self, num_classes=1000, use_efficient=True):
        super(HybridVisionModel, self).__init__()
        if use_efficient:
            # Start with efficient stem
            self.stem = nn.Sequential(
                nn.Conv2d(3, 32, 3, stride=2, padding=1, bias=False),
                nn.BatchNorm2d(32),
                nn.SiLU()
            )
            # Use MBConv blocks
            self.features = nn.Sequential(
                MBConvBlock(32, 16, expand_ratio=1, kernel_size=3, stride=1),
                MBConvBlock(16, 24, expand_ratio=6, kernel_size=3, stride=2),
                MBConvBlock(24, 40, expand_ratio=6, kernel_size=5, stride=2),
            )
        else:
            # Use a ResNet-style stem and residual blocks
            self.stem = nn.Sequential(
                nn.Conv2d(3, 64, 7, stride=2, padding=3, bias=False),
                nn.BatchNorm2d(64),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(3, stride=2, padding=1)
            )
            self.features = nn.Sequential(
                ResidualBlock(64, 64),
                ResidualBlock(64, 128, stride=2),
                ResidualBlock(128, 256, stride=2),
            )

        # Classifier input matches the last block's output channels (40 or 256)
        self.classifier = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
            nn.Dropout(0.2),
            nn.Linear(40 if use_efficient else 256, num_classes)
        )

    def forward(self, x):
        x = self.stem(x)
        x = self.features(x)
        x = self.classifier(x)
        return x

# Instantiate different variants
efficient_model = HybridVisionModel(use_efficient=True)
resnet_model = HybridVisionModel(use_efficient=False)

# Compare parameter counts
efficient_params = sum(p.numel() for p in efficient_model.parameters())
resnet_params = sum(p.numel() for p in resnet_model.parameters())
print(f"Efficient variant: {efficient_params:,} parameters")
print(f"ResNet variant: {resnet_params:,} parameters")
```

When optimizing your model, consider techniques like knowledge distillation, which trains a smaller student model to mimic the outputs of a larger teacher, or pruning, which removes low-importance weights or connections. Both reuse what an existing model has already learned, which can yield a more efficient model at lower cost than designing and training a new architecture from scratch.
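A minimal sketch of both techniques in PyTorch. The distillation loss follows the widely used temperature-softened formulation of Hinton et al. (2015): a KL-divergence term between softened teacher and student outputs, scaled by \(T^2\), blended with ordinary cross-entropy on the true labels. The temperature `T=4.0` and weight `alpha=0.5` are illustrative defaults rather than recommendations, and `distillation_loss` is a hypothetical helper. The pruning part uses PyTorch's built-in `torch.nn.utils.prune` utilities for unstructured magnitude (L1) pruning.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.nn.utils.prune as prune


def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    """Knowledge distillation loss (T and alpha are illustrative defaults).

    Soft term: KL divergence between temperature-softened teacher and student
    distributions, scaled by T^2 to keep gradient magnitudes comparable.
    Hard term: ordinary cross-entropy against the true labels.
    """
    soft = F.kl_div(
        F.log_softmax(student_logits / T, dim=1),
        F.softmax(teacher_logits / T, dim=1),
        reduction="batchmean",
    ) * (T * T)
    hard = F.cross_entropy(student_logits, labels)
    return alpha * soft + (1.0 - alpha) * hard


# Magnitude pruning: zero the 30% of weights with the smallest absolute value
layer = nn.Linear(100, 10)
prune.l1_unstructured(layer, name="weight", amount=0.3)
prune.remove(layer, "weight")  # make the pruning permanent (drops the mask)
print((layer.weight == 0).float().mean().item())  # fraction of zeroed weights, about 0.3
```

In practice, pruning is usually followed by some fine-tuning to recover accuracy, and unstructured zeros only reduce latency on hardware or runtimes that exploit sparsity.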
