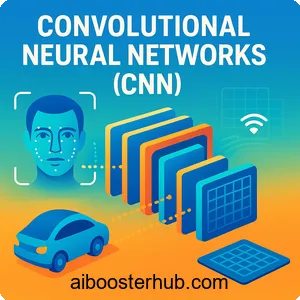

Convolutional Neural Networks (CNN): Architecture & Applications

In the realm of artificial intelligence and deep learning, convolutional neural networks have revolutionized how machines perceive and understand visual information. From facial recognition systems to autonomous vehicles, CNN technology powers some of the most transformative applications of our time. This comprehensive guide explores the architecture, mechanics, and real-world applications of convolutional neural networks, providing you with a solid foundation in this essential deep learning technique.


1. What is a convolutional neural network?

A convolutional neural network (CNN or ConvNet) is a specialized type of artificial neural network designed primarily for processing structured grid data, particularly images. Unlike traditional neural networks that treat input data as flat vectors, CNN architectures preserve the spatial relationship between pixels, making them exceptionally effective for visual recognition tasks.

The key innovation of convolutional neural networks lies in their ability to automatically learn hierarchical features from raw pixel data. While a traditional neural network might struggle to identify a cat in different positions, lighting conditions, or orientations, a CNN can learn to recognize the fundamental features—edges, textures, shapes—that define a cat regardless of these variations.

Why CNNs are different

Traditional fully connected neural networks face significant challenges when working with images. A modest 224×224 pixel color image contains 150,528 input values (224 × 224 × 3 color channels). Connecting this to even a small hidden layer of 1,000 neurons would require over 150 million parameters, making the network computationally prohibitive and prone to overfitting.

CNNs address this problem through three key principles:

Local connectivity: Each neuron connects only to a small region of the input, called a receptive field, rather than to all pixels. This dramatically reduces the number of parameters while capturing local patterns.

Parameter sharing: The same set of weights (called a filter or kernel) is applied across the entire image. This means the network learns to detect a feature—like an edge or corner—once and can recognize it anywhere in the image.

Spatial hierarchy: CNNs stack multiple layers, allowing them to learn increasingly complex features. Early layers detect simple patterns like edges, while deeper layers combine these to recognize more complex structures like eyes, faces, or entire objects.
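To make the savings from local connectivity and parameter sharing concrete, the following sketch compares the parameter count of a fully connected layer with that of a convolutional layer on the same 224×224×3 input. The helper names and the choice of 64 filters of size 3×3 are illustrative assumptions, not values taken from a specific architecture.

```python
def dense_params(num_inputs, num_units):
    """Weights plus one bias per unit in a fully connected layer."""
    return num_inputs * num_units + num_units


def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per filter in a convolutional layer.

    The count does not depend on the image height or width, because the
    same filters are reused at every spatial position.
    """
    return kernel_size * kernel_size * in_channels * out_channels + out_channels


num_inputs = 224 * 224 * 3  # 150,528 input values
fc_count = dense_params(num_inputs, 1000)
conv_count = conv_params(in_channels=3, out_channels=64, kernel_size=3)

print(f"Fully connected layer: {fc_count:,} parameters")
print(f"3x3 conv layer, 64 filters: {conv_count:,} parameters")
```

Under these assumptions the convolutional layer needs fewer than two thousand parameters, and that number stays the same if the input image grows.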

2. Core components of CNN architecture

Understanding the building blocks of a CNN architecture is essential to grasp how these networks process visual information. A typical CNN consists of several layer types, each serving a specific purpose in the feature extraction and classification pipeline.

Convolutional layers

The convolutional layer is the heart of a CNN. This layer applies a set of learnable filters (also called kernels) to the input. Each filter slides across the input image, performing element-wise multiplication and summing the results to produce a feature map.

Mathematically, the convolution operation can be expressed as:

$$S(i,j) = (I * K)(i,j) = \sum_m \sum_n I(m,n) \cdot K(i-m, j-n)$$

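Where \(I\) is the input image, \(K\) is the kernel, and \(S\) is the output feature map. In practice, most deep learning libraries (and the NumPy example that follows) compute cross-correlation, which slides the kernel over the input without flipping it; because the kernel weights are learned, this does not change what the network can represent.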

Here’s a simple example of implementing a convolutional layer in Python using NumPy:

```python
import numpy as np


def convolve2d(image, kernel, stride=1, padding=0):
    """
    Performs 2D convolution operation

    Args:
        image: Input image (H x W)
        kernel: Convolution kernel (K x K)
        stride: Step size for sliding the kernel
        padding: Number of pixels to pad around the image
    """
    # Add padding to the image
    if padding > 0:
        image = np.pad(image, padding, mode='constant')

    # Get dimensions
    image_h, image_w = image.shape
    kernel_h, kernel_w = kernel.shape

    # Calculate output dimensions
    out_h = (image_h - kernel_h) // stride + 1
    out_w = (image_w - kernel_w) // stride + 1

    # Initialize output feature map
    output = np.zeros((out_h, out_w))

    # Perform convolution
    for i in range(out_h):
        for j in range(out_w):
            # Extract region
            h_start = i * stride
            h_end = h_start + kernel_h
            w_start = j * stride
            w_end = w_start + kernel_w
            region = image[h_start:h_end, w_start:w_end]

            # Element-wise multiplication and sum
            output[i, j] = np.sum(region * kernel)

    return output


# Example usage
image = np.random.rand(28, 28)
edge_detector = np.array([[-1, -1, -1],
                          [-1,  8, -1],
                          [-1, -1, -1]])

feature_map = convolve2d(image, edge_detector, stride=1, padding=1)
print(f"Input shape: {image.shape}")
print(f"Output shape: {feature_map.shape}")
```
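The output dimensions computed inside the example above follow a general rule that is handy when designing a network by hand. The small helper below is a sketch of that rule; the function name `conv_output_size` is an illustrative choice, and the formula assumes the same padding on both sides of the axis.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# The example above: 28x28 input, 3x3 kernel, stride 1, padding 1
same_size = conv_output_size(28, kernel=3, stride=1, padding=1)

# Without padding the feature map shrinks by kernel - 1
valid_size = conv_output_size(28, kernel=3)

# A stride of 2 roughly halves the resolution
strided_size = conv_output_size(28, kernel=3, stride=2, padding=1)

print(same_size, valid_size, strided_size)
```

The same helper applies to pooling layers, where the pooling window plays the role of the kernel.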

Activation functions

After each convolution operation, an activation function introduces non-linearity into the network. Without non-linear activation, stacking multiple layers would be equivalent to a single linear transformation, severely limiting the network’s representational power.
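The claim that stacked linear layers collapse into one can be checked numerically. The sketch below uses two small hand-picked weight matrices (arbitrary values chosen for illustration) and shows that the collapse holds without an activation but breaks once a ReLU is placed between the layers.

```python
import numpy as np

# Two "layers" with no activation function in between
W1 = np.array([[1.0, -1.0],
               [-1.0, 1.0]])
W2 = np.array([[1.0, 1.0]])
x = np.array([1.0, 2.0])

two_layers = W2 @ (W1 @ x)

# The same mapping expressed as a single linear layer
W_combined = W2 @ W1
one_layer = W_combined @ x
print(np.allclose(two_layers, one_layer))  # True: stacking added nothing

# With a ReLU in between, the single matrix no longer reproduces the output
with_relu = W2 @ np.maximum(0, W1 @ x)
print(np.allclose(with_relu, one_layer))   # False
```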

The most commonly used activation function in CNN models is the Rectified Linear Unit (ReLU):

$$f(x) = \max(0, x)$$

ReLU has several advantages: it’s computationally efficient, helps mitigate the vanishing gradient problem, and promotes sparse activation. Here’s a simple implementation:

```python
def relu(x):
    """ReLU activation function"""
    return np.maximum(0, x)


def leaky_relu(x, alpha=0.01):
    """Leaky ReLU to address dying ReLU problem"""
    return np.where(x > 0, x, alpha * x)


# Apply ReLU to feature map
activated_features = relu(feature_map)
```

Pooling layers

Pooling layers reduce the spatial dimensions of feature maps, decreasing computational complexity and helping the network become invariant to small translations in the input. The most common types are max pooling and average pooling.

Max pooling selects the maximum value from each region:

```python
def max_pool2d(input_map, pool_size=2, stride=2):
    """
    Performs 2D max pooling

    Args:
        input_map: Input feature map (H x W)
        pool_size: Size of pooling window
        stride: Step size for sliding the window
    """
    h, w = input_map.shape
    out_h = (h - pool_size) // stride + 1
    out_w = (w - pool_size) // stride + 1

    output = np.zeros((out_h, out_w))

    for i in range(out_h):
        for j in range(out_w):
            h_start = i * stride
            h_end = h_start + pool_size
            w_start = j * stride
            w_end = w_start + pool_size

            region = input_map[h_start:h_end, w_start:w_end]
            output[i, j] = np.max(region)

    return output


# Example usage
pooled_features = max_pool2d(activated_features, pool_size=2, stride=2)
print(f"After pooling shape: {pooled_features.shape}")
```
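Average pooling, the other common variant mentioned above, differs only in how each window is summarized. The following is a minimal sketch that mirrors the max pooling example; the function name `avg_pool2d` and the small demo array are illustrative.

```python
import numpy as np


def avg_pool2d(input_map, pool_size=2, stride=2):
    """Average pooling over a 2D feature map (H x W)."""
    h, w = input_map.shape
    out_h = (h - pool_size) // stride + 1
    out_w = (w - pool_size) // stride + 1
    output = np.zeros((out_h, out_w))

    for i in range(out_h):
        for j in range(out_w):
            h_start, w_start = i * stride, j * stride
            region = input_map[h_start:h_start + pool_size,
                               w_start:w_start + pool_size]
            # Summarize the window by its mean instead of its maximum
            output[i, j] = region.mean()

    return output


demo = np.array([[1.0, 2.0, 5.0, 6.0],
                 [3.0, 4.0, 7.0, 8.0],
                 [0.0, 0.0, 1.0, 1.0],
                 [0.0, 4.0, 1.0, 1.0]])
averaged = avg_pool2d(demo)
print(averaged)
```

On the bottom-left window, max pooling would return 4 while average pooling returns 1, which illustrates how averaging smooths out isolated strong activations.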

Fully connected layers

After several convolutional and pooling layers extract high-level features, fully connected layers combine these features for final classification. These layers work like traditional neural networks, connecting every neuron from one layer to every neuron in the next layer.

The output of the final fully connected layer typically passes through a softmax function for multi-class classification:

$$\text{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^K e^{z_j}}$$

Where \(K\) is the number of classes.
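A direct implementation of the softmax formula can overflow for large scores, so implementations commonly subtract the maximum score first, which leaves the result unchanged. The sketch below shows that variant in plain Python; the input scores are made up for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    shift = max(logits)  # subtracting the max does not change the result
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(sum(probs))

# Large scores would overflow math.exp without the shift
print(softmax([1000.0, 1000.0]))
```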

3. Famous CNN architectures

The evolution of CNN architecture has been marked by several landmark models that have progressively pushed the boundaries of what’s possible in computer vision. Understanding these architectures provides insight into design principles that make convolutional neural networks effective.

LeNet

Developed by Yann LeCun and his colleagues, LeNet was one of the earliest successful applications of convolutional neural networks. Originally designed for handwritten digit recognition, LeNet-5 consists of seven layers (not counting input):

- Two sets of convolutional and pooling layers

- Three fully connected layers, the last of which is the output layer with 10 neurons (for digits 0-9)

LeNet demonstrated that CNNs could learn useful feature hierarchies directly from raw pixels, setting the foundation for modern deep learning with CNNs. The PyTorch version below follows the LeNet-5 layer layout but is a modernized variant: it uses ReLU activations, and it accepts 28×28 inputs by padding the first convolution, whereas the original network took 32×32 inputs.

```python
import torch
import torch.nn as nn


class LeNet5(nn.Module):
    """LeNet-5 architecture implementation"""

    def __init__(self, num_classes=10):
        super(LeNet5, self).__init__()
        self.conv1 = nn.Conv2d(1, 6, kernel_size=5, stride=1, padding=2)
        self.pool1 = nn.AvgPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(6, 16, kernel_size=5, stride=1)
        self.pool2 = nn.AvgPool2d(kernel_size=2, stride=2)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, num_classes)

    def forward(self, x):
        x = torch.relu(self.conv1(x))
        x = self.pool1(x)
        x = torch.relu(self.conv2(x))
        x = self.pool2(x)
        x = x.view(x.size(0), -1)  # Flatten
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        x = self.fc3(x)
        return x


# Create model instance
model = LeNet5(num_classes=10)
print(model)
```

AlexNet

AlexNet marked a breakthrough moment for CNNs, winning the 2012 ImageNet competition (ILSVRC) by a significant margin. This architecture combined several techniques that became standard:

- Deeper architecture: Eight learned layers (five convolutional and three fully connected)

- ReLU activation: Used ReLU instead of tanh, enabling faster training

- Dropout: Applied dropout regularization in the fully connected layers to reduce overfitting

- Data augmentation: Extensively used data augmentation to increase the effective dataset size

- GPU training: Leveraged GPU computation for faster training

The architecture has roughly 60 million parameters and demonstrated that deeper networks could capture more complex patterns.

VGG

The VGG architecture, developed by the Visual Geometry Group at Oxford, emphasized network depth while using very small (3×3) convolutional filters. VGG-16 and VGG-19 (with 16 and 19 layers respectively) showed that increasing depth with small filters improved performance.

Key characteristics of VGG include:

- Consistent use of 3×3 convolutions with stride 1

- Max pooling with 2×2 windows

- Doubling of channels after each pooling layer, from 64 up to a maximum of 512

- Simple and homogeneous architecture

```python
import torch.nn as nn


class VGGBlock(nn.Module):
    """VGG building block with repeated 3x3 convolutions"""

    def __init__(self, in_channels, out_channels, num_convs):
        super(VGGBlock, self).__init__()
        layers = []
        for i in range(num_convs):
            layers.append(nn.Conv2d(
                in_channels if i == 0 else out_channels,
                out_channels,
                kernel_size=3,
                padding=1
            ))
            layers.append(nn.ReLU(inplace=True))
        # One 2x2 max pooling at the end of the block halves height and width
        layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
        self.block = nn.Sequential(*layers)

    def forward(self, x):
        return self.block(x)


# Example VGG-like architecture (expects 224x224 RGB input)
class SimpleVGG(nn.Module):
    def __init__(self, num_classes=1000):
        super(SimpleVGG, self).__init__()
        self.features = nn.Sequential(
            VGGBlock(3, 64, 2),     # Block 1: 224 -> 112
            VGGBlock(64, 128, 2),   # Block 2: 112 -> 56
            VGGBlock(128, 256, 3),  # Block 3: 56 -> 28
            VGGBlock(256, 512, 3),  # Block 4: 28 -> 14
            VGGBlock(512, 512, 3),  # Block 5: 14 -> 7 (needed for the 512 * 7 * 7 classifier input)
        )
        self.classifier = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(0.5),
            nn.Linear(4096, num_classes)
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x
```
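One way to see why VGG favors stacks of 3×3 filters is to compare them with a single larger filter covering the same receptive field. The sketch below does that arithmetic, assuming stride-1 convolutions with equal input and output channel counts and ignoring biases; the channel width of 256 is an arbitrary illustrative value.

```python
def stacked_receptive_field(num_layers, kernel_size=3):
    """Receptive field of stacked stride-1 convolutions with the same kernel size."""
    return 1 + num_layers * (kernel_size - 1)


def conv_weights(kernel_size, channels):
    """Weight count (biases ignored) for a conv layer with equal in/out channels."""
    return kernel_size * kernel_size * channels * channels


channels = 256  # illustrative channel width
for num_layers in (2, 3):
    field = stacked_receptive_field(num_layers)
    stacked = num_layers * conv_weights(3, channels)
    single = conv_weights(field, channels)
    print(f"{num_layers} x 3x3 convs: receptive field {field}x{field}, "
          f"{stacked:,} weights vs {single:,} for one {field}x{field} conv")
```

The stacked version also applies a non-linearity after every 3×3 layer, so it gains expressiveness while using fewer weights.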

4. How CNNs learn: training process

Understanding how CNNs learn from data is crucial to applying them effectively in deep learning projects. The training process involves forward propagation, loss calculation, and backpropagation.

Forward propagation

During forward propagation, input data flows through the network layer by layer. Each convolutional layer applies its filters, activation functions transform the outputs, and pooling layers reduce dimensions. Finally, fully connected layers produce class predictions.

Loss calculation

The network’s predictions are compared to true labels using a loss function. For multi-class classification, cross-entropy loss is standard:

$$L = -\sum_{i=1}^C y_i \log(\hat{y}_i)$$

Where \(y_i\) is the true label (one-hot encoded) and \(\hat{y}_i\) is the predicted probability for class \(i\).
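The formula can be evaluated by hand for a single example. The sketch below does so in plain Python with made-up probability vectors; the small `eps` guard against `log(0)` is an implementation assumption rather than part of the formula.

```python
import math


def cross_entropy(true_one_hot, predicted_probs, eps=1e-12):
    """Cross-entropy between a one-hot label and predicted class probabilities."""
    return -sum(y * math.log(max(p, eps))
                for y, p in zip(true_one_hot, predicted_probs))


label = [0, 1, 0]  # the true class is index 1
confident = cross_entropy(label, [0.05, 0.90, 0.05])
unsure = cross_entropy(label, [0.30, 0.40, 0.30])
wrong = cross_entropy(label, [0.90, 0.05, 0.05])
print(f"confident: {confident:.4f}, unsure: {unsure:.4f}, wrong: {wrong:.4f}")
```

With a one-hot label only the term for the true class survives, so the loss reduces to the negative log of the probability assigned to that class. Note that PyTorch's `nn.CrossEntropyLoss` expects raw scores (logits) and applies the log-softmax itself.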

Backpropagation and optimization

Backpropagation computes gradients of the loss with respect to each parameter by applying the chain rule. These gradients indicate how to adjust parameters to minimize loss.
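As a minimal illustration of the chain rule at work, the sketch below uses a one-weight linear model with squared error instead of a full CNN (a deliberate simplification). It checks the analytic gradient against a finite-difference estimate and then takes a few plain gradient descent steps; all values are arbitrary.

```python
def loss(w, b, x, y):
    """Squared error of a one-weight linear model."""
    return (w * x + b - y) ** 2


def gradients(w, b, x, y):
    """Chain rule: dL/dw = dL/dpred * dpred/dw, and likewise for b."""
    pred = w * x + b
    dloss_dpred = 2 * (pred - y)
    return dloss_dpred * x, dloss_dpred * 1.0


x, y = 2.0, 3.0
w, b = 0.5, 0.0

# Compare the analytic gradient with a finite-difference estimate
grad_w, grad_b = gradients(w, b, x, y)
h = 1e-6
numeric_w = (loss(w + h, b, x, y) - loss(w - h, b, x, y)) / (2 * h)
print(f"analytic dL/dw = {grad_w:.4f}, numeric dL/dw = {numeric_w:.4f}")

# A few steps of plain gradient descent reduce the loss
learning_rate = 0.05
initial_loss = loss(w, b, x, y)
for _ in range(20):
    grad_w, grad_b = gradients(w, b, x, y)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
final_loss = loss(w, b, x, y)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

Frameworks such as PyTorch automate exactly this bookkeeping for every parameter in the network when `loss.backward()` is called.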

Here’s a complete training example using PyTorch:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms


# Define a simple CNN model
class SimpleCNN(nn.Module):
    def __init__(self):
        super(SimpleCNN, self).__init__()
        self.conv_layers = nn.Sequential(
            nn.Conv2d(1, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
        )
        self.fc_layers = nn.Sequential(
            nn.Flatten(),
            nn.Linear(64 * 7 * 7, 128),
            nn.ReLU(),
            nn.Dropout(0.5),
            nn.Linear(128, 10)
        )

    def forward(self, x):
        x = self.conv_layers(x)
        x = self.fc_layers(x)
        return x


# Training function
def train_cnn(model, train_loader, criterion, optimizer, device, num_epochs=10):
    """
    Train the CNN model

    Args:
        model: CNN model
        train_loader: DataLoader for training data
        criterion: Loss function
        optimizer: Optimization algorithm
        device: CPU or GPU
        num_epochs: Number of training epochs
    """
    model.train()

    for epoch in range(num_epochs):
        running_loss = 0.0
        correct = 0
        total = 0

        for batch_idx, (inputs, targets) in enumerate(train_loader):
            inputs, targets = inputs.to(device), targets.to(device)

            # Forward pass
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, targets)

            # Backward pass and optimization
            loss.backward()
            optimizer.step()

            # Statistics
            running_loss += loss.item()
            _, predicted = outputs.max(1)
            total += targets.size(0)
            correct += predicted.eq(targets).sum().item()

            if (batch_idx + 1) % 100 == 0:
                print(f'Epoch [{epoch+1}/{num_epochs}], '
                      f'Step [{batch_idx+1}/{len(train_loader)}], '
                      f'Loss: {running_loss/100:.4f}, '
                      f'Accuracy: {100.*correct/total:.2f}%')
                running_loss = 0.0


# Example setup
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Prepare data
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,))
])

train_dataset = datasets.MNIST(root='./data', train=True,
                               download=True, transform=transform)
train_loader = DataLoader(train_dataset, batch_size=128, shuffle=True)

# Initialize model, loss, and optimizer
model = SimpleCNN().to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

# Train the model
# train_cnn(model, train_loader, criterion, optimizer, device, num_epochs=10)
```

5. Applications of convolutional neural networks

CNNs have transformed numerous fields through their ability to understand visual information. Let’s explore the diverse applications where CNN models excel.

Image classification

The most fundamental application is categorizing images into predefined classes. From medical diagnosis (identifying diseases in X-rays or MRI scans) to quality control in manufacturing (detecting defects in products), image classification powered by CNNs has become ubiquitous.

A practical example is building a custom image classifier:

```python
import torch
import torch.nn as nn
from torchvision import models, transforms
from PIL import Image


class ImageClassifier:
    """Custom image classifier using pre-trained CNN"""

    def __init__(self, num_classes, model_name='resnet18'):
        # Load pre-trained model (the weights= argument requires torchvision >= 0.13;
        # it replaces the deprecated pretrained=True)
        if model_name == 'resnet18':
            self.model = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)
            num_features = self.model.fc.in_features
            self.model.fc = nn.Linear(num_features, num_classes)
        else:
            # Fail early instead of leaving self.model undefined
            raise ValueError(f"Unsupported model_name: {model_name}")

        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        self.model = self.model.to(self.device)

        # Define preprocessing
        self.transform = transforms.Compose([
            transforms.Resize(256),
            transforms.CenterCrop(224),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])
        ])

    def predict(self, image_path, class_names):
        """
        Predict class for a single image

        Args:
            image_path: Path to the image
            class_names: List of class names

        Returns:
            Predicted class and confidence
        """
        self.model.eval()

        # Load and preprocess image
        image = Image.open(image_path).convert('RGB')
        image_tensor = self.transform(image).unsqueeze(0).to(self.device)

        # Make prediction
        with torch.no_grad():
            outputs = self.model(image_tensor)
            probabilities = torch.softmax(outputs, dim=1)
            confidence, predicted_idx = torch.max(probabilities, 1)

        predicted_class = class_names[predicted_idx.item()]
        confidence_score = confidence.item()

        return predicted_class, confidence_score


# Example usage (the new final layer must be trained before predictions are meaningful)
# classifier = ImageClassifier(num_classes=5)
# class_names = ['cat', 'dog', 'bird', 'fish', 'horse']
# prediction, confidence = classifier.predict('image.jpg', class_names)
# print(f"Predicted: {prediction} (Confidence: {confidence:.2%})")
```

Object detection

Going beyond classification, object detection identifies and locates multiple objects within an image. Applications include autonomous driving (detecting pedestrians, vehicles, and traffic signs), surveillance systems, and retail analytics.

Modern object detection architectures like YOLO (You Only Look Once) and Faster R-CNN use CNN backbones to extract features, then add specialized layers for bounding box prediction and classification.

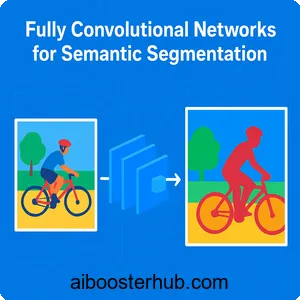

Semantic segmentation

Semantic segmentation assigns a class label to every pixel in an image. This is crucial for applications like medical imaging (segmenting tumors or organs), autonomous driving (understanding road scenes), and satellite imagery analysis (identifying land use patterns).
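At the output of a segmentation network, the per-pixel labeling step usually amounts to picking the highest-scoring class at every position. The sketch below illustrates only that final step, using a hand-written score array in place of real network output; the class names in the comments are hypothetical.

```python
import numpy as np

# Hypothetical per-pixel class scores from a segmentation network:
# shape (num_classes, height, width), here 3 classes on a 2x3 image
scores = np.array([
    [[2.0, 0.1, 0.3], [0.2, 0.1, 0.0]],  # class 0 (e.g. background)
    [[0.5, 1.5, 0.2], [0.1, 2.2, 0.4]],  # class 1 (e.g. road)
    [[0.1, 0.3, 1.9], [1.7, 0.3, 3.0]],  # class 2 (e.g. vehicle)
])

# Each pixel receives the label of its highest-scoring class
label_map = scores.argmax(axis=0)
print(label_map)
```

The result has the same height and width as the image, with one integer class label per pixel.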

Facial recognition

CNN technology powers facial recognition systems used in security, authentication, and photo organization. These systems learn to encode facial features into compact representations that can be compared for identity verification.
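Comparing two face representations often comes down to a similarity measure between embedding vectors. The sketch below uses cosine similarity with a fixed threshold as one plausible comparison rule; the embeddings, their dimensionality, and the threshold of 0.8 are all made up for illustration and do not come from a real system.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def same_identity(embedding_a, embedding_b, threshold=0.8):
    """Hypothetical verification rule: similar enough embeddings match."""
    return cosine_similarity(embedding_a, embedding_b) >= threshold


# Made-up 4-dimensional embeddings; real systems use far more dimensions
anchor = [0.9, 0.1, 0.3, 0.2]
same_person = [0.8, 0.2, 0.35, 0.15]
other_person = [0.1, 0.9, 0.2, 0.7]

print(same_identity(anchor, same_person))   # True
print(same_identity(anchor, other_person))  # False
```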

Natural language processing

While originally designed for images, CNNs have found applications in text processing. They can capture local patterns in text sequences, making them useful for tasks like sentiment analysis, text classification, and even machine translation when combined with other architectures.

```python
class TextCNN(nn.Module):
    """CNN for text classification"""

    def __init__(self, vocab_size, embed_dim, num_classes, num_filters=100):
        super(TextCNN, self).__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)

        # Multiple kernel sizes to capture different n-grams
        self.conv1 = nn.Conv1d(embed_dim, num_filters, kernel_size=3)
        self.conv2 = nn.Conv1d(embed_dim, num_filters, kernel_size=4)
        self.conv3 = nn.Conv1d(embed_dim, num_filters, kernel_size=5)

        self.dropout = nn.Dropout(0.5)
        self.fc = nn.Linear(num_filters * 3, num_classes)

    def forward(self, x):
        # x shape: (batch_size, seq_length)
        embedded = self.embedding(x)  # (batch_size, seq_length, embed_dim)
        embedded = embedded.permute(0, 2, 1)  # (batch_size, embed_dim, seq_length)

        # Apply convolutions and max pooling
        conv1_out = torch.relu(self.conv1(embedded))
        conv2_out = torch.relu(self.conv2(embedded))
        conv3_out = torch.relu(self.conv3(embedded))

        pool1 = torch.max(conv1_out, dim=2)[0]
        pool2 = torch.max(conv2_out, dim=2)[0]
        pool3 = torch.max(conv3_out, dim=2)[0]

        # Concatenate pooled features
        concatenated = torch.cat([pool1, pool2, pool3], dim=1)
        concatenated = self.dropout(concatenated)

        output = self.fc(concatenated)
        return output
```

6. Advanced techniques and best practices

Mastering CNN development requires understanding advanced techniques that improve performance and training efficiency.

Transfer learning

Transfer learning takes CNN models pre-trained on large datasets (like ImageNet) and fine-tunes them for specific tasks. This approach dramatically reduces training time and data requirements.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import models


def create_transfer_learning_model(num_classes, freeze_features=True):
    """
    Create a transfer learning model using pre-trained ResNet

    Args:
        num_classes: Number of target classes
        freeze_features: Whether to freeze pre-trained layers

    Returns:
        Model ready for fine-tuning
    """
    # Load pre-trained model (the weights= argument requires torchvision >= 0.13;
    # it replaces the deprecated pretrained=True)
    model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)

    # Freeze convolutional layers if specified
    if freeze_features:
        for param in model.parameters():
            param.requires_grad = False

    # Replace final layer (newly created layers are trainable by default)
    num_features = model.fc.in_features
    model.fc = nn.Sequential(
        nn.Linear(num_features, 512),
        nn.ReLU(),
        nn.Dropout(0.3),
        nn.Linear(512, num_classes)
    )

    return model


# Fine-tuning strategy
def fine_tune_model(model, train_loader, val_loader, num_epochs=20):
    """Two-stage fine-tuning approach"""
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model = model.to(device)

    # Stage 1: Train only the new layers
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.fc.parameters(), lr=0.001)

    print("Stage 1: Training new layers...")
    # train_cnn(model, train_loader, criterion, optimizer, device, num_epochs=10)

    # Stage 2: Unfreeze all layers and fine-tune with lower learning rate
    for param in model.parameters():
        param.requires_grad = True

    optimizer = optim.Adam(model.parameters(), lr=0.0001)

    print("Stage 2: Fine-tuning entire model...")
    # train_cnn(model, train_loader, criterion, optimizer, device, num_epochs=10)

    return model
```

Data augmentation

Data augmentation artificially expands the training dataset by applying transformations like rotation, flipping, scaling, and color adjustments. This helps the model generalize better and reduces overfitting.

```python
# Comprehensive data augmentation pipeline
train_transform = transforms.Compose([
    transforms.RandomResizedCrop(224),
    transforms.RandomHorizontalFlip(),
    transforms.RandomRotation(15),
    transforms.ColorJitter(brightness=0.2, contrast=0.2,
                           saturation=0.2, hue=0.1),
    transforms.RandomAffine(degrees=0, translate=(0.1, 0.1)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])

# Validation transform (no augmentation)
val_transform = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])
```

Batch normalization

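Batch normalization normalizes layer inputs across each mini-batch, which stabilizes and accelerates training and allows higher learning rates. It was originally motivated as a way to reduce internal covariate shift.

$$\hat{x} = \frac{x - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}$$

Where \(\mu_B\) and \(\sigma_B^2\) are the mean and variance of the mini-batch, and \(\epsilon\) is a small constant for numerical stability. The normalized value is then scaled and shifted by learnable parameters: \(y = \gamma \hat{x} + \beta\).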
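The normalization formula translates almost line for line into NumPy. The sketch below covers the training-time computation only; `gamma` and `beta` stand for the learnable scale and shift that batch normalization layers apply after normalizing (left at 1 and 0 here), and the running statistics used at inference time are omitted. The sample batch is made up.

```python
import numpy as np


def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature (column) over the mini-batch (rows)."""
    mu = x.mean(axis=0)    # per-feature mini-batch mean
    var = x.var(axis=0)    # per-feature mini-batch variance
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma * x_hat + beta


# Four samples with two features on very different scales
batch = np.array([[1.0, 200.0],
                  [2.0, 220.0],
                  [3.0, 260.0],
                  [6.0, 320.0]])
normalized = batch_norm(batch)
print(normalized.mean(axis=0))  # approximately 0 per feature
print(normalized.std(axis=0))   # approximately 1 per feature
```

After normalization both features share a comparable scale, which is what allows the following layer to train with a larger learning rate.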

Regularization techniques

Preventing overfitting is crucial when training CNNs. Common regularization methods include:

- Dropout: Randomly deactivates neurons during training

- L2 regularization (weight decay): Penalizes large weights

- Early stopping: Halts training when validation performance stops improving

```python
class RegularizedCNN(nn.Module):
    """CNN with multiple regularization techniques"""

    def __init__(self, num_classes, dropout_rate=0.5):
        super(RegularizedCNN, self).__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 64, 3, padding=1),
            nn.BatchNorm2d(64),  # Batch normalization
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2),
            nn.Dropout2d(0.25),  # Spatial dropout

            nn.Conv2d(64, 128, 3, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2),
            nn.Dropout2d(0.25),
        )

        self.classifier = nn.Sequential(
            # 128 * 7 * 7 assumes 28x28 inputs (two 2x2 poolings: 28 -> 14 -> 7)
            nn.Linear(128 * 7 * 7, 512),
            nn.BatchNorm1d(512),
            nn.ReLU(inplace=True),
            nn.Dropout(dropout_rate),
            nn.Linear(512, num_classes)
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x


# Training with L2 regularization (weight decay)
model = RegularizedCNN(num_classes=10)
optimizer = optim.Adam(model.parameters(), lr=0.001, weight_decay=1e-4)
```
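Early stopping, the third method in the list above, is independent of the model and needs only the validation loss per epoch. The following is a minimal framework-free sketch; the class name `EarlyStopping`, its `patience` and `min_delta` parameters, and the loss values are illustrative assumptions rather than a PyTorch API.

```python
class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.epochs_without_improvement = 0

    def should_stop(self, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            # Improvement: remember it and reset the counter
            self.best_loss = val_loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Made-up validation losses: improvement stalls after the third epoch
val_losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.60, 0.63, 0.64]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, val_loss in enumerate(val_losses, start=1):
    if stopper.should_stop(val_loss):
        stopped_at = epoch
        break

print(f"Stopped at epoch {stopped_at}, best validation loss {stopper.best_loss}")
```

In a real training loop one would typically also save the model weights whenever the best loss improves, so that the best checkpoint can be restored after stopping.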

Learning rate scheduling

Adjusting the learning rate during training can significantly improve convergence. Common strategies include step decay, exponential decay, and cosine annealing.

```python
from torch.optim.lr_scheduler import ReduceLROnPlateau, CosineAnnealingLR

# Reduce learning rate when validation loss plateaus
# (the verbose argument is omitted because recent PyTorch releases deprecate it)
scheduler = ReduceLROnPlateau(optimizer, mode='min', factor=0.5,
                              patience=5)

# Cosine annealing schedule
# scheduler = CosineAnnealingLR(optimizer, T_max=50, eta_min=1e-6)

# In training loop:
# for epoch in range(num_epochs):
#     train_loss = train_one_epoch(...)
#     val_loss = validate(...)
#     scheduler.step(val_loss)  # For ReduceLROnPlateau
```
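The three strategies named above (step decay, exponential decay, and cosine annealing) are simple closed-form functions of the epoch number. The sketch below writes them out in plain Python to show their shapes; the function names and default values are illustrative, and in practice PyTorch's `StepLR`, `ExponentialLR`, and `CosineAnnealingLR` schedulers would be used instead.

```python
import math


def step_decay(base_lr, epoch, step_size=10, gamma=0.1):
    """Multiply the rate by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


def exponential_decay(base_lr, epoch, gamma=0.95):
    """Multiply the rate by gamma after every epoch."""
    return base_lr * gamma ** epoch


def cosine_annealing(base_lr, epoch, total_epochs, min_lr=0.0):
    """Follow half a cosine wave from base_lr down to min_lr."""
    progress = epoch / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


for epoch in (0, 10, 25, 50):
    print(f"epoch {epoch:2d}: "
          f"step={step_decay(0.001, epoch):.6f}, "
          f"exp={exponential_decay(0.001, epoch):.6f}, "
          f"cos={cosine_annealing(0.001, epoch, total_epochs=50):.6f}")
```

Step decay drops the rate abruptly, exponential decay shrinks it smoothly from the first epoch, and cosine annealing starts slowly, falls fastest in the middle, and flattens out near the end.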

7. Conclusion

Convolutional neural networks represent one of the most significant breakthroughs in artificial intelligence, fundamentally changing how machines perceive and interpret visual information. From the foundational concepts of convolution and pooling to sophisticated architectures like AlexNet, VGG, and beyond, CNNs have proven their versatility across countless applications. Whether you’re building an image classification system, developing object detection solutions, or exploring creative applications in various domains, understanding CNN architecture and implementation techniques is essential for any AI practitioner.

As you begin working with CNNs, remember that mastery comes through experimentation and practice. Start with simple architectures, leverage transfer learning when appropriate, and gradually explore more complex models as your understanding deepens. The field continues to evolve rapidly, with new architectures and techniques emerging regularly. By building a strong foundation in the principles covered in this guide, you’ll be well-equipped to adapt to these advancements and create powerful AI solutions that push the boundaries of what’s possible with computer vision.