Deep learning for traffic forecasting: Graph and RNN approaches

Traffic congestion is one of the most pressing challenges in modern cities, costing billions in lost productivity and environmental damage. Traditional statistical forecasting methods often struggle to capture the complex spatial and temporal dependencies inherent in traffic networks. Deep learning offers an alternative: models that combine graph neural networks with recurrent neural networks can learn both kinds of dependency directly from sensor data.

This article explores how cutting-edge techniques like the diffusion convolutional recurrent neural network are transforming data-driven traffic forecasting and enabling smarter cities.

1. Understanding the traffic forecasting challenge

Traffic forecasting involves predicting future traffic conditions based on historical and real-time data collected from various sensors across road networks. Unlike simple time series prediction, traffic forecasting must account for both temporal patterns (how traffic evolves over time) and spatial dependencies (how traffic at one location affects nearby locations).

The complexity of spatial-temporal data

Traffic networks exhibit unique characteristics that make forecasting particularly challenging:

- Spatial dependencies: Traffic conditions at one intersection are influenced by upstream and downstream locations. A bottleneck at one point can cascade through the network, affecting multiple roads.

- Temporal dynamics: Traffic patterns vary throughout the day, week, and season, with rush hours, weekends, and holidays showing distinct behaviors.

- Non-Euclidean structure: Unlike images or regular grids, road networks form irregular graph structures where traditional convolutional neural networks cannot be directly applied.

Consider a highway system where an accident on the main route causes ripple effects on alternative routes miles away. This spatial-temporal interaction requires models that can capture both the network topology and temporal evolution simultaneously.

Traditional approaches and their limitations

Classical methods like ARIMA (AutoRegressive Integrated Moving Average) and Kalman filters have been the workhorses of traffic forecasting for decades. These statistical models work well for simple scenarios but struggle with:

- Non-linear relationships in traffic flow

- Complex interactions between multiple road segments

- Sudden disruptions like accidents or special events

- Large-scale networks with thousands of sensors

Machine learning methods like support vector regression improved upon statistical approaches but still treated each location independently, missing crucial spatial correlations. The breakthrough came with deep learning, which could automatically learn hierarchical representations of spatial-temporal patterns.

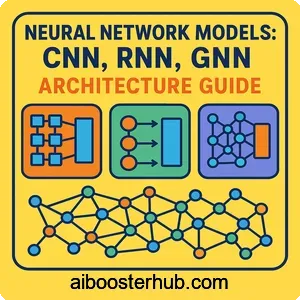

2. Graph neural networks for spatial modeling

Graph neural networks (GNNs) have emerged as a powerful framework for processing data on irregular structures like traffic networks. Unlike convolutional networks that operate on fixed-size grids, GNNs handle the variable topology of road networks naturally.

Representing traffic networks as graphs

In traffic forecasting, we model the road network as a graph \( G = (V, E) \) where:

- Vertices \( V \) represent sensors or road segments

- Edges \( E \) represent connections between locations (physical roads or proximity relationships)

- Each node \( v_i \) has feature vectors representing traffic measurements (speed, flow, occupancy)

The adjacency matrix \( A \) encodes the network structure, where \( A_{ij} = 1 \) if locations \( i \) and \( j \) are connected and \( A_{ij} = 0 \) otherwise. This matrix can be weighted to reflect distance, travel time, or correlation strength between locations.
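As a small illustration of this representation, the sketch below builds a binary and a weighted adjacency matrix for a hypothetical four-sensor corridor. The edge list, the distances, and the inverse-distance weighting are all assumptions made for the example, not values from a real network.

```python
import numpy as np

# Hypothetical directed edges: (from_sensor, to_sensor, distance_km)
edges = [(0, 1, 1.2), (1, 2, 0.8), (2, 3, 2.5), (1, 3, 3.0)]
num_nodes = 4

A = np.zeros((num_nodes, num_nodes))  # binary adjacency: A[i, j] = 1 if i -> j
W = np.zeros((num_nodes, num_nodes))  # weighted adjacency
for i, j, dist_km in edges:
    A[i, j] = 1.0
    W[i, j] = 1.0 / dist_km           # closer sensors get larger weights (one possible choice)

out_degree = A.sum(axis=1)            # number of outgoing edges per sensor
print(A)
print(out_degree)
```

Because the edges are directed, `A` is not symmetric; an undirected road graph would set both `A[i, j]` and `A[j, i]`.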

Graph convolution operations

The key innovation in GNNs is the graph convolution operation, which aggregates information from neighboring nodes much as CNNs aggregate information from nearby pixels. For a node \( i \), the convolution can be expressed as:

$$ h_i^{(l+1)} = \sigma\left(\sum_{j \in N(i)} \frac{1}{c_{ij}} W^{(l)} h_j^{(l)}\right) $$

where \( h_i^{(l)} \) is the hidden state of node \( i \) at layer \( l \), \( N(i) \) represents the neighbors of node \( i \), \( c_{ij} \) is a normalization constant, \( W^{(l)} \) is a learnable weight matrix, and \( \sigma \) is an activation function.
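A minimal NumPy sketch of one such layer follows. It assumes mean aggregation (so \( c_{ij} \) is the neighborhood size), adds self-loops so that each node keeps its own features, and uses ReLU for \( \sigma \); these are illustrative choices rather than the only valid ones.

```python
import numpy as np

def graph_conv_layer(H, A, W):
    """One mean-aggregation graph convolution: ReLU(mean over neighbours of h_j, times W)."""
    A_hat = A + np.eye(A.shape[0])            # self-loops (assumption, not in the formula above)
    deg = A_hat.sum(axis=1, keepdims=True)    # normalization constant c_ij = neighbourhood size
    H_agg = (A_hat / deg) @ H                 # average neighbour features
    return np.maximum(H_agg @ W, 0.0)         # linear map followed by ReLU

# Undirected path graph 0 - 1 - 2 with one scalar feature per node
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
H = np.array([[1.0], [2.0], [3.0]])
W = np.array([[1.0]])
out = graph_conv_layer(H, A, W)
print(out.ravel())  # [1.5 2.  2.5]
```

Each node's new value is the average of itself and its neighbors, which is why the end nodes move toward the middle value.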

Spectral graph convolutions

Spectral approaches leverage graph signal processing theory to define convolutions in the spectral domain using the graph Laplacian. The graph Laplacian is defined as \( L = D - A \), where \( D \) is the degree matrix. Spectral convolutions can be expressed as:

$$ g_\theta \star x = U g_\theta U^T x $$

where \( U \) is the matrix of eigenvectors of \( L \), and \( g_\theta \) is a learnable diagonal filter applied to the graph Fourier coefficients \( U^T x \).

For traffic networks, spectral methods can capture global patterns, but the eigendecomposition of \( L \) is computationally expensive for large graphs. This led to localized approximations based on Chebyshev polynomials of the Laplacian, which avoid the eigendecomposition.
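To make the spectral formula concrete, the following sketch filters a signal on a three-node path graph. The filter \( g_\theta(\lambda) = e^{-0.5\lambda} \) is a hypothetical fixed low-pass filter chosen for illustration; in a trained model the filter coefficients would be learned.

```python
import numpy as np

# Undirected path graph 0 - 1 - 2
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
D = np.diag(A.sum(axis=1))
L = D - A                                   # combinatorial graph Laplacian

eigvals, U = np.linalg.eigh(L)              # L is symmetric, so eigh applies
g_theta = np.diag(np.exp(-0.5 * eigvals))   # hypothetical low-pass spectral filter

x = np.array([1.0, 0.0, 0.0])               # signal concentrated on node 0
y = U @ g_theta @ U.T @ x                   # transform, filter, transform back
print(np.round(eigvals, 6))
print(y)
```

The filtered signal `y` spreads the mass from node 0 onto its neighbors, and the `np.linalg.eigh` call is the step whose cost grows quickly with the number of nodes.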

Diffusion convolution

The diffusion convolutional layer, a key component in the diffusion convolutional recurrent neural network, uses a different approach inspired by diffusion processes. It models how information spreads through the graph over multiple hops:

$$ H = \sum_{k=0}^{K} P^k X W_k $$

where \( P \) is the transition matrix (often derived from the adjacency matrix), \( X \) is the input signal, \( K \) is the number of diffusion steps, and \( W_k \) are learnable parameters. This captures how traffic conditions diffuse through the network, similar to how congestion propagates from one road segment to neighboring segments.
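The sum can be computed without forming the matrix powers explicitly by applying \( P \) repeatedly. The sketch below assumes \( P \) is the row-normalized adjacency matrix (a random-walk transition matrix) and uses identity weights on a toy directed cycle; both are assumptions for illustration.

```python
import numpy as np

def diffusion_conv(X, A, weights):
    """Compute H = sum_k P^k X W_k, with P the row-normalized adjacency matrix."""
    out_deg = A.sum(axis=1, keepdims=True)
    P = A / np.maximum(out_deg, 1e-12)       # rows without outgoing edges stay zero
    H = np.zeros((X.shape[0], weights[0].shape[1]))
    Z = X.copy()                             # Z holds P^k X, starting with k = 0
    for W_k in weights:
        H += Z @ W_k
        Z = P @ Z                            # advance one diffusion step
    return H

# Directed cycle 0 -> 1 -> 2 -> 0, signal on node 0, K = 2
A = np.array([[0, 1, 0],
              [0, 0, 1],
              [1, 0, 0]], dtype=float)
X = np.array([[1.0], [0.0], [0.0]])
weights = [np.array([[1.0]]) for _ in range(3)]  # W_0, W_1, W_2
H = diffusion_conv(X, A, weights)
print(H.ravel())  # [1. 1. 1.]
```

After two diffusion steps the signal that started on node 0 has reached every node of the cycle, so each node receives a contribution of 1.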

3. Recurrent neural networks for temporal modeling

While graph neural networks excel at capturing spatial dependencies, recurrent neural networks are the go-to architecture for modeling temporal sequences. In traffic forecasting, we need to capture how traffic evolves over time, including short-term fluctuations and longer-term trends.

The power of recurrence

Recurrent neural networks maintain a hidden state that gets updated at each time step, allowing them to remember past information. The basic RNN update is:

$$ h_t = \sigma(W_{hh} h_{t-1} + W_{xh} x_t + b) $$

where \( h_t \) is the hidden state at time \( t \), \( x_t \) is the input, \( W_{hh}, W_{xh} \) are weight matrices, and \( b \) is a bias vector.
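Unrolled over a sequence, the update rule is a simple loop. This sketch assumes \( \sigma = \tanh \) and a zero initial hidden state, which are common defaults but not the only options.

```python
import numpy as np

def rnn_forward(xs, W_hh, W_xh, b):
    """Apply h_t = tanh(W_hh h_{t-1} + W_xh x_t + b) over a sequence xs of shape (T, input_dim)."""
    h = np.zeros(W_hh.shape[0])          # initial hidden state (assumed zero)
    states = []
    for x_t in xs:
        h = np.tanh(W_hh @ h + W_xh @ x_t + b)
        states.append(h)
    return np.stack(states)              # shape (T, hidden_dim)

rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 3))             # 5 time steps, 3 input features
states = rnn_forward(xs, rng.normal(size=(4, 4)), rng.normal(size=(4, 3)), np.zeros(4))
print(states.shape)  # (5, 4)
```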

However, basic RNNs suffer from vanishing gradients when dealing with long sequences, making it difficult to capture long-term dependencies like weekly patterns in traffic.

LSTM and GRU cells

Long Short-Term Memory networks and Gated Recurrent Units address the vanishing gradient problem through gating mechanisms that control information flow. An LSTM cell maintains a cell state \( c_t \) and uses three gates:

- Forget gate: decides what information to discard from the cell state

- Input gate: decides what new information to store

- Output gate: decides what to output based on the cell state

The LSTM equations are:

$$ \begin{align}
f_t &= \sigma\big(W_f \cdot [h_{t-1}, x_t] + b_f\big) \\
i_t &= \sigma\big(W_i \cdot [h_{t-1}, x_t] + b_i\big) \\
\tilde{c}_t &= \tanh\big(W_c \cdot [h_{t-1}, x_t] + b_c\big) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma\big(W_o \cdot [h_{t-1}, x_t] + b_o\big) \\
h_t &= o_t \odot \tanh(c_t)
\end{align} $$

where \( \odot \) denotes element-wise multiplication.
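The six equations translate line by line into a single-step function. In this sketch the weights and biases are stored in dictionaries keyed by gate name, which is a layout chosen for readability rather than how deep learning libraries store them.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step. W[k] has shape (hidden, hidden + input) for k in 'f', 'i', 'c', 'o'."""
    z = np.concatenate([h_prev, x_t])          # [h_{t-1}, x_t]
    f = sigmoid(W["f"] @ z + b["f"])           # forget gate
    i = sigmoid(W["i"] @ z + b["i"])           # input gate
    c_tilde = np.tanh(W["c"] @ z + b["c"])     # candidate cell state
    c = f * c_prev + i * c_tilde               # new cell state
    o = sigmoid(W["o"] @ z + b["o"])           # output gate
    h = o * np.tanh(c)                         # new hidden state
    return h, c

hidden, inp = 2, 3
W = {k: np.zeros((hidden, hidden + inp)) for k in "fico"}
b = {k: np.zeros(hidden) for k in "fico"}
h, c = lstm_step(np.ones(inp), np.zeros(hidden), np.ones(hidden), W, b)
print(h, c)
```

With all-zero weights every gate evaluates to 0.5 and the candidate to 0, so the cell state is simply halved; this makes the role of the forget gate easy to see.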

Sequence-to-sequence architectures

For traffic forecasting, we typically use an encoder-decoder architecture:

- Encoder: processes historical traffic data (e.g., past hour) and compresses it into a context vector

- Decoder: generates future predictions (e.g., next 30 minutes) based on the context

This sequence-to-sequence approach allows the model to learn complex temporal patterns and make multi-step ahead predictions.
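Training such a model requires slicing the sensor history into (input, target) pairs. The sketch below assumes readings arrive every 5 minutes, so 12 input steps cover the past hour and 6 target steps cover the next 30 minutes; the function name and defaults are illustrative.

```python
import numpy as np

def make_windows(series, input_len=12, horizon=6):
    """Slice a (T, N) series into encoder inputs (S, input_len, N) and decoder targets (S, horizon, N)."""
    num_samples = series.shape[0] - input_len - horizon + 1
    X = np.stack([series[s:s + input_len] for s in range(num_samples)])
    Y = np.stack([series[s + input_len:s + input_len + horizon] for s in range(num_samples)])
    return X, Y

series = np.arange(40, dtype=float).reshape(20, 2)   # 20 time steps, 2 sensors
X, Y = make_windows(series)
print(X.shape, Y.shape)  # (3, 12, 2) (3, 6, 2)
```

Each target window starts exactly where its input window ends, so no future information leaks into the encoder.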

4. Diffusion convolutional recurrent neural network

The diffusion convolutional recurrent neural network (DCRNN) integrates spatial and temporal modeling in a single architecture. It combines diffusion convolution for capturing spatial dependencies with recurrent units for temporal dynamics.

Architecture overview

The DCRNN replaces the matrix multiplications in recurrent cells with diffusion convolution operations. Instead of simple linear transformations, each gate in the recurrent cell performs graph convolutions that aggregate information from neighboring nodes.

For a GRU-based DCRNN, the update equations become:

$$ \begin{align}
r_t &= \sigma(\Theta_r \star_G [X_t, H_{t-1}] + b_r) \\
u_t &= \sigma(\Theta_u \star_G [X_t, H_{t-1}] + b_u) \\
C_t &= \tanh(\Theta_c \star_G [X_t, (r_t \odot H_{t-1})] + b_c) \\
H_t &= u_t \odot H_{t-1} + (1 - u_t) \odot C_t
\end{align} $$

where \( \star_G \) denotes the diffusion convolution operation, \( X_t \) is the input at time \( t \), \( H_t \) is the hidden state, and \( r_t, u_t \) are the reset and update gates respectively.

Bidirectional diffusion

A key innovation in DCRNN is bidirectional diffusion, which captures both forward and backward information propagation in the graph. Traffic flow can propagate both downstream (following traffic direction) and upstream (congestion backing up), so the model uses two separate diffusion processes:

$$ H = \sum_{k=0}^{K} (P_f^k X W_{k,f} + P_b^k X W_{k,b}) $$

where \( P_f \) is the forward transition matrix and \( P_b \) is the backward transition matrix. This allows the model to capture how traffic conditions spread in both directions through the network.
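One common construction for the two matrices, assumed here, normalizes the adjacency matrix by out-degree for the forward walk and its transpose by in-degree for the backward walk:

$$ P_f = D_O^{-1} A, \qquad P_b = D_I^{-1} A^T $$

where \( D_O \) and \( D_I \) are the diagonal out-degree and in-degree matrices. A NumPy sketch on a hypothetical three-node directed graph:

```python
import numpy as np

def row_normalize(M):
    """Divide each row by its sum so that rows form probability distributions."""
    row_sum = M.sum(axis=1, keepdims=True)
    return M / np.maximum(row_sum, 1e-12)

# Hypothetical directed graph: 0 -> 1, 0 -> 2, 1 -> 2, 2 -> 0
A = np.array([[0, 1, 1],
              [0, 0, 1],
              [1, 0, 0]], dtype=float)

P_f = row_normalize(A)      # forward walk: follow edges in the traffic direction
P_b = row_normalize(A.T)    # backward walk: follow edges against the traffic direction
print(P_f)
print(P_b)
```

Row `i` of `P_b` is normalized by the in-degree of node `i`, so both matrices have rows that sum to one.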

Scheduled sampling for training

Training sequence-to-sequence models can be challenging due to exposure bias: the model only sees ground truth during training but must use its own predictions during inference. DCRNN employs scheduled sampling, gradually transitioning from ground truth to model predictions during training. A simplified PyTorch implementation of the architecture follows:

```python
import numpy as np
import torch
import torch.nn as nn


class DCRNN(nn.Module):
    def __init__(self, num_nodes, input_dim, hidden_dim, output_dim, num_layers, max_diffusion_step):
        super().__init__()
        self.num_nodes = num_nodes
        self.hidden_dim = hidden_dim
        self.output_dim = output_dim
        self.num_layers = num_layers
        self.max_diffusion_step = max_diffusion_step

        # Encoder layers
        self.encoder_cells = nn.ModuleList([
            DCGRUCell(num_nodes, input_dim if i == 0 else hidden_dim,
                      hidden_dim, max_diffusion_step)
            for i in range(num_layers)
        ])

        # Decoder layers (the first layer consumes the previous prediction)
        self.decoder_cells = nn.ModuleList([
            DCGRUCell(num_nodes, output_dim if i == 0 else hidden_dim,
                      hidden_dim, max_diffusion_step)
            for i in range(num_layers)
        ])

        # Output projection
        self.projection = nn.Linear(hidden_dim, output_dim)

    def forward(self, inputs, adj_matrix, targets=None, teacher_forcing_ratio=0.5):
        """
        Args:
            inputs: (batch_size, seq_len, num_nodes, input_dim)
            adj_matrix: (num_nodes, num_nodes)
            targets: (batch_size, horizon, num_nodes, output_dim)
            teacher_forcing_ratio: probability of using ground truth
        """
        batch_size, seq_len, num_nodes, _ = inputs.shape

        # Encoding
        encoder_hidden_states = [None] * self.num_layers
        for t in range(seq_len):
            for layer in range(self.num_layers):
                inputs_layer = inputs[:, t] if layer == 0 else encoder_hidden_states[layer - 1]
                encoder_hidden_states[layer] = self.encoder_cells[layer](
                    inputs_layer, encoder_hidden_states[layer], adj_matrix
                )

        # Decoding with scheduled sampling
        outputs = []
        decoder_hidden_states = list(encoder_hidden_states)  # copy the list, not the tensors
        # Use the last observation as the first decoder input; this assumes the
        # first output_dim input features are the quantities being forecast.
        decoder_input = inputs[:, -1, :, :self.output_dim]
        horizon = targets.shape[1] if targets is not None else 12

        for t in range(horizon):
            for layer in range(self.num_layers):
                inputs_layer = decoder_input if layer == 0 else decoder_hidden_states[layer - 1]
                decoder_hidden_states[layer] = self.decoder_cells[layer](
                    inputs_layer, decoder_hidden_states[layer], adj_matrix
                )

            # Project to output
            output = self.projection(decoder_hidden_states[-1])
            outputs.append(output)

            # Scheduled sampling: feed ground truth only while training
            use_truth = (self.training and targets is not None
                         and np.random.random() < teacher_forcing_ratio)
            decoder_input = targets[:, t] if use_truth else output

        return torch.stack(outputs, dim=1)


class DCGRUCell(nn.Module):
    def __init__(self, num_nodes, input_dim, hidden_dim, max_diffusion_step):
        super().__init__()
        self.num_nodes = num_nodes
        self.hidden_dim = hidden_dim
        self.max_diffusion_step = max_diffusion_step

        # Parameters for reset and update gates
        self.graph_conv_gate = DiffusionGraphConv(
            input_dim + hidden_dim, hidden_dim * 2, max_diffusion_step
        )
        # Parameters for candidate activation
        self.graph_conv_candidate = DiffusionGraphConv(
            input_dim + hidden_dim, hidden_dim, max_diffusion_step
        )

    def forward(self, inputs, hidden_state, adj_matrix):
        if hidden_state is None:
            hidden_state = torch.zeros(
                inputs.shape[0], self.num_nodes, self.hidden_dim,
                device=inputs.device, dtype=inputs.dtype
            )

        # Concatenate input and hidden state
        combined = torch.cat([inputs, hidden_state], dim=-1)

        # Compute reset and update gates
        combined_conv = self.graph_conv_gate(combined, adj_matrix)
        r, u = torch.split(combined_conv, self.hidden_dim, dim=-1)
        r = torch.sigmoid(r)
        u = torch.sigmoid(u)

        # Compute candidate activation
        combined_candidate = torch.cat([inputs, r * hidden_state], dim=-1)
        c = torch.tanh(self.graph_conv_candidate(combined_candidate, adj_matrix))

        # Compute new hidden state
        return u * hidden_state + (1 - u) * c


class DiffusionGraphConv(nn.Module):
    def __init__(self, input_dim, output_dim, max_diffusion_step):
        super().__init__()
        self.max_diffusion_step = max_diffusion_step

        # Weights for forward and backward diffusion
        self.weight_forward = nn.Parameter(
            torch.empty(input_dim * (max_diffusion_step + 1), output_dim)
        )
        self.weight_backward = nn.Parameter(
            torch.empty(input_dim * (max_diffusion_step + 1), output_dim)
        )
        self.bias = nn.Parameter(torch.empty(output_dim))
        self.reset_parameters()

    def reset_parameters(self):
        nn.init.xavier_uniform_(self.weight_forward)
        nn.init.xavier_uniform_(self.weight_backward)
        nn.init.zeros_(self.bias)

    def forward(self, inputs, adj_matrix):
        # inputs: (batch_size, num_nodes, input_dim)
        # Row-normalized transition matrices: out-degree for the forward walk,
        # in-degree (row sums of the transpose) for the backward walk
        adj_forward = adj_matrix / (adj_matrix.sum(dim=1, keepdim=True) + 1e-6)
        adj_t = adj_matrix.t()
        adj_backward = adj_t / (adj_t.sum(dim=1, keepdim=True) + 1e-6)

        # Diffusion process: supports[k] = P^k X
        supports_forward = [inputs]
        supports_backward = [inputs]
        for _ in range(self.max_diffusion_step):
            supports_forward.append(torch.matmul(adj_forward, supports_forward[-1]))
            supports_backward.append(torch.matmul(adj_backward, supports_backward[-1]))

        # Concatenate all diffusion steps along the feature axis
        x_forward = torch.cat(supports_forward, dim=-1)
        x_backward = torch.cat(supports_backward, dim=-1)

        # Apply weights
        output_forward = torch.matmul(x_forward, self.weight_forward)
        output_backward = torch.matmul(x_backward, self.weight_backward)
        return output_forward + output_backward + self.bias
```

This implementation demonstrates the core components of DCRNN, including the diffusion graph convolution and the recurrent cell structure that integrates spatial and temporal processing.
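The `forward` method above takes a fixed `teacher_forcing_ratio`, so the "scheduled" part has to come from the training loop. One commonly used schedule is inverse sigmoid decay, sketched below; the function name and the value of `tau` are assumptions for illustration and would need tuning for a given dataset.

```python
import math

def teacher_forcing_probability(step, tau=2000.0):
    """Inverse sigmoid decay: tau / (tau + exp(step / tau)).

    Starts close to 1 (mostly ground truth) and decays toward 0
    (mostly model predictions) as training progresses.
    """
    exponent = min(step / tau, 700.0)   # cap the exponent to avoid float overflow
    return tau / (tau + math.exp(exponent))

for step in (0, 5000, 20000, 50000):
    print(step, teacher_forcing_probability(step))
```

In a training loop, the returned value would be passed as `teacher_forcing_ratio` at each step, with `step` counting the batches seen so far.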

5. Spatio-temporal graph convolutional networks

Building on similar principles, spatio-temporal graph convolutional networks (STGCN) offer another powerful framework for traffic forecasting. The key difference from DCRNN is that STGCN uses purely convolutional operations for both spatial and temporal dimensions, avoiding recurrence entirely.

Temporal gated convolution

Instead of RNNs, STGCN employs temporal gated convolutions inspired by the gated linear units used in sequence modeling. For a temporal sequence of graph signals, the temporal convolution applies 1D convolutions along the time axis:

$$ \Gamma = P \odot \sigma(Q) $$

where \( P \) and \( Q \) are obtained by convolving the input with different filters, and \( \sigma\) is typically a sigmoid function. This gating mechanism allows the model to control information flow without the computational overhead of recurrence.

ST-Conv blocks

The building block of STGCN is the ST-Conv block, which sandwiches a spatial graph convolution between two temporal convolutions:

- Temporal convolution layer: captures temporal dependencies

- Spatial graph convolution layer: captures spatial dependencies

- Temporal convolution layer: further processes temporal information

This “sandwich” structure effectively captures spatio-temporal correlations. The output of an ST-Conv block for a graph signal \( X \in \mathbb{R}^{N \times T \times C} \) (N nodes, T time steps, C channels) can be expressed as:

$$ H^{(l)} = \text{TConv}_2(\text{GConv}(\text{TConv}_1(H^{(l-1)}))) $$

Advantages over recurrent approaches

STGCN offers several benefits compared to recurrent architectures:

- Parallel computation: All temporal convolutions can be computed in parallel, unlike RNNs which must process sequentially

- Stable training: Avoids the vanishing and exploding gradients that arise when backpropagating through long recurrent chains

- Faster inference: No need to maintain and update hidden states

- Flexible receptive field: Stacking multiple ST-Conv blocks increases the receptive field in both space and time

For real-time traffic forecasting applications where latency is critical, STGCN’s computational efficiency makes it particularly attractive.

Implementation example

Here’s a simplified implementation of an STGCN layer:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TemporalConvLayer(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size=3):
        super().__init__()
        # Padding keeps the number of time steps unchanged for odd kernel sizes
        self.conv = nn.Conv2d(in_channels, out_channels,
                              kernel_size=(1, kernel_size),
                              padding=(0, (kernel_size - 1) // 2))
        self.gate_conv = nn.Conv2d(in_channels, out_channels,
                                   kernel_size=(1, kernel_size),
                                   padding=(0, (kernel_size - 1) // 2))

    def forward(self, x):
        # x shape: (batch, channels, nodes, time)
        P = self.conv(x)
        Q = self.gate_conv(x)
        return P * torch.sigmoid(Q)  # gated linear unit


class SpatialGraphConvLayer(nn.Module):
    def __init__(self, in_channels, out_channels, num_nodes):
        super().__init__()
        self.theta = nn.Parameter(torch.empty(in_channels, out_channels))
        nn.init.xavier_uniform_(self.theta)

    def forward(self, x, adj_matrix):
        # x shape: (batch, channels, nodes, time)
        batch_size, in_channels, num_nodes, time_steps = x.shape

        # Reshape for matrix multiplication: (batch * time, nodes, channels)
        x_reshaped = x.permute(0, 3, 2, 1).reshape(-1, num_nodes, in_channels)

        # Graph convolution: A_norm @ X @ Theta, using the symmetrically
        # normalized adjacency matrix D^{-1/2} A D^{-1/2}
        degree = torch.sum(adj_matrix, dim=1).clamp(min=1e-6)  # guard isolated nodes
        D_sqrt_inv = torch.diag(torch.pow(degree, -0.5))
        A_norm = torch.matmul(torch.matmul(D_sqrt_inv, adj_matrix), D_sqrt_inv)

        # Apply graph convolution
        output = torch.matmul(torch.matmul(A_norm, x_reshaped), self.theta)

        # Reshape back to (batch, channels, nodes, time)
        output = output.reshape(batch_size, time_steps, num_nodes, -1)
        return output.permute(0, 3, 2, 1)


class STConvBlock(nn.Module):
    def __init__(self, in_channels, out_channels, num_nodes, kernel_size=3):
        super().__init__()
        self.temporal1 = TemporalConvLayer(in_channels, out_channels, kernel_size)
        self.spatial = SpatialGraphConvLayer(out_channels, out_channels, num_nodes)
        self.temporal2 = TemporalConvLayer(out_channels, out_channels, kernel_size)
        self.batch_norm = nn.BatchNorm2d(out_channels)

    def forward(self, x, adj_matrix):
        # x shape: (batch, channels, nodes, time)
        t1 = self.temporal1(x)
        s = self.spatial(t1, adj_matrix)
        t2 = self.temporal2(s)
        out = self.batch_norm(t2)
        return F.relu(out)


class STGCN(nn.Module):
    def __init__(self, num_nodes, in_channels, hidden_channels, out_channels,
                 num_blocks=2, kernel_size=3):
        super().__init__()
        self.st_blocks = nn.ModuleList([
            STConvBlock(in_channels if i == 0 else hidden_channels,
                        hidden_channels, num_nodes, kernel_size)
            for i in range(num_blocks)
        ])
        self.output_layer = nn.Conv2d(hidden_channels, out_channels,
                                      kernel_size=(1, 1))

    def forward(self, x, adj_matrix):
        # x shape: (batch, channels, nodes, time)
        for block in self.st_blocks:
            x = block(x, adj_matrix)
        # Output projection
        return self.output_layer(x)


# Example usage
num_nodes = 207
input_dim = 1  # e.g., traffic speed
hidden_dim = 64
output_dim = 1
sequence_length = 12

model = STGCN(num_nodes, input_dim, hidden_dim, output_dim, num_blocks=2)
adj = torch.rand(num_nodes, num_nodes)  # Placeholder adjacency matrix
x = torch.randn(32, input_dim, num_nodes, sequence_length)  # Batch of sequences
output = model(x, adj)
print(f"Output shape: {output.shape}")  # torch.Size([32, 1, 207, 12])
```

6. Practical considerations and applications

Implementing deep learning models for traffic forecasting involves several practical considerations beyond just model architecture. Success in real-world deployments requires attention to data preprocessing, graph construction, training strategies, and evaluation metrics.

Data preprocessing and feature engineering

Traffic data often comes from loop detectors, GPS traces, or traffic cameras, each with unique characteristics and noise patterns. Essential preprocessing steps include:

- Missing value imputation: Sensors frequently fail or report erroneous readings. Common strategies include linear interpolation, seasonal decomposition, or using neighboring sensors to fill gaps.

- Normalization: Traffic speeds and flows vary significantly across different road types. Z-score normalization per sensor helps stabilize training: \( x_{norm} = \frac{x - \mu}{\sigma} \)

- Temporal features: Adding time-of-day, day-of-week, and holiday indicators as additional input features helps capture periodic patterns

- Weather integration: External factors like rain, snow, or special events significantly impact traffic and can be incorporated as auxiliary features
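The first three steps can be sketched in a few lines of NumPy. The example assumes missing readings are marked as NaN and that data arrives at 5-minute intervals (288 steps per day); the function names are illustrative, and the sine/cosine encoding is one of several ways to represent time of day.

```python
import numpy as np

def interpolate_missing(column):
    """Linearly interpolate NaNs in a 1-D series; gaps at the edges take the nearest valid value."""
    idx = np.arange(len(column))
    valid = ~np.isnan(column)
    return np.interp(idx, idx[valid], column[valid])

def zscore_per_sensor(data):
    """Normalize a (T, N) array per sensor, ignoring NaNs when computing statistics."""
    mean = np.nanmean(data, axis=0)
    std = np.nanstd(data, axis=0)
    std = np.where(std < 1e-8, 1.0, std)      # avoid dividing by zero for constant sensors
    return (data - mean) / std, mean, std

def time_of_day_features(num_steps, steps_per_day=288):
    """Sine/cosine encoding of time of day; 288 assumes 5-minute sampling."""
    phase = 2 * np.pi * (np.arange(num_steps) % steps_per_day) / steps_per_day
    return np.stack([np.sin(phase), np.cos(phase)], axis=1)

speeds = np.array([[60.0, 30.0],
                   [np.nan, 32.0],
                   [50.0, 34.0]])            # (time, sensors), one missing reading
filled = np.column_stack([interpolate_missing(speeds[:, n]) for n in range(speeds.shape[1])])
normalized, mean, std = zscore_per_sensor(filled)
features = time_of_day_features(filled.shape[0])
print(filled[:, 0])  # [60. 55. 50.]
```

The returned `mean` and `std` should be kept so that model outputs can be mapped back to physical units before computing error metrics.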

Graph construction strategies

The choice of graph structure significantly impacts model performance. Several approaches exist:

- Distance-based: Connect nodes within a threshold distance (e.g., 10 km radius)

- Road network connectivity: Use actual road connections from OpenStreetMap or similar sources

- Correlation-based: Compute pairwise correlations from historical data and threshold to create edges

- Adaptive graphs: Learn the graph structure jointly with the forecasting task

For large networks with thousands of nodes, sparse graphs are essential for computational efficiency. Adaptive thresholding or k-nearest neighbors approaches help maintain sparsity while preserving important connections.
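As an example of the distance-based strategy, a thresholded Gaussian kernel is a frequently used way to turn pairwise road distances into sparse edge weights. The sketch below assumes unreachable pairs are marked with `np.inf` and sets the kernel width to the standard deviation of the finite distances; the threshold of 0.1 is an illustrative default.

```python
import numpy as np

def gaussian_kernel_adjacency(dist, threshold=0.1):
    """W_ij = exp(-(dist_ij / sigma)^2), with entries below the threshold set to zero."""
    sigma = dist[np.isfinite(dist)].std()     # kernel width from the finite distances
    W = np.exp(-np.square(dist / sigma))      # infinite distances map to weight 0
    W[W < threshold] = 0.0                    # sparsify: drop weak connections
    return W

# Hypothetical pairwise road distances in km for three sensors
dist = np.array([[0.0, 1.0, 10.0],
                 [1.0, 0.0, np.inf],
                 [10.0, np.inf, 0.0]])
W = gaussian_kernel_adjacency(dist)
print(W)
```

Raising the threshold yields a sparser graph, which reduces the cost of every graph convolution at the risk of dropping informative long-range links.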

Training strategies

Training deep spatial-temporal models requires careful tuning:

- Loss functions: Mean Absolute Error (MAE) is commonly used because it is less sensitive to outliers than squared-error losses, but weighted losses can emphasize certain time periods or locations

- Curriculum learning: Start with short prediction horizons and gradually increase to longer horizons

- Multi-task learning: Training to predict multiple outputs (speed, flow, occupancy) simultaneously can improve generalization

- Data augmentation: Temporal shifting, adding noise, or creating synthetic scenarios helps prevent overfitting

Evaluation metrics

Traffic forecasting models are typically evaluated using:

- Mean Absolute Error (MAE): \( \frac{1}{n}\sum_{i=1}^{n}|y_i - \hat{y}_i| \)

- Root Mean Square Error (RMSE): \( \sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2} \)

- Mean Absolute Percentage Error (MAPE): \( \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right| \)

It’s important to report metrics across different prediction horizons (e.g., 15, 30, 60 minutes ahead) since accuracy typically degrades for longer horizons.
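The three metrics are straightforward to compute, with one practical caveat: MAPE is undefined where the true value is zero, and sensor outages are often recorded as zeros. The sketch below therefore masks out entries equal to a `null_value` before computing any metric; treating zeros as missing is an assumption that should be checked against how a given dataset encodes gaps.

```python
import numpy as np

def masked_metrics(y_true, y_pred, null_value=0.0):
    """Return (MAE, RMSE, MAPE in percent), ignoring entries where y_true equals null_value."""
    mask = y_true != null_value
    err = y_pred[mask] - y_true[mask]
    mae = np.abs(err).mean()
    rmse = np.sqrt(np.square(err).mean())
    mape = 100.0 * np.abs(err / y_true[mask]).mean()
    return mae, rmse, mape

y_true = np.array([50.0, 0.0, 100.0])    # the zero is treated as a missing reading
y_pred = np.array([45.0, 10.0, 110.0])
mae, rmse, mape = masked_metrics(y_true, y_pred)
print(mae, rmse, mape)  # 7.5, about 7.906, 10.0
```

To report results per horizon, the same function can be applied separately to the slice of predictions for each forecast step.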

Real-world applications

Deep learning traffic forecasting has been successfully deployed in various scenarios:

- Intelligent transportation systems: Real-time routing and signal control based on predicted traffic conditions

- Navigation apps: Services such as Google Maps and Waze rely on traffic prediction to provide travel time estimates, and Google has publicly described using graph neural networks for this purpose

- City planning: Urban planners use traffic forecasts to evaluate infrastructure projects and policy changes

- Ride-sharing optimization: Platforms like Uber and Lyft predict demand and supply to optimize driver positioning

One compelling case involves a major metropolitan area that deployed DCRNN-based forecasting to manage highway traffic during peak hours. By predicting congestion 30 minutes in advance, traffic management systems could proactively adjust ramp metering rates, reducing average travel times by 12% during rush hour.

7. Conclusion

The fusion of graph neural networks and recurrent neural networks has revolutionized traffic forecasting, enabling accurate predictions that account for complex spatial-temporal dependencies. The diffusion convolutional recurrent neural network and spatio-temporal graph convolutional networks represent significant advances in data-driven traffic forecasting, offering powerful tools for understanding and managing urban mobility.

These deep learning approaches continue to evolve, with ongoing research exploring attention mechanisms, transfer learning across cities, and integration with reinforcement learning for traffic control. As cities grow smarter and sensor networks become more pervasive, the importance of accurate traffic forecasting will only increase, making these techniques essential tools for building sustainable, efficient urban transportation systems.