Encoder Decoder Architecture: Complete Guide to Transformers

Understanding the encoder decoder architecture is fundamental to grasping how modern AI systems process and generate language. From machine translation to text summarization, this architectural pattern powers some of the most impressive natural language processing applications we use daily. Whether you’re wondering “what is an encoder?” or seeking to understand how transformer encoders work, this comprehensive guide will walk you through everything you need to know about encoders, decoders, and their collaborative relationship.

1. What is an encoder and decoder?

Encoder meaning and core concepts

An encoder is a neural network component that transforms input data into a compressed, meaningful representation called an embedding or latent representation. Think of an encoder as a translator that converts raw information—like text, images, or audio—into a dense mathematical format that captures the essential features and patterns of the input.

An encoder does more than compress. During training it learns to capture semantic information, contextual relationships, and hierarchical features of the input. For instance, a trained text encoder doesn't just map words to numbers: it tends to place related words such as "king" and "queen" close together in its representation space, and to reflect that "running" and "ran" are forms of the same verb.

Here’s a simple example of how an encoder processes text:

```python
import torch
import torch.nn as nn


class SimpleEncoder(nn.Module):
    def __init__(self, vocab_size, embedding_dim, hidden_dim):
        super(SimpleEncoder, self).__init__()
        self.embedding = nn.Embedding(vocab_size, embedding_dim)
        self.lstm = nn.LSTM(embedding_dim, hidden_dim, batch_first=True)

    def forward(self, input_sequence):
        # Convert tokens to embeddings
        embedded = self.embedding(input_sequence)
        # Process through LSTM to get encoded representation
        output, (hidden, cell) = self.lstm(embedded)
        # Return the final hidden state as the encoded representation
        return hidden


# Example usage
vocab_size = 10000
encoder = SimpleEncoder(vocab_size=vocab_size, embedding_dim=256, hidden_dim=512)
input_tokens = torch.randint(0, vocab_size, (1, 10))  # Batch of 1, sequence length 10
encoded_representation = encoder(input_tokens)
# hidden has shape [num_layers, batch, hidden_dim]
print(f"Encoded shape: {encoded_representation.shape}")  # Output: [1, 1, 512]
```

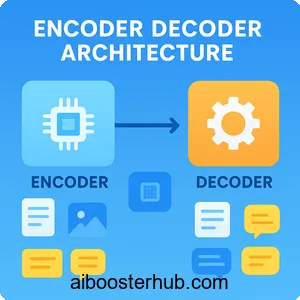

The decoder’s role

A decoder plays the complementary role. It takes the representation produced by an encoder and generates an output sequence, whether that's translated text, a summary, or a response to a query. Where the encoder's job is understanding and compression, the decoder's job is generation.

Decoders in these models are autoregressive: they generate output one token at a time, feeding each generated token back in as context for the next. Conditioning every prediction on the tokens already produced is what lets the decoder keep its output fluent and coherent.
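The token-by-token loop can be made concrete with a minimal greedy-decoding sketch. The `step_fn` callable, the `BOS`/`EOS` ids, and the toy transition table are hypothetical stand-ins for a trained decoder. A real decoder would compute next-token scores from its hidden state and the encoder output.

```python
from typing import Callable, List, Sequence


def greedy_decode(
    step_fn: Callable[[List[int]], Sequence[float]],
    bos_id: int,
    eos_id: int,
    max_len: int = 20,
) -> List[int]:
    """Generate tokens one at a time, always picking the highest-scoring next token."""
    tokens = [bos_id]
    for _ in range(max_len):
        scores = step_fn(tokens)  # one score per vocabulary item, given the prefix so far
        next_id = max(range(len(scores)), key=lambda i: scores[i])
        tokens.append(next_id)  # the new token becomes context for the next step
        if next_id == eos_id:  # stop once the end-of-sequence token is produced
            break
    return tokens[1:]  # drop the start token


# Hypothetical toy "decoder": the next token depends only on the previous one.
BOS, EOS = 0, 1
transitions = {0: 2, 2: 3, 3: 4, 4: 1}  # BOS -> 2 -> 3 -> 4 -> EOS


def toy_step(prefix: List[int]) -> List[float]:
    scores = [0.0] * 5
    scores[transitions[prefix[-1]]] = 1.0
    return scores


print(greedy_decode(toy_step, BOS, EOS))  # [2, 3, 4, 1]
```

Greedy decoding is the simplest strategy. Because it commits to one token per step, it can miss sequences that are more probable overall.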

Why use encoder decoder architecture?

The encoder decoder architecture separates the understanding phase from the generation phase, providing several advantages:

- Flexibility in input/output lengths: The input sequence and output sequence can have different lengths, essential for tasks like translation where sentences vary across languages.

- Better representation learning: The encoder can focus solely on understanding input, while the decoder specializes in generation.

- Attention mechanisms: The architecture enables sophisticated attention patterns where the decoder can selectively focus on relevant parts of the encoded input.

2. Understanding encoder decoder architecture

Sequence to sequence foundations

The encoder decoder architecture emerged from sequence to sequence (seq2seq) models, originally designed for machine translation. In a basic seq2seq model, the encoder processes the entire input sequence and compresses it into a fixed-size context vector. The decoder then uses this context vector to generate the output sequence.

The mathematical formulation of this process involves:

For the encoder, at each time step \(t\), we compute:

$$ h_t = f_{enc}(x_t, h_{t-1}) $$

where \(x_t\) is the input at time \(t\), \(h_{t-1}\) is the previous hidden state, and \(f_{enc}\) is the encoder function (typically an LSTM or GRU cell).

The context vector \(c\) is derived from the encoder states by some function \(q\). The simplest choice is the last state, \(c = h_T\):

$$ c = q(\{h_1, h_2, \ldots, h_T\}) $$

For the decoder, at each generation step \(t'\):

$$ s_{t'} = f_{dec}(y_{t'-1}, s_{t'-1}, c) $$

$$ P(y_{t'} \mid y_1, \ldots, y_{t'-1}, x) = g(s_{t'}, y_{t'-1}, c) $$

where \(s_{t'}\) is the decoder hidden state, \(y_{t'-1}\) is the previously generated token, and \(g\) is the output function (typically a linear layer followed by a softmax over the vocabulary).
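These recurrences can be sketched with two `nn.GRUCell`s. This is a minimal illustration under stated assumptions: \(q\) returns the last encoder state (\(c = h_T\)), the decoder state starts from \(c\), and \(g\) is a single linear layer over \([s_{t'}; y_{t'-1}; c]\). The class name and sizes are hypothetical.

```python
import torch
import torch.nn as nn


class TinySeq2Seq(nn.Module):
    def __init__(self, src_vocab: int, tgt_vocab: int, emb: int = 32, hid: int = 64):
        super().__init__()
        self.src_emb = nn.Embedding(src_vocab, emb)
        self.tgt_emb = nn.Embedding(tgt_vocab, emb)
        self.enc_cell = nn.GRUCell(emb, hid)              # f_enc
        self.dec_cell = nn.GRUCell(emb + hid, hid)        # f_dec, input is [y_{t'-1}; c]
        self.out = nn.Linear(hid + emb + hid, tgt_vocab)  # g(s_{t'}, y_{t'-1}, c)

    def forward(self, src: torch.Tensor, tgt_in: torch.Tensor) -> torch.Tensor:
        batch, src_len = src.shape
        # Encoder: h_t = f_enc(x_t, h_{t-1})
        h = torch.zeros(batch, self.enc_cell.hidden_size, device=src.device)
        for t in range(src_len):
            h = self.enc_cell(self.src_emb(src[:, t]), h)
        c = h  # assumption: q({h_1, ..., h_T}) = h_T

        # Decoder: s_{t'} = f_dec(y_{t'-1}, s_{t'-1}, c), fed the previous target tokens
        s = c  # assumption: initial decoder state is the context vector
        logits = []
        for t in range(tgt_in.size(1)):
            y_prev = self.tgt_emb(tgt_in[:, t])
            s = self.dec_cell(torch.cat([y_prev, c], dim=-1), s)
            # Logits; a softmax over them gives P(y_{t'} | y_<t', x)
            logits.append(self.out(torch.cat([s, y_prev, c], dim=-1)))
        return torch.stack(logits, dim=1)  # [batch, tgt_len, tgt_vocab]


model = TinySeq2Seq(src_vocab=50, tgt_vocab=40)
src = torch.randint(0, 50, (2, 7))     # source length 7
tgt_in = torch.randint(0, 40, (2, 5))  # target length 5: lengths may differ
print(model(src, tgt_in).shape)  # torch.Size([2, 5, 40])
```

Every output step sees the same \(c\). That single vector is the bottleneck the next section addresses.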

The attention mechanism revolution

Early encoder decoder models suffered from a bottleneck problem: compressing an entire input sequence into a single fixed-size vector lost important information. The attention mechanism solved this by allowing the decoder to “attend” to different parts of the input at each generation step.

The attention mechanism computes a separate context vector for each decoder step as a weighted sum of the encoder states. This \(c_{t'}\) takes the place of the single \(c\) in the decoder equations:

$$ c_{t'} = \sum_{i=1}^{T} \alpha_{t',i} h_i $$

where the attention weights \(\alpha_{t',i}\) are computed as:

$$ \alpha_{t',i} = \frac{\exp(e_{t',i})}{\sum_{k=1}^{T} \exp(e_{t',k})} $$

$$ e_{t',i} = a(s_{t'-1}, h_i) $$

Here, \(a\) is an alignment model (often a small neural network) that scores how well the decoder state \(s_{t'-1}\) matches the encoder state \(h_i\).

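Here's an implementation of additive (Bahdanau-style) attention, where the alignment model \(a\) is a one-hidden-layer network with a tanh activation: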

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class AttentionMechanism(nn.Module):
    def __init__(self, hidden_dim):
        super(AttentionMechanism, self).__init__()
        self.attention = nn.Linear(hidden_dim * 2, hidden_dim)
        self.v = nn.Linear(hidden_dim, 1, bias=False)

    def forward(self, decoder_hidden, encoder_outputs):
        # decoder_hidden: [batch, hidden_dim]
        # encoder_outputs: [batch, seq_len, hidden_dim]
        seq_len = encoder_outputs.size(1)
        # Repeat decoder hidden state for each encoder output
        decoder_hidden = decoder_hidden.unsqueeze(1).repeat(1, seq_len, 1)
        # Concatenate and compute energy
        energy = torch.tanh(self.attention(
            torch.cat((decoder_hidden, encoder_outputs), dim=2)
        ))
        # Get attention weights (alignment scores e_{t',i}, normalized over positions)
        attention_weights = F.softmax(self.v(energy).squeeze(2), dim=1)
        # Compute context vector as the weighted sum of encoder outputs
        context = torch.bmm(attention_weights.unsqueeze(1), encoder_outputs)
        return context.squeeze(1), attention_weights


# Example usage
hidden_dim = 512
attention = AttentionMechanism(hidden_dim)
decoder_hidden = torch.randn(2, hidden_dim)        # Batch size 2
encoder_outputs = torch.randn(2, 10, hidden_dim)   # Sequence length 10
context, weights = attention(decoder_hidden, encoder_outputs)
print(f"Context shape: {context.shape}")            # [2, 512]
print(f"Attention weights shape: {weights.shape}")  # [2, 10]
```

Modern encoder decoder implementations

Modern encoder decoder architectures have evolved significantly beyond simple RNN-based models. Today’s implementations often use:

- Transformer encoder blocks: Stack multiple self-attention and feed-forward layers

- Positional encodings: Add position information since transformers don’t inherently understand sequence order

- Layer normalization: Stabilize training and improve convergence

- Residual connections: Allow gradients to flow more easily through deep networks
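PyTorch bundles these components (stacked attention and feed-forward layers, layer normalization, residual connections) in `torch.nn.Transformer`. The sketch below wires it up with token embeddings, an output projection, and a causal target mask. The vocabulary sizes and layer counts are arbitrary. `nn.Transformer` does not add positional encodings itself, so a real model would add them to the embeddings first; they are omitted here for brevity.

```python
import math

import torch
import torch.nn as nn

d_model, src_vocab, tgt_vocab = 128, 1000, 800
src_embed = nn.Embedding(src_vocab, d_model)
tgt_embed = nn.Embedding(tgt_vocab, d_model)
transformer = nn.Transformer(
    d_model=d_model, nhead=4, num_encoder_layers=2, num_decoder_layers=2,
    dim_feedforward=4 * d_model, dropout=0.1, batch_first=True,
)
generator = nn.Linear(d_model, tgt_vocab)  # projects decoder states to vocabulary logits

src = torch.randint(0, src_vocab, (2, 12))  # [batch, src_len]
tgt = torch.randint(0, tgt_vocab, (2, 9))   # [batch, tgt_len]
# Causal mask: -inf above the diagonal blocks attention to future target positions
tgt_mask = torch.triu(torch.full((9, 9), float("-inf")), diagonal=1)

# Positional encodings omitted for brevity
out = transformer(
    src_embed(src) * math.sqrt(d_model),
    tgt_embed(tgt) * math.sqrt(d_model),
    tgt_mask=tgt_mask,
)
logits = generator(out)
print(logits.shape)  # torch.Size([2, 9, 800])
```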

3. Transformer encoder architecture

Self-attention in transformer encoders

The transformer encoder revolutionized natural language processing by replacing recurrent connections with self-attention mechanisms. Unlike RNNs that process sequences sequentially, transformer encoders can attend to all positions simultaneously, enabling parallel computation and better long-range dependency modeling.

The core of a transformer encoder is the multi-head self-attention mechanism. For an input sequence, we compute three matrices: Query (Q), Key (K), and Value (V):

$$ Q = XW^Q, \quad K = XW^K, \quad V = XW^V $$

where \(X\) is the input embedding matrix and \(W^Q, W^K, W^V\) are learned weight matrices.

The attention output is computed as:

$$ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V $$

where \(d_k\) is the dimension of the key vectors. Without the \(1/\sqrt{d_k}\) scaling, dot products grow in magnitude with \(d_k\). That pushes the softmax into regions where its gradients are extremely small.
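The formula maps almost line for line onto tensor operations. This is a minimal single-head sketch. Random matrices stand in for the learned \(W^Q, W^K, W^V\), and the sizes are arbitrary.

```python
import math

import torch


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for tensors shaped [batch, seq, dim]."""
    d_k = Q.size(-1)
    scores = Q @ K.transpose(-2, -1) / math.sqrt(d_k)  # [batch, seq_q, seq_k]
    weights = torch.softmax(scores, dim=-1)            # each row sums to 1
    return weights @ V, weights


torch.manual_seed(0)
batch, seq_len, d_model, d_k = 2, 5, 16, 8
X = torch.randn(batch, seq_len, d_model)
W_Q, W_K, W_V = (torch.randn(d_model, d_k) for _ in range(3))  # stand-ins for learned weights
Q, K, V = X @ W_Q, X @ W_K, X @ W_V

output, weights = scaled_dot_product_attention(Q, K, V)
print(output.shape, weights.shape)  # torch.Size([2, 5, 8]) torch.Size([2, 5, 5])
```

Each row of `weights` is a probability distribution over the sequence. The output at each position is the corresponding weighted average of the value vectors.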

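Multi-head attention runs \(h\) such attention functions in parallel, each with its own learned projections \(W_i^Q, W_i^K, W_i^V\). It concatenates the results, and \(W^O\) projects the concatenation back to the model dimension. In self-attention, the queries, keys, and values all come from the same input \(X\):

$$ \text{MultiHead}(X) = \text{Concat}(\text{head}_1, \ldots, \text{head}_h)W^O $$

$$ \text{head}_i = \text{Attention}(XW_i^Q, XW_i^K, XW_i^V) $$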
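In practice the \(h\) heads are often computed in one batched operation. Each of the three inputs is projected once with a \(d_{model} \times d_{model}\) matrix, then reshaped so that each head works on a \(d_{model}/h\) slice. The sketch below assumes that common layout. The class name is hypothetical, and this is not the internals of any particular library.

```python
import math

import torch
import torch.nn as nn


class MultiHeadSelfAttention(nn.Module):
    def __init__(self, d_model: int, num_heads: int):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.h, self.d_k = num_heads, d_model // num_heads
        self.W_q = nn.Linear(d_model, d_model)  # all heads' W_i^Q stacked side by side
        self.W_k = nn.Linear(d_model, d_model)
        self.W_v = nn.Linear(d_model, d_model)
        self.W_o = nn.Linear(d_model, d_model)  # W^O

    def split_heads(self, x):
        # [batch, seq, d_model] -> [batch, heads, seq, d_k]
        b, n, _ = x.shape
        return x.view(b, n, self.h, self.d_k).transpose(1, 2)

    def forward(self, X):
        Q, K, V = (self.split_heads(proj(X)) for proj in (self.W_q, self.W_k, self.W_v))
        scores = Q @ K.transpose(-2, -1) / math.sqrt(self.d_k)  # per-head scores
        heads = torch.softmax(scores, dim=-1) @ V                # [batch, heads, seq, d_k]
        b, _, n, _ = heads.shape
        # Concat(head_1, ..., head_h): move heads next to d_k and flatten
        concat = heads.transpose(1, 2).reshape(b, n, self.h * self.d_k)
        return self.W_o(concat)


mha = MultiHeadSelfAttention(d_model=64, num_heads=8)
X = torch.randn(2, 10, 64)
print(mha(X).shape)  # torch.Size([2, 10, 64])
```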

Encoder layers structure

Each transformer encoder layer consists of two sub-layers:

- Multi-head self-attention: Allows each position to attend to all positions in the previous layer

- Position-wise feed-forward network: Applies two linear transformations with a ReLU activation in between, independently at each position:

$$ \text{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2 $$

Both sub-layers use residual connections and layer normalization:

$$ \text{Output} = \text{LayerNorm}(x + \text{Sublayer}(x)) $$

Here’s a simplified transformer encoder implementation:

```python
import math

import torch
import torch.nn as nn


class TransformerEncoderLayer(nn.Module):
    def __init__(self, d_model, num_heads, d_ff, dropout=0.1):
        super(TransformerEncoderLayer, self).__init__()
        # batch_first=True so that inputs are [batch, seq_len, d_model]
        self.self_attention = nn.MultiheadAttention(
            d_model, num_heads, dropout=dropout, batch_first=True
        )
        self.feed_forward = nn.Sequential(
            nn.Linear(d_model, d_ff),
            nn.ReLU(),
            nn.Linear(d_ff, d_model),
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, mask=None):
        # Self-attention with residual connection and layer normalization
        attn_output, _ = self.self_attention(x, x, x, attn_mask=mask)
        x = self.norm1(x + self.dropout(attn_output))
        # Feed-forward with residual connection and layer normalization
        ff_output = self.feed_forward(x)
        x = self.norm2(x + self.dropout(ff_output))
        return x


class TransformerEncoder(nn.Module):
    def __init__(self, vocab_size, d_model, num_heads, d_ff, num_layers, max_seq_len):
        super(TransformerEncoder, self).__init__()
        self.embedding = nn.Embedding(vocab_size, d_model)
        # Registered as a buffer: follows the module across devices, but is not trained
        self.register_buffer(
            "positional_encoding", self.create_positional_encoding(max_seq_len, d_model)
        )
        self.layers = nn.ModuleList([
            TransformerEncoderLayer(d_model, num_heads, d_ff)
            for _ in range(num_layers)
        ])
        self.scale = math.sqrt(d_model)

    def create_positional_encoding(self, max_seq_len, d_model):
        # Sinusoidal encodings: sine on even dimensions, cosine on odd dimensions
        pe = torch.zeros(max_seq_len, d_model)
        position = torch.arange(0, max_seq_len).unsqueeze(1).float()
        div_term = torch.exp(torch.arange(0, d_model, 2).float() *
                             -(math.log(10000.0) / d_model))
        pe[:, 0::2] = torch.sin(position * div_term)
        pe[:, 1::2] = torch.cos(position * div_term)
        return pe.unsqueeze(0)  # [1, max_seq_len, d_model]

    def forward(self, x):
        seq_len = x.size(1)
        # Embed and scale
        x = self.embedding(x) * self.scale
        # Add positional encoding
        x = x + self.positional_encoding[:, :seq_len, :]
        # Pass through encoder layers
        for layer in self.layers:
            x = layer(x)
        return x


# Example usage
vocab_size = 10000
encoder = TransformerEncoder(
    vocab_size=vocab_size,
    d_model=512,
    num_heads=8,
    d_ff=2048,
    num_layers=6,
    max_seq_len=100,
)
input_tokens = torch.randint(0, vocab_size, (2, 20))  # Batch 2, seq len 20
encoded = encoder(input_tokens)
print(f"Encoded output shape: {encoded.shape}")  # [2, 20, 512]
```

Bidirectional encoding

One of the key advantages of transformer encoders is bidirectional encoding. Left-to-right language models only see preceding tokens. A transformer encoder without a causal mask instead lets every position attend to context on both sides simultaneously. This bidirectional nature makes such encoders particularly effective for understanding tasks like classification, named entity recognition, and question answering.

The bidirectional context allows the model to compute representations like:

$$ h_i = f(x_1, x_2, \ldots, x_i, \ldots, x_n) $$

where each position \(i\) has access to the entire sequence, not just preceding tokens.
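One way to see the difference is to perturb a later token and check whether an earlier position's output changes. This sketch uses PyTorch's `nn.TransformerEncoderLayer` with random weights and dropout disabled. Passing a causal mask turns the same layer into a left-to-right model.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
layer = nn.TransformerEncoderLayer(d_model=32, nhead=4, dropout=0.0, batch_first=True).eval()

x = torch.randn(1, 6, 32)
x_changed = x.clone()
x_changed[0, 5] += 1.0  # perturb only the last position

causal_mask = torch.triu(torch.full((6, 6), float("-inf")), diagonal=1)

with torch.no_grad():
    # Bidirectional: position 0 attends to position 5, so its output moves
    bi_diff = (layer(x)[0, 0] - layer(x_changed)[0, 0]).abs().max().item()
    # Causal: position 0 only attends to itself, so its output is unchanged
    causal_diff = (layer(x, src_mask=causal_mask)[0, 0]
                   - layer(x_changed, src_mask=causal_mask)[0, 0]).abs().max().item()

print(bi_diff > 0, causal_diff < 1e-6)  # True True
```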

4. Decoder architecture and variants

Autoregressive generation

Decoders generate sequences one token at a time in an autoregressive manner. At each step, the decoder predicts the next token based on all previously generated tokens:

$$ P(y_1, y_2, \ldots, y_T \mid x) = \prod_{t=1}^{T} P(y_t \mid y_1, \ldots, y_{t-1}, x) $$

This autoregressive property requires masking in the self-attention mechanism to prevent the decoder from “cheating” by looking at future tokens during training.
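During training the whole target is available, so all the factors \(P(y_t \mid y_1, \ldots, y_{t-1}, x)\) can be scored in one parallel pass. This is known as teacher forcing. The decoder input is the target shifted right by one position behind a start token. The loss is the summed negative log-probability of each true next token. The sketch below shows that bookkeeping with random logits standing in for real decoder output. The `BOS` id and vocabulary size are hypothetical.

```python
import torch
import torch.nn.functional as F

BOS, vocab_size = 1, 12
target = torch.tensor([[5, 7, 3, 2]])  # y_1 .. y_T for one example

# Decoder input is the target shifted right: [BOS, y_1, ..., y_{T-1}]
decoder_input = torch.cat([torch.full((1, 1), BOS, dtype=torch.long), target[:, :-1]], dim=1)
print(decoder_input.tolist())  # [[1, 5, 7, 3]]

torch.manual_seed(0)
logits = torch.randn(1, target.size(1), vocab_size)  # stand-in for decoder(decoder_input, x)

# log P(y_1..y_T | x) = sum over t of log P(y_t | y_<t, x)
log_probs = F.log_softmax(logits, dim=-1)
seq_log_prob = log_probs.gather(-1, target.unsqueeze(-1)).sum()

# Summed cross-entropy equals the negative sequence log-likelihood
nll = F.cross_entropy(logits.view(-1, vocab_size), target.view(-1), reduction="sum")
print(torch.allclose(-seq_log_prob, nll))  # True
```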

Masked self-attention

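The decoder uses masked (or causal) self-attention so that the prediction for position \(i\) can depend only on known outputs at positions before \(i\). The decoder input is the target sequence shifted one position to the right. As a result, each input position may attend to itself and earlier positions, but not to later ones. The mask is typically implemented as:

$$ \text{Mask}_{i,j} = \begin{cases} 0 & \text{if } j \leq i \\ -\infty & \text{if } j > i \end{cases} $$

The mask is added to the attention scores \(QK^T/\sqrt{d_k}\) before the softmax. Since \(\exp(-\infty) = 0\), every future position receives exactly zero attention weight.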
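A small numeric check makes the effect visible. Add the mask to a matrix of equal (all-zero) scores and apply a row-wise softmax. Each position then attends uniformly to itself and earlier positions, with exactly zero weight on later ones.

```python
import torch

n = 4
mask = torch.triu(torch.full((n, n), float("-inf")), diagonal=1)  # 0 where j <= i, -inf where j > i
scores = torch.zeros(n, n)  # pretend every query-key pair scores equally
weights = torch.softmax(scores + mask, dim=-1)
print(weights)
# tensor([[1.0000, 0.0000, 0.0000, 0.0000],
#         [0.5000, 0.5000, 0.0000, 0.0000],
#         [0.3333, 0.3333, 0.3333, 0.0000],
#         [0.2500, 0.2500, 0.2500, 0.2500]])
```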

Cross-attention between encoder and decoder

The decoder’s cross-attention layer allows it to attend to the encoder’s output. This is where the decoder “looks at” the input sequence while generating output. The queries come from the decoder, while keys and values come from the encoder:

$$ \text{CrossAttention} = \text{softmax}\left(\frac{Q_{dec}K_{enc}^T}{\sqrt{d_k}}\right)V_{enc} $$
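Queries and keys come from different sequences, so the cross-attention weight matrix is rectangular: one row per target position and one column per source position. A quick shape check with `nn.MultiheadAttention` (random weights; `batch_first=True` so tensors are `[batch, seq, d_model]`):

```python
import torch
import torch.nn as nn

d_model = 64
cross_attn = nn.MultiheadAttention(d_model, num_heads=8, batch_first=True)

decoder_states = torch.randn(2, 10, d_model)  # queries: 10 target positions
encoder_output = torch.randn(2, 15, d_model)  # keys and values: 15 source positions

out, attn_weights = cross_attn(decoder_states, encoder_output, encoder_output)
print(out.shape)           # torch.Size([2, 10, 64])
print(attn_weights.shape)  # torch.Size([2, 10, 15]), averaged over heads by default
```

The output keeps the decoder's sequence length. Each target position receives a mixture of encoder states, weighted by how relevant each source position is to it.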

Here's a complete encoder-decoder implementation. It reuses the TransformerEncoder class from the previous example:

```python
import math

import torch
import torch.nn as nn

# Reuses the TransformerEncoder class defined in the previous example.


class TransformerDecoderLayer(nn.Module):
    def __init__(self, d_model, num_heads, d_ff, dropout=0.1):
        super(TransformerDecoderLayer, self).__init__()
        # batch_first=True so that inputs are [batch, seq_len, d_model]
        self.self_attention = nn.MultiheadAttention(
            d_model, num_heads, dropout=dropout, batch_first=True
        )
        self.cross_attention = nn.MultiheadAttention(
            d_model, num_heads, dropout=dropout, batch_first=True
        )
        self.feed_forward = nn.Sequential(
            nn.Linear(d_model, d_ff),
            nn.ReLU(),
            nn.Linear(d_ff, d_model),
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, encoder_output, tgt_mask=None, memory_mask=None):
        # Masked (causal) self-attention over the target sequence
        attn_output, _ = self.self_attention(x, x, x, attn_mask=tgt_mask)
        x = self.norm1(x + self.dropout(attn_output))
        # Cross-attention: queries from the decoder, keys/values from the encoder
        attn_output, _ = self.cross_attention(x, encoder_output, encoder_output,
                                              attn_mask=memory_mask)
        x = self.norm2(x + self.dropout(attn_output))
        # Position-wise feed-forward
        ff_output = self.feed_forward(x)
        x = self.norm3(x + self.dropout(ff_output))
        return x


class EncoderDecoderTransformer(nn.Module):
    def __init__(self, src_vocab_size, tgt_vocab_size, d_model, num_heads,
                 d_ff, num_encoder_layers, num_decoder_layers, max_seq_len):
        super(EncoderDecoderTransformer, self).__init__()
        self.encoder = TransformerEncoder(
            src_vocab_size, d_model, num_heads, d_ff,
            num_encoder_layers, max_seq_len
        )
        self.tgt_embedding = nn.Embedding(tgt_vocab_size, d_model)
        self.decoder_layers = nn.ModuleList([
            TransformerDecoderLayer(d_model, num_heads, d_ff)
            for _ in range(num_decoder_layers)
        ])
        self.output_projection = nn.Linear(d_model, tgt_vocab_size)
        self.d_model = d_model

    def generate_square_subsequent_mask(self, sz):
        # 0 on and below the diagonal, -inf above it (future positions)
        mask = torch.triu(torch.ones(sz, sz), diagonal=1)
        mask = mask.masked_fill(mask == 1, float('-inf'))
        return mask

    def forward(self, src, tgt):
        # Encode source
        encoder_output = self.encoder(src)
        # Embed target, add the same sinusoidal positional encoding, build causal mask
        tgt_len = tgt.size(1)
        tgt_embedded = self.tgt_embedding(tgt) * math.sqrt(self.d_model)
        tgt_embedded = tgt_embedded + self.encoder.positional_encoding[:, :tgt_len, :].to(tgt.device)
        tgt_mask = self.generate_square_subsequent_mask(tgt_len).to(tgt.device)
        # Decode
        decoder_output = tgt_embedded
        for layer in self.decoder_layers:
            decoder_output = layer(decoder_output, encoder_output, tgt_mask=tgt_mask)
        # Project to vocabulary logits
        return self.output_projection(decoder_output)


# Example usage
model = EncoderDecoderTransformer(
    src_vocab_size=10000,
    tgt_vocab_size=8000,
    d_model=512,
    num_heads=8,
    d_ff=2048,
    num_encoder_layers=6,
    num_decoder_layers=6,
    max_seq_len=100,
)
src = torch.randint(0, 10000, (2, 15))  # Source sequence
tgt = torch.randint(0, 8000, (2, 10))   # Target sequence
output = model(src, tgt)
print(f"Output shape: {output.shape}")  # [2, 10, 8000]
```

5. Cross encoder vs bi-encoder architectures

Understanding cross encoders

A cross encoder processes two input sequences simultaneously, allowing rich interactions between them from the very first layer. Cross encoders concatenate or jointly process input pairs through the encoder, making them ideal for tasks requiring fine-grained comparison like semantic similarity, natural language inference, or question-answer matching.

In a cross encoder:

$$ \text{score} = f_{cross}([x_1; x_2]) $$

where \([x_1; x_2]\) represents the concatenated input and \(f_{cross}\) is the encoder function.
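In practice the pair is usually packed into one sequence with special tokens, in the BERT-style `[CLS] text1 [SEP] text2 [SEP]` layout. Optional segment ids mark which text each token came from. A minimal sketch of that packing; the special-token ids and the function name are hypothetical values chosen for illustration.

```python
from typing import List, Tuple

CLS_ID, SEP_ID = 101, 102  # hypothetical special-token ids


def pack_pair(ids1: List[int], ids2: List[int]) -> Tuple[List[int], List[int]]:
    """Build [CLS] ids1 [SEP] ids2 [SEP] plus segment ids (0 for text1, 1 for text2)."""
    input_ids = [CLS_ID] + ids1 + [SEP_ID] + ids2 + [SEP_ID]
    segment_ids = [0] * (len(ids1) + 2) + [1] * (len(ids2) + 1)
    return input_ids, segment_ids


ids, segs = pack_pair([7, 8, 9], [20, 21])
print(ids)   # [101, 7, 8, 9, 102, 20, 21, 102]
print(segs)  # [0, 0, 0, 0, 0, 1, 1, 1]
```

Each pair needs its own packed sequence and its own full forward pass. That is why cross encoders become expensive as the number of pairs grows.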

Bi-encoder approach

Bi-encoders, by contrast, encode each input independently and then compare the resulting embeddings. This architecture is more efficient for retrieval tasks because you can pre-compute and cache embeddings:

$$ e_1 = f_{enc}(x_1), \quad e_2 = f_{enc}(x_2) $$

$$ \text{score} = \text{similarity}(e_1, e_2) $$

where similarity could be cosine similarity, the dot product, or negative Euclidean distance (negated so that closer embeddings score higher).
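The efficiency comes from precomputation. Document embeddings are computed once, and each query then costs one encoder pass plus a matrix product. A sketch with random unit vectors standing in for encoder outputs; a real system would use a trained encoder and, at large scale, an approximate nearest-neighbour index.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
num_docs, dim = 1000, 64

# Offline: encode the corpus once and cache L2-normalised embeddings
doc_embeddings = F.normalize(torch.randn(num_docs, dim), dim=-1)

# Online: encode the query (here, a noisy copy of document 42) and normalise it
query = F.normalize(doc_embeddings[42] + 0.1 * torch.randn(dim), dim=-1)

# For unit vectors, the dot product equals cosine similarity
scores = doc_embeddings @ query  # [num_docs]
top_scores, top_ids = scores.topk(k=5)
print(top_ids[0].item())  # 42
```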

Here’s a comparison implementation:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Both models below reuse the TransformerEncoder class defined earlier.


class CrossEncoder(nn.Module):
    def __init__(self, vocab_size, d_model, num_heads, num_layers):
        super(CrossEncoder, self).__init__()
        self.encoder = TransformerEncoder(
            vocab_size, d_model, num_heads,
            d_ff=d_model * 4, num_layers=num_layers, max_seq_len=512
        )
        self.classifier = nn.Linear(d_model, 1)

    def forward(self, input_ids):
        # Process concatenated input
        encoded = self.encoder(input_ids)
        # Use [CLS] token (first token) for classification
        cls_embedding = encoded[:, 0, :]
        score = self.classifier(cls_embedding)
        return score


class BiEncoder(nn.Module):
    def __init__(self, vocab_size, d_model, num_heads, num_layers):
        super(BiEncoder, self).__init__()
        self.encoder = TransformerEncoder(
            vocab_size, d_model, num_heads,
            d_ff=d_model * 4, num_layers=num_layers, max_seq_len=512
        )

    def encode(self, input_ids):
        # Encode single input
        encoded = self.encoder(input_ids)
        # Use mean pooling
        return encoded.mean(dim=1)

    def forward(self, input_ids1, input_ids2):
        # Encode both inputs separately
        emb1 = self.encode(input_ids1)
        emb2 = self.encode(input_ids2)
        # Compute cosine similarity
        similarity = F.cosine_similarity(emb1, emb2)
        return similarity


# Example: Cross-encoder
cross_encoder = CrossEncoder(vocab_size=10000, d_model=256, num_heads=8, num_layers=6)
# Concatenated input: [CLS] text1 [SEP] text2 [SEP]
combined_input = torch.randint(0, 10000, (4, 50))
cross_score = cross_encoder(combined_input)
print(f"Cross-encoder score shape: {cross_score.shape}")  # [4, 1]

# Example: Bi-encoder
bi_encoder = BiEncoder(vocab_size=10000, d_model=256, num_heads=8, num_layers=6)
text1 = torch.randint(0, 10000, (4, 25))
text2 = torch.randint(0, 10000, (4, 25))
bi_score = bi_encoder(text1, text2)
print(f"Bi-encoder similarity shape: {bi_score.shape}")  # [4]
```

When to use each architecture

Use cross encoders when:

- Accuracy is more important than speed

- You have relatively few pairs to compare

- You need deep interaction between inputs (semantic textual similarity, natural language inference)

- Real-time retrieval isn’t required

Use bi-encoders when:

- You need to search through millions of documents

- You can pre-compute embeddings

- Inference speed is critical

- You’re building semantic search or retrieval systems

6. Practical applications and data preprocessing

Machine translation pipeline

Machine translation is the classic application of encoder decoder architecture. The encoder processes the source language text, and the decoder generates the target language translation. Modern translation systems use attention mechanisms to align words and phrases across languages.

A complete translation pipeline involves:

- Tokenization: Breaking text into subword units using techniques like Byte-Pair Encoding (BPE) or WordPiece

- Encoding: Processing source text through the encoder

- Decoding: Generating target text autoregressively

- Beam search: Keeping several partial translation hypotheses at each step, rather than only the single most likely token, to find a higher-scoring output
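Beam search keeps the `beam_size` highest-scoring partial sequences at each step instead of committing to the single best token. The sketch below works with any `step_fn` that returns next-token log-probabilities for a prefix. The toy distribution is hypothetical, chosen so that beam search finds a sequence that greedy decoding misses. Production decoders add refinements such as length normalization.

```python
import math
from typing import Callable, List, Tuple


def beam_search(
    step_fn: Callable[[List[int]], List[float]],  # prefix -> log-probs over the vocabulary
    bos_id: int,
    eos_id: int,
    beam_size: int = 3,
    max_len: int = 10,
) -> Tuple[List[int], float]:
    beams = [([bos_id], 0.0)]  # (tokens, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for tok, logp in enumerate(step_fn(tokens)):
                candidates.append((tokens + [tok], score + logp))
        # Keep only the best `beam_size` extensions across all beams
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            (finished if tokens[-1] == eos_id else beams).append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # fall back to unfinished hypotheses if max_len is hit
    best_tokens, best_score = max(finished, key=lambda c: c[1])
    return best_tokens[1:], best_score


BOS, EOS, A, B = 0, 1, 2, 3
NEG_INF = float("-inf")


def toy_step(prefix: List[int]) -> List[float]:
    probs = {
        BOS: [0.0, 0.0, 0.6, 0.4],    # first token: A is more likely than B
        A:   [0.0, 0.5, 0.25, 0.25],  # after A, ending is only 50% likely
        B:   [0.0, 0.9, 0.05, 0.05],  # after B, ending is 90% likely
    }[prefix[-1]]
    return [math.log(p) if p > 0 else NEG_INF for p in probs]


tokens, score = beam_search(toy_step, BOS, EOS, beam_size=2)
print(tokens, round(math.exp(score), 2))  # [3, 1] 0.36
```

With `beam_size=1` the same function reduces to greedy decoding. It then returns `[A, EOS]` with probability 0.6 × 0.5 = 0.3, which is worse than the 0.4 × 0.9 = 0.36 that the wider beam finds.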

Data preprocessing techniques

Before feeding data into encoder decoder models, proper preprocessing is essential:

Label encoder: Converts categorical variables to integer labels. Useful for classification targets:

```python
from sklearn.preprocessing import LabelEncoder

# Example: Encoding sentiment labels
label_encoder = LabelEncoder()
sentiments = ['positive', 'negative', 'neutral', 'positive', 'negative']
encoded_labels = label_encoder.fit_transform(sentiments)
# Classes are sorted alphabetically: negative -> 0, neutral -> 1, positive -> 2
print(f"Encoded: {encoded_labels}")  # [2 0 1 2 0]
print(f"Classes: {label_encoder.classes_}")  # ['negative' 'neutral' 'positive']

# Decoding back
decoded = label_encoder.inverse_transform([2, 1, 0])
print(f"Decoded: {decoded}")  # ['positive' 'neutral' 'negative']
```

One hot encoder: Creates binary columns for each category. Useful when you don’t want to imply ordinal relationships:

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Example: One-hot encoding for language types
# scikit-learn >= 1.2 uses sparse_output; the older `sparse` argument was removed in 1.4
one_hot_encoder = OneHotEncoder(sparse_output=False)
languages = np.array(['English', 'Spanish', 'French', 'English']).reshape(-1, 1)
one_hot_encoded = one_hot_encoder.fit_transform(languages)
# Categories are sorted: ['English', 'French', 'Spanish']
print(f"One-hot shape: {one_hot_encoded.shape}")  # (4, 3)
print(f"First sample: {one_hot_encoded[0]}")  # [1. 0. 0.]
```

Text encoder strategies

Modern text encoders use subword tokenization (such as BPE or WordPiece). This keeps the vocabulary compact while still covering rare words. For simplicity, the toy encoder below works at the word level and maps unknown words to <UNK>:

```python
# Example: a simple word-level text encoder (real systems use subword tokenization)
class SimpleTextEncoder:
    def __init__(self, vocab):
        self.vocab = vocab
        self.token_to_id = {token: idx for idx, token in enumerate(vocab)}
        self.id_to_token = {idx: token for idx, token in enumerate(vocab)}

    def encode(self, text):
        # Lowercase and split on whitespace; unknown words map to <UNK>
        tokens = text.lower().split()
        return [self.token_to_id.get(token, self.token_to_id['<UNK>'])
                for token in tokens]

    def decode(self, token_ids):
        return ' '.join(self.id_to_token.get(idx, '<UNK>') for idx in token_ids)


# Example usage
vocab = ['<PAD>', '<UNK>', '<SOS>', '<EOS>', 'hello', 'world', 'how', 'are', 'you']
encoder = SimpleTextEncoder(vocab)
text = "hello world how are you"
encoded = encoder.encode(text)
print(f"Encoded: {encoded}")  # [4, 5, 6, 7, 8]
decoded = encoder.decode(encoded)
print(f"Decoded: {decoded}")  # hello world how are you
```
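Byte-Pair Encoding, mentioned in the translation pipeline above, learns its subword vocabulary by repeatedly merging the most frequent adjacent symbol pair in a training corpus. Below is a minimal sketch of that learning loop on a toy word-frequency table. It is simplified: there is no end-of-word marker and no byte-level handling, and ties are broken by first occurrence. It is not a drop-in replacement for a production tokenizer, and the function name is hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def learn_bpe(word_freqs: Dict[str, int], num_merges: int) -> List[Tuple[str, str]]:
    # Start with every word split into single characters
    corpus = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # most frequent pair (first on ties)
        merges.append(best)
        # Replace every occurrence of the best pair with one merged symbol
        new_corpus = {}
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] = freq
        corpus = new_corpus
    return merges


merges = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=3)
print(merges)  # [('e', 's'), ('es', 't'), ('l', 'o')]
```

Frequent fragments such as "est" become single vocabulary entries. Rare words can still be spelled out from smaller pieces instead of collapsing to <UNK>.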

Real-world applications

Summarization: Encoder-decoder models excel at abstractive summarization, where the encoder understands the full document and the decoder generates a concise summary.

Chatbots and dialogue systems: The encoder processes user input and conversation history, while the decoder generates contextually appropriate responses.

Code generation: Encoder-decoder models such as CodeT5 have been applied to generating code from natural language descriptions. Many widely used coding assistants, including GitHub Copilot, are instead built on decoder-only models.

Image captioning: While we’ve focused on text, encoder-decoder architectures also work with vision encoders (CNNs or Vision Transformers) paired with text decoders to generate image descriptions.

7. Conclusion

The encoder decoder architecture represents one of the most versatile and powerful patterns in modern AI. By separating the understanding phase (encoder) from the generation phase (decoder), this architecture elegantly handles sequence-to-sequence tasks across diverse domains. Whether you’re working with transformer encoders for deep bidirectional understanding, cross encoders for precise similarity scoring, or full encoder-decoder models for translation and summarization, mastering these concepts is essential for building sophisticated natural language processing systems.

As you implement these architectures in your own projects, remember that the attention mechanism is the key innovation that makes modern encoder-decoder models so effective. The ability to selectively focus on relevant parts of the input, whether through self-attention in encoders or cross-attention between encoder and decoder, enables models to capture complex relationships and generate high-quality outputs. With the code examples and mathematical foundations provided in this guide, you’re well-equipped to build and customize encoder decoder architectures for your specific AI applications.
