Multi-layer perceptron (MLP): Architecture and applications

The multi-layer perceptron (MLP) is one of the fundamental building blocks of modern machine learning. As a type of feedforward neural network, it is widely used for pattern recognition, classification, and regression tasks. Whether you’re building recommendation systems, image classifiers, or predictive models, understanding the MLP architecture is a useful foundation for any AI practitioner.

This guide moves from the basic perceptron model to full MLP implementations, covering both the theoretical foundations and practical applications in Python.

1. Understanding the perceptron: From single to multi-layer

The perceptron algorithm: A historical perspective

The perceptron algorithm, introduced by Frank Rosenblatt in the late 1950s, was one of the earliest trainable neural network models. A single-layer perceptron is the simplest form of artificial neural network, consisting of input nodes, weights, and a single output node. It computes a weighted sum of its inputs and applies an activation function to produce an output.

The mathematical representation of a single perceptron is:

$$y = f\left(\sum_{i=1}^{n} w_i x_i + b\right)$$

Where:

- \(x_i\) represents input features

- \(w_i\) represents weights

- \(b\) is the bias term

- \(f\) is the activation function

Here’s a simple implementation of a single perceptron in Python:

import numpy as np

class SinglePerceptron:
    def __init__(self, learning_rate=0.01, epochs=100):
        self.learning_rate = learning_rate
        self.epochs = epochs
        self.weights = None
        self.bias = None

    def activation(self, x):
        """Step activation function"""
        return 1 if x >= 0 else 0

    def fit(self, X, y):
        n_samples, n_features = X.shape
        self.weights = np.zeros(n_features)
        self.bias = 0

        for _ in range(self.epochs):
            for idx, x_i in enumerate(X):
                linear_output = np.dot(x_i, self.weights) + self.bias
                y_predicted = self.activation(linear_output)

                # Update weights and bias (no change when the prediction is correct)
                update = self.learning_rate * (y[idx] - y_predicted)
                self.weights += update * x_i
                self.bias += update

    def predict(self, X):
        linear_output = np.dot(X, self.weights) + self.bias
        return np.array([self.activation(x) for x in linear_output])

Limitations of single layer perceptrons

While the single layer perceptron can solve linearly separable problems, it faces significant limitations. The famous XOR problem demonstrates this constraint—a single perceptron cannot classify XOR patterns because they’re not linearly separable. This limitation led researchers to develop the multi-layer perceptron architecture.
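The contrast between a linearly separable problem and XOR can be checked directly. The sketch below is a minimal, self-contained illustration rather than the class shown earlier: the helper names `train_perceptron` and `accuracy` are assumptions introduced here, and the learning rate of 1.0 is chosen only to keep the arithmetic exact.

```python
import numpy as np

def train_perceptron(X, y, learning_rate=1.0, epochs=50):
    """Train a single perceptron with the classic update rule (hypothetical helper)."""
    weights = np.zeros(X.shape[1])
    bias = 0.0
    for _ in range(epochs):
        for x_i, target in zip(X, y):
            prediction = 1 if np.dot(x_i, weights) + bias >= 0 else 0
            update = learning_rate * (target - prediction)
            weights += update * x_i
            bias += update
    return weights, bias

def accuracy(X, y, weights, bias):
    """Fraction of samples classified correctly by the step-activated unit."""
    predictions = (X @ weights + bias >= 0).astype(int)
    return float(np.mean(predictions == y))

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])  # linearly separable
y_xor = np.array([0, 1, 1, 0])  # not linearly separable

and_acc = accuracy(X, y_and, *train_perceptron(X, y_and))
xor_acc = accuracy(X, y_xor, *train_perceptron(X, y_xor))

print(f"AND accuracy: {and_acc:.2f}")
print(f"XOR accuracy: {xor_acc:.2f}")
```

No choice of weights and bias classifies all four XOR points correctly, so the XOR accuracy stays below 1.0 however long training runs.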

The multi-layer perceptron architecture

The multilayer perceptron overcomes the limitations of single perceptrons by introducing hidden layers between input and output layers. This architecture enables the network to learn non-linear relationships and solve complex problems. The key components of an MLP include:

- Input layer: Receives the raw features

- Hidden layers: One or more layers that transform inputs through weighted connections

- Output layer: Produces final predictions

- Activation functions: Introduce non-linearity at each layer

- Weights and biases: Learnable parameters connecting neurons

2. MLP architecture and mathematical foundations

Layer-by-layer computation

In a multi-layer perceptron, information flows forward through the network. For a network with one hidden layer, the computation proceeds as follows:

Hidden layer activation:

$$h = f_1(W_1 \cdot x + b_1)$$

Output layer activation:

$$\hat{y} = f_2(W_2 \cdot h + b_2)$$

Where:

- \(W_1, W_2\) are weight matrices

- \(b_1, b_2\) are bias vectors

- \(f_1, f_2\) are activation functions

- \(h\) is the hidden layer output
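The two equations above translate almost line for line into NumPy. This is a rough sketch under stated assumptions: the layer sizes, the random initialization, and the choice of ReLU for \(f_1\) and softmax for \(f_2\) are illustrative picks, not something the equations prescribe.

```python
import numpy as np

rng = np.random.default_rng(0)

n_inputs, n_hidden, n_outputs = 4, 5, 3  # illustrative sizes (assumption)

# Parameters follow the column-vector convention of the equations: h = f1(W1 x + b1)
W1 = rng.normal(scale=0.1, size=(n_hidden, n_inputs))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.1, size=(n_outputs, n_hidden))
b2 = np.zeros(n_outputs)

def relu(z):
    return np.maximum(0, z)

def softmax(z):
    exp_z = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return exp_z / exp_z.sum()

x = rng.normal(size=n_inputs)   # one input sample
h = relu(W1 @ x + b1)           # hidden layer activation
y_hat = softmax(W2 @ h + b2)    # output layer activation (class probabilities)

print("hidden shape:", h.shape)
print("output shape:", y_hat.shape)
print("probabilities sum to:", y_hat.sum())
```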

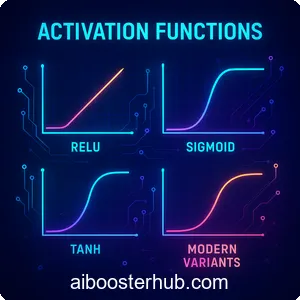

Activation functions in MLPs

Activation functions are crucial for enabling perceptrons to learn complex patterns. Common activation functions include:

ReLU (Rectified Linear Unit):

$$f(x) = \max(0, x)$$

Sigmoid:

$$f(x) = \frac{1}{1 + e^{-x}}$$

Tanh:

$$f(x) = \frac{e^x - e^{-x}}{e^x + e^{-x}}$$

Softmax (for multi-class output):

$$f(x_i) = \frac{e^{x_i}}{\sum_{j} e^{x_j}}$$
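Each of these formulas is a one-liner in NumPy. The sketch below is a minimal version for a single input vector; the max-subtraction inside `softmax` is a common numerical-stability trick added here and does not change the result.

```python
import numpy as np

def relu(x):
    return np.maximum(0, x)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def tanh(x):
    return np.tanh(x)

def softmax(x):
    shifted = x - np.max(x)  # stability: avoids overflow in exp for large inputs
    exp_x = np.exp(shifted)
    return exp_x / np.sum(exp_x)

z = np.array([-2.0, 0.0, 2.0])

print("ReLU:   ", relu(z))
print("Sigmoid:", sigmoid(z))
print("Tanh:   ", tanh(z))
print("Softmax:", softmax(z))
```

ReLU and tanh pass zero through unchanged, sigmoid maps zero to 0.5, and softmax always returns values that sum to 1.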

Backpropagation and gradient descent

The MLP model learns by adjusting weights through backpropagation. This algorithm computes gradients of the loss function with respect to each weight by applying the chain rule. The weight update rule follows:

$$w_{new} = w_{old} - \eta \frac{\partial L}{\partial w}$$

Where \(\eta\) is the learning rate and \(L\) is the loss function.
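To see the update rule in action without a full network, here is a toy sketch that minimizes a one-parameter loss. The loss \(L(w) = (w - 3)^2\), the starting point, and the learning rate are all assumptions chosen for illustration.

```python
def loss(w):
    return (w - 3.0) ** 2

def gradient(w):
    # dL/dw for L(w) = (w - 3)^2
    return 2.0 * (w - 3.0)

eta = 0.1   # learning rate
w = 0.0     # initial weight

for step in range(50):
    w = w - eta * gradient(w)   # w_new = w_old - eta * dL/dw

print(f"w after 50 steps: {w:.5f}")
print(f"loss after 50 steps: {loss(w):.2e}")
```

Each step shrinks the distance to the minimum at \(w = 3\) by a constant factor of 0.8. Raising `eta` above 1.0 in this example would make the iterates diverge instead, which is the instability that overly large learning rates cause.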

For classification tasks, we typically use cross-entropy loss:

$$L = -\sum_{i} y_i \log(\hat{y}_i)$$

For regression tasks, mean squared error is common:

$$L = \frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$$
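Both losses are short enough to compute by hand, which makes them easy to sanity-check. The sketch below assumes a one-hot target for cross-entropy; the small `eps` clip is an addition that guards against `log(0)`, and the sample values are made up for illustration.

```python
import numpy as np

def cross_entropy(y_true, y_prob, eps=1e-12):
    """Cross-entropy for one sample: y_true is one-hot, y_prob are predicted probabilities."""
    y_prob = np.clip(y_prob, eps, 1.0)
    return -np.sum(y_true * np.log(y_prob))

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return np.mean((y_true - y_pred) ** 2)

# Classification: the true class is index 1 and the model assigns it probability 0.8
ce = cross_entropy(np.array([0.0, 1.0, 0.0]), np.array([0.1, 0.8, 0.1]))

# Regression: only the last prediction is off, by 2
mse = mean_squared_error(np.array([1.0, 2.0, 3.0]), np.array([1.0, 2.0, 5.0]))

print(f"Cross-entropy: {ce:.4f}")
print(f"MSE: {mse:.4f}")
```

With a one-hot target, cross-entropy reduces to the negative log of the probability assigned to the true class, here \(-\log(0.8)\). The MSE is \((0 + 0 + 4)/3\).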

3. MLP classifier: Building classification models

Implementing an MLP classifier

The MLP classifier is widely used for classification tasks. Let’s build a complete example using scikit-learn’s MLPClassifier on a synthetic three-class dataset:

from sklearn.neural_network import MLPClassifier

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import accuracy_score, classification_report

import numpy as np

# Generate synthetic classification data

X, y = make_classification(n_samples=1000, n_features=20,

n_informative=15, n_redundant=5,

n_classes=3, random_state=42)

# Split the data

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, random_state=42

)

# Normalize features

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

# Create and train MLP classifier

mlp_clf = MLPClassifier(

hidden_layer_sizes=(100, 50), # Two hidden layers

activation='relu',

solver='adam',

max_iter=500,

learning_rate_init=0.001,

random_state=42

)

mlp_clf.fit(X_train_scaled, y_train)

# Make predictions

y_pred = mlp_clf.predict(X_test_scaled)

# Evaluate the model

accuracy = accuracy_score(y_test, y_pred)

print(f"Accuracy: {accuracy:.4f}")

print("\nClassification Report:")

print(classification_report(y_test, y_pred))

Hyperparameter tuning for MLP classifiers

The performance of an MLP classifier depends heavily on hyperparameter selection. Key hyperparameters include:

Hidden layer sizes: Determines the network depth and width. For example, (100, 50, 25) creates three hidden layers with 100, 50, and 25 neurons respectively.

Learning rate: Controls the step size during optimization. Too high leads to instability, too low results in slow convergence.

Batch size: The number of samples processed before updating weights. Smaller batches provide more frequent updates but with noisier gradients.

Regularization: L2 penalty (alpha parameter) prevents overfitting by penalizing large weights.
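To make the link between these hyperparameters and the fitted model concrete, the sketch below sets several of them explicitly and inspects the resulting weight matrices. The dataset and the specific values are illustrative assumptions, and `max_iter` is kept small only so the example runs quickly (which is why the convergence warning is silenced).

```python
import warnings

from sklearn.datasets import make_classification
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPClassifier

X, y = make_classification(n_samples=300, n_features=20,
                           n_informative=15, n_redundant=5,
                           n_classes=3, random_state=42)

clf = MLPClassifier(
    hidden_layer_sizes=(100, 50, 25),  # three hidden layers
    learning_rate_init=0.001,          # step size for the optimizer
    batch_size=32,                     # samples per weight update
    alpha=0.001,                       # L2 penalty strength
    max_iter=20,                       # short run, for illustration only
    random_state=42,
)

with warnings.catch_warnings():
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    clf.fit(X, y)

# One weight matrix per connection between consecutive layers
weight_shapes = [w.shape for w in clf.coefs_]
print(weight_shapes)
```

The shapes run from the 20 input features through the three hidden layers to the 3 output classes, so `hidden_layer_sizes` directly determines how many parameters the model has.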

Here’s an example of hyperparameter tuning using grid search:

from sklearn.model_selection import GridSearchCV

# Define parameter grid

param_grid = {

'hidden_layer_sizes': [(50,), (100,), (100, 50), (100, 50, 25)],

'activation': ['relu', 'tanh'],

'learning_rate_init': [0.001, 0.01],

'alpha': [0.0001, 0.001, 0.01]

}

# Create grid search

grid_search = GridSearchCV(

MLPClassifier(max_iter=500, random_state=42),

param_grid,

cv=5,

scoring='accuracy',

n_jobs=-1

)

# Fit grid search

grid_search.fit(X_train_scaled, y_train)

print("Best parameters:", grid_search.best_params_)

print("Best cross-validation score:", grid_search.best_score_)
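One refinement worth considering: when features are scaled before cross-validation, each validation fold has already influenced the scaler. Wrapping the scaler and the classifier in a Pipeline refits the scaler inside every fold. The sketch below is one way to do this, with a deliberately small grid and dataset (both assumptions) so that it runs quickly.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=400, n_features=20,
                           n_informative=15, n_redundant=5,
                           n_classes=3, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# The scaler is refit on the training portion of every CV fold
pipeline = Pipeline([
    ("scaler", StandardScaler()),
    ("mlp", MLPClassifier(max_iter=300, random_state=42)),
])

# Parameters of a pipeline step are addressed as <step name>__<parameter>
param_grid = {
    "mlp__hidden_layer_sizes": [(50,), (100, 50)],
    "mlp__alpha": [0.0001, 0.01],
}

search = GridSearchCV(pipeline, param_grid, cv=3, scoring="accuracy")
search.fit(X_train, y_train)

test_accuracy = search.score(X_test, y_test)
print("Best parameters:", search.best_params_)
print(f"Test accuracy of best model: {test_accuracy:.4f}")
```

Because GridSearchCV refits the best configuration on the full training set by default, `search` can be used directly for prediction on the held-out test data.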

Real-world classification example: Iris dataset

Let’s implement a complete MLP classifier for the famous Iris flower classification problem:

from sklearn.datasets import load_iris

import matplotlib.pyplot as plt

# Load iris dataset

iris = load_iris()

X, y = iris.data, iris.target

# Split and scale

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.3, random_state=42

)

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

# Train MLP

mlp_iris = MLPClassifier(

hidden_layer_sizes=(10, 5),

activation='relu',

solver='adam',

max_iter=1000,

random_state=42

)

mlp_iris.fit(X_train_scaled, y_train)

# Evaluate

train_accuracy = mlp_iris.score(X_train_scaled, y_train)

test_accuracy = mlp_iris.score(X_test_scaled, y_test)

print(f"Training Accuracy: {train_accuracy:.4f}")

print(f"Testing Accuracy: {test_accuracy:.4f}")

4. MLP regressor: Solving regression problems

Understanding the MLPRegressor

scikit-learn’s MLPRegressor applies the multi-layer perceptron architecture to regression tasks, where the goal is to predict continuous values rather than discrete classes. The primary differences lie in the output layer activation (the identity function, so outputs are unbounded) and the loss function (squared error).
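The difference in output activation can be read off a fitted estimator through its `out_activation_` attribute. The sketch below fits one small classifier and one small regressor on synthetic data; the datasets, layer size, and short `max_iter` are assumptions made to keep the check fast.

```python
import warnings

from sklearn.datasets import make_classification, make_regression
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPClassifier, MLPRegressor

X_clf, y_clf = make_classification(n_samples=200, n_features=10,
                                   n_informative=6, n_classes=3,
                                   random_state=0)
X_reg, y_reg = make_regression(n_samples=200, n_features=10,
                               noise=5.0, random_state=0)

with warnings.catch_warnings():
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    clf = MLPClassifier(hidden_layer_sizes=(16,), max_iter=50,
                        random_state=0).fit(X_clf, y_clf)
    reg = MLPRegressor(hidden_layer_sizes=(16,), max_iter=50,
                       random_state=0).fit(X_reg, y_reg)

print("Classifier output activation:", clf.out_activation_)
print("Regressor output activation:", reg.out_activation_)
```

For a three-class problem the classifier reports a softmax output, while the regressor reports the identity function.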

Implementing regression with MLP

Here’s a comprehensive example of using MLPs for regression:

from sklearn.neural_network import MLPRegressor

from sklearn.datasets import make_regression

from sklearn.metrics import mean_squared_error, r2_score

import matplotlib.pyplot as plt

# Generate synthetic regression data

X, y = make_regression(

n_samples=1000,

n_features=10,

n_informative=8,

noise=10,

random_state=42

)

# Split data

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, random_state=42

)

# Scale features

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

# Create and train MLP regressor

mlp_reg = MLPRegressor(

hidden_layer_sizes=(100, 50, 25),

activation='relu',

solver='adam',

learning_rate_init=0.001,

max_iter=1000,

random_state=42

)

mlp_reg.fit(X_train_scaled, y_train)

# Make predictions

y_pred = mlp_reg.predict(X_test_scaled)

# Evaluate

mse = mean_squared_error(y_test, y_pred)

r2 = r2_score(y_test, y_pred)

print(f"Mean Squared Error: {mse:.4f}")

print(f"R² Score: {r2:.4f}")

# Visualize predictions

plt.figure(figsize=(10, 6))

plt.scatter(y_test, y_pred, alpha=0.5)

plt.plot([y_test.min(), y_test.max()],

[y_test.min(), y_test.max()],

'r--', lw=2)

plt.xlabel('Actual Values')

plt.ylabel('Predicted Values')

plt.title('MLP Regressor: Actual vs Predicted')

plt.show()

Advanced regression example: Housing price prediction

Let’s create a more realistic example predicting house prices:

from sklearn.datasets import fetch_california_housing

# Load California housing dataset

housing = fetch_california_housing()

X, y = housing.data, housing.target

# Split and preprocess

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, random_state=42

)

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

# Build MLP regressor with early stopping

mlp_housing = MLPRegressor(

hidden_layer_sizes=(150, 100, 50),

activation='relu',

solver='adam',

learning_rate_init=0.001,

max_iter=500,

early_stopping=True,

validation_fraction=0.1,

random_state=42

)

# Train the model

mlp_housing.fit(X_train_scaled, y_train)

# Predictions and evaluation

y_pred_train = mlp_housing.predict(X_train_scaled)

y_pred_test = mlp_housing.predict(X_test_scaled)

print("Training Set Performance:")

print(f"MSE: {mean_squared_error(y_train, y_pred_train):.4f}")

print(f"R²: {r2_score(y_train, y_pred_train):.4f}")

print("\nTest Set Performance:")

print(f"MSE: {mean_squared_error(y_test, y_pred_test):.4f}")

print(f"R²: {r2_score(y_test, y_pred_test):.4f}")

5. MLP in machine learning: Practical considerations

Feature engineering for MLPs

While MLP models can learn complex patterns, proper feature engineering significantly improves performance. Key considerations include:

Feature scaling: MLPs are sensitive to feature scales. Always normalize or standardize features:

from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Standardization (zero mean, unit variance)

scaler = StandardScaler()

X_standardized = scaler.fit_transform(X)

# Normalization (scale to [0, 1])

normalizer = MinMaxScaler()

X_normalized = normalizer.fit_transform(X)

Handling categorical variables: Convert categorical features to numerical representations:

from sklearn.preprocessing import OneHotEncoder

import pandas as pd

# Example with mixed data types

data = pd.DataFrame({

'color': ['red', 'blue', 'green', 'red'],

'size': [10, 20, 15, 12],

'price': [100, 200, 150, 120]

})

# One-hot encode categorical variables

# sparse_output replaces the older sparse argument (scikit-learn 1.2 and later)

encoder = OneHotEncoder(sparse_output=False, drop='first')

color_encoded = encoder.fit_transform(data[['color']])

# Combine with numerical features

X_processed = np.hstack([color_encoded, data[['size', 'price']].values])
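An alternative to stacking arrays by hand is scikit-learn’s ColumnTransformer, which applies a different transformer to each group of columns and concatenates the results. The sketch below reuses the same toy table; the step names `categorical` and `numeric` are arbitrary labels, and scaling the numeric columns in the same step is an addition for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, StandardScaler

data = pd.DataFrame({
    "color": ["red", "blue", "green", "red"],
    "size": [10, 20, 15, 12],
    "price": [100, 200, 150, 120],
})

preprocessor = ColumnTransformer(
    transformers=[
        # One-hot encode the categorical column, dropping the first category
        ("categorical", OneHotEncoder(drop="first"), ["color"]),
        # Standardize the numeric columns
        ("numeric", StandardScaler(), ["size", "price"]),
    ],
    sparse_threshold=0,  # always return a dense array
)

X_processed = preprocessor.fit_transform(data)
print(X_processed.shape)
print(np.round(X_processed, 2))
```

Three colors with the first category dropped give two indicator columns, followed by the two standardized numeric columns. Fitting the preprocessor on training data only and then calling `transform` on test data keeps both splits consistent.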

Preventing overfitting

An MLP, especially one with many layers, can easily overfit training data. Strategies to prevent overfitting include:

Early stopping: Monitor validation loss and stop training when it stops improving:

mlp = MLPClassifier(

hidden_layer_sizes=(100, 50),

early_stopping=True,

validation_fraction=0.2,

n_iter_no_change=10, # Stop after 10 iterations without improvement

random_state=42

)

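Dropout: scikit-learn’s MLP estimators do not provide dropout, but it is a standard regularizer in deep learning frameworks. During training it randomly zeroes a fraction of the activations so the network cannot rely too heavily on any single unit.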
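Since scikit-learn offers no dropout option, the idea is easiest to show in plain NumPy. The function below is a hypothetical sketch of the common "inverted dropout" variant, not the implementation of any particular framework: kept units are scaled up during training so that no rescaling is needed at prediction time.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero units with probability drop_prob and rescale the rest."""
    if not training or drop_prob == 0.0:
        return activations  # at prediction time the layer is a no-op
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
hidden = np.ones((2, 8))  # stand-in for a batch of hidden activations

dropped = dropout(hidden, drop_prob=0.5, rng=rng)
unchanged = dropout(hidden, drop_prob=0.5, rng=rng, training=False)

print(dropped)
print(unchanged)
```

With a drop probability of 0.5, every surviving activation is doubled, which keeps the expected value of each unit the same as without dropout.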

L2 regularization: Penalize large weights using the alpha parameter:

mlp_regularized = MLPClassifier(

hidden_layer_sizes=(100, 50),

alpha=0.01, # L2 regularization strength

random_state=42

)

Training monitoring and visualization

Monitoring the training process helps diagnose issues:

import matplotlib.pyplot as plt

# Train MLP and track loss

mlp = MLPClassifier(

hidden_layer_sizes=(100, 50),

max_iter=500,

random_state=42

)

mlp.fit(X_train_scaled, y_train)

# Plot loss curve

plt.figure(figsize=(10, 6))

plt.plot(mlp.loss_curve_)

plt.xlabel('Iteration')

plt.ylabel('Loss')

plt.title('MLP Training Loss Curve')

plt.grid(True)

plt.show()
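When early stopping is enabled, scikit-learn also records the score on its internal validation split, which is often more informative than training loss alone. The sketch below prints both side by side; the dataset and model settings are illustrative assumptions.

```python
import warnings

from sklearn.datasets import make_classification
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=500, n_features=20,
                           n_informative=15, n_redundant=5,
                           n_classes=3, random_state=42)
X_scaled = StandardScaler().fit_transform(X)

mlp = MLPClassifier(
    hidden_layer_sizes=(50,),
    early_stopping=True,       # hold out part of the training data for validation
    validation_fraction=0.2,
    n_iter_no_change=10,
    max_iter=200,
    random_state=42,
)

with warnings.catch_warnings():
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    mlp.fit(X_scaled, y)

# loss_curve_ holds training loss per epoch; validation_scores_ holds validation accuracy
for epoch, (loss, val_score) in enumerate(
        zip(mlp.loss_curve_, mlp.validation_scores_), start=1):
    if epoch % 10 == 0:
        print(f"Epoch {epoch}: train loss = {loss:.4f}, val accuracy = {val_score:.4f}")

print("Epochs run:", mlp.n_iter_)
print(f"Best validation accuracy: {mlp.best_validation_score_:.4f}")
```

A training loss that keeps falling while validation accuracy flattens or drops is the usual sign of overfitting.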

6. Building a custom MLP from scratch

Complete MLP implementation

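Building an MLP from scratch is one of the best ways to understand how it works. Here’s a complete implementation whose model code uses only NumPy (the usage example borrows scikit-learn for data generation and scaling):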

import numpy as np

class CustomMLP:
    def __init__(self, layer_sizes, learning_rate=0.01, epochs=100):
        """
        Initialize MLP with specified architecture
        layer_sizes: list of integers (e.g., [784, 128, 64, 10])
        """
        self.layer_sizes = layer_sizes
        self.learning_rate = learning_rate
        self.epochs = epochs
        self.weights = []
        self.biases = []

        # Initialize weights and biases
        for i in range(len(layer_sizes) - 1):
            w = np.random.randn(layer_sizes[i], layer_sizes[i+1]) * 0.01
            b = np.zeros((1, layer_sizes[i+1]))
            self.weights.append(w)
            self.biases.append(b)

    def relu(self, x):
        """ReLU activation function"""
        return np.maximum(0, x)

    def relu_derivative(self, x):
        """Derivative of ReLU"""
        return (x > 0).astype(float)

    def softmax(self, x):
        """Softmax activation for output layer"""
        exp_x = np.exp(x - np.max(x, axis=1, keepdims=True))
        return exp_x / np.sum(exp_x, axis=1, keepdims=True)

    def forward(self, X):
        """Forward propagation"""
        self.activations = [X]
        self.z_values = []

        # Hidden layers use ReLU
        for i in range(len(self.weights) - 1):
            z = np.dot(self.activations[-1], self.weights[i]) + self.biases[i]
            self.z_values.append(z)
            a = self.relu(z)
            self.activations.append(a)

        # Output layer
        z = np.dot(self.activations[-1], self.weights[-1]) + self.biases[-1]
        self.z_values.append(z)
        a = self.softmax(z)
        self.activations.append(a)

        return self.activations[-1]

    def backward(self, X, y):
        """Backpropagation"""
        m = X.shape[0]

        # Convert y to one-hot encoding
        y_onehot = np.zeros((m, self.layer_sizes[-1]))
        y_onehot[np.arange(m), y] = 1

        # Output layer gradient (softmax combined with cross-entropy)
        dz = self.activations[-1] - y_onehot

        # Initialize gradient lists
        dw = []
        db = []

        # Backpropagate through layers
        for i in range(len(self.weights) - 1, -1, -1):
            dw_i = np.dot(self.activations[i].T, dz) / m
            db_i = np.sum(dz, axis=0, keepdims=True) / m
            dw.insert(0, dw_i)
            db.insert(0, db_i)

            if i > 0:
                dz = np.dot(dz, self.weights[i].T) * self.relu_derivative(self.z_values[i-1])

        return dw, db

    def update_parameters(self, dw, db):
        """Update weights and biases"""
        for i in range(len(self.weights)):
            self.weights[i] -= self.learning_rate * dw[i]
            self.biases[i] -= self.learning_rate * db[i]

    def fit(self, X, y):
        """Train the MLP"""
        for epoch in range(self.epochs):
            # Forward pass
            output = self.forward(X)

            # Backward pass
            dw, db = self.backward(X, y)

            # Update parameters
            self.update_parameters(dw, db)

            # Calculate loss (from the outputs computed before this update)
            if epoch % 10 == 0:
                loss = -np.mean(np.log(output[np.arange(len(y)), y] + 1e-8))
                accuracy = np.mean(np.argmax(output, axis=1) == y)
                print(f"Epoch {epoch}: Loss = {loss:.4f}, Accuracy = {accuracy:.4f}")

    def predict(self, X):
        """Make predictions"""
        output = self.forward(X)
        return np.argmax(output, axis=1)

# Example usage
if __name__ == "__main__":
    # Generate sample data
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler

    X, y = make_classification(n_samples=1000, n_features=20,
                               n_informative=15, n_classes=3,
                               random_state=42)

    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42
    )

    # Normalize
    scaler = StandardScaler()
    X_train = scaler.fit_transform(X_train)
    X_test = scaler.transform(X_test)

    # Train custom MLP
    mlp = CustomMLP(layer_sizes=[20, 64, 32, 3], learning_rate=0.1, epochs=100)
    mlp.fit(X_train, y_train)

    # Test
    predictions = mlp.predict(X_test)
    accuracy = np.mean(predictions == y_test)
    print(f"\nFinal Test Accuracy: {accuracy:.4f}")

7. Advanced topics and best practices

Comparing MLPs with other algorithms

The feedforward neural network architecture of MLPs offers unique advantages and disadvantages compared to other machine learning algorithms:

MLPs vs Decision Trees:

- MLPs excel with continuous features and complex non-linear relationships

- Decision trees are more interpretable but can overfit easily

- MLPs require more data and computational resources

MLPs vs Support Vector Machines:

- MLPs generally scale better to large training sets, since kernel SVM training cost grows faster than linearly with the number of samples

- SVMs with kernel tricks can achieve similar non-linear capabilities

- MLPs are more flexible for multi-output problems
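The multi-output point is easy to demonstrate: scikit-learn’s MLPRegressor accepts a two-dimensional target and predicts all outputs from one shared network. The sketch below uses synthetic data with two targets; the sizes and settings are assumptions for illustration.

```python
import warnings

from sklearn.datasets import make_regression
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPRegressor

# Y has shape (n_samples, 2): two continuous targets per sample
X, Y = make_regression(n_samples=300, n_features=8, n_targets=2,
                       noise=5.0, random_state=0)

reg = MLPRegressor(hidden_layer_sizes=(32,), max_iter=200, random_state=0)

with warnings.catch_warnings():
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    reg.fit(X, Y)

predictions = reg.predict(X[:5])
print("Prediction shape:", predictions.shape)
print("Number of outputs:", reg.n_outputs_)
```

A standard SVM regressor predicts a single target, so the same task would typically need one model per output.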

Choosing architecture depth and width

Determining the optimal number of layers and neurons is crucial:

Deep vs Wide networks:

- Deeper networks (more layers) learn hierarchical features

- Wider networks (more neurons per layer) increase capacity within each layer

- Start simple and gradually increase complexity

Rule of thumb:

- For classification: hidden layer size between input and output size

- Multiple smaller layers often outperform single large layers

- Use validation performance to guide architecture decisions

# Example: Architecture search using cross-validation on the training set
from sklearn.model_selection import cross_val_score

architectures = [
    (50,),
    (100,),
    (50, 25),
    (100, 50),
    (100, 50, 25),
    (150, 100, 50)
]

best_score = 0
best_arch = None

for arch in architectures:
    mlp = MLPClassifier(
        hidden_layer_sizes=arch,
        max_iter=500,
        random_state=42
    )
    # Score on held-out folds of the training data so the test set stays untouched
    score = cross_val_score(mlp, X_train_scaled, y_train, cv=5).mean()
    print(f"Architecture {arch}: CV score = {score:.4f}")

    if score > best_score:
        best_score = score
        best_arch = arch

print(f"\nBest architecture: {best_arch} with CV score: {best_score:.4f}")

Common pitfalls and solutions

Vanishing gradients: In deep networks, gradients can become very small. Solutions include:

- Use ReLU activation instead of sigmoid

- Implement batch normalization

- Use residual connections for very deep networks
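A quick calculation shows why sigmoid activations are prone to this. The sketch below considers only the activation-derivative factors in the chain rule and ignores the weight terms, which is a simplifying assumption; the depth of 10 layers is arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1.0 - s)

# The sigmoid derivative peaks at x = 0
max_sigmoid_grad = sigmoid_derivative(0.0)

n_layers = 10
# Best case for sigmoid: every layer contributes its maximum derivative
sigmoid_bound = max_sigmoid_grad ** n_layers
# ReLU's derivative is exactly 1 for any active unit
relu_factor = 1.0 ** n_layers

print(f"Max sigmoid derivative: {max_sigmoid_grad}")
print(f"Sigmoid factor after {n_layers} layers: {sigmoid_bound:.2e}")
print(f"ReLU factor after {n_layers} layers: {relu_factor}")
```

Even in the best case, ten sigmoid layers scale the gradient by less than one millionth, while active ReLU units pass it through unchanged.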

Convergence issues: The model doesn’t learn effectively. Solutions:

- Adjust learning rate (try different values: 0.001, 0.01, 0.1)

- Increase training iterations

- Try different solvers (adam, sgd, lbfgs)

- Check feature scaling

Overfitting: Model performs well on training but poorly on test data:

- Increase regularization (alpha parameter)

- Reduce network complexity

- Add more training data

- Implement early stopping

8. Knowledge Check

Quiz 1: The Original Perceptron

Quiz 2: Single-Layer Perceptron Limitations

Quiz 3: Core MLP Architecture

Quiz 4: The Role of Activation Functions

Quiz 5: The Learning Mechanism

Quiz 6: Classifier vs. Regressor

Quiz 7: Key Hyperparameters

Quiz 8: Overfitting Prevention

The n_iter_no_change parameter specifies how many epochs to wait without improvement before stopping.

Quiz 9: Data Preprocessing

Standardization (using StandardScaler) and Normalization (using MinMaxScaler).